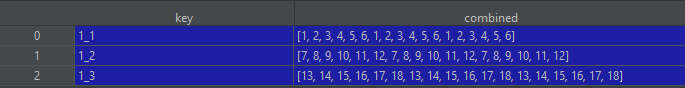

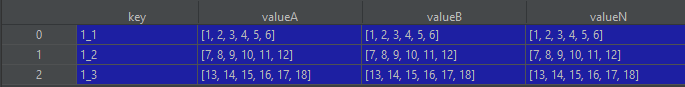

I have a dataframe of lists that looks similar to the one below (fig a). There is a single key column followed by n columns containing lists. My goal is that for each row, I will combine the lists from each column (excluding the key) into a single list in the new column, combined. An example of my desired result is below in figure B.

I've tried some methods with iteritems(), but these dataframes have the potential to be hundreds of thousands to millions of rows long which made it incredibly slow. So I am trying to avoid solutions that use that.

I'd like to use something like the list comprehension seen in

Figure B

Edit: I really appreciate all the answers I have gotten so far! I'm currently going through and timing each on my full size dataset so I can figure out which one will work best this this case. I'll update with my result shortly!

Edit 2: I tested the main solutions provided here on a few of my larger datasets and their average times are below.

# Lambda/Apply a nested list comprehension

shakiba.mrd: 1.12 s

# Sum columns

jfaccioni: 2.21 s

# Nested list comprehension with iterrows

mozway: 0.95 s

# Adding column lists together

politinsa: 3.50 s

Thanks again to everyone for their contributions!

CodePudding user response:

You can add lists in python

df['combined'] = df['valueA'] df['valueB'] df['valueN']

Or for multiple columns:

df['combined'] = [[] for _ in range(len(df))]

for letter in ['A', 'B', 'C', ...., 'N']:

df['combined'] = df[f'value{letter}']

CodePudding user response:

You can use a nested list comprehension:

dataSet['combined'] = [[e for l in x for e in l]

for _,x in dataSet.filter(like='value').iterrows()]

Output:

key valueA valueB valueN combined

0 1_1 [1, 2, 3, 4, 5, 6] [1, 2, 3, 4, 5, 6] [1, 2, 3, 4, 5, 6] [1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6]

1 1_2 [7, 8, 9, 10, 11, 12] [7, 8, 9, 10, 11, 12] [7, 8, 9, 10, 11, 12] [7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12]

2 1_3 [13, 14, 15, 16, 17, 18] [13, 14, 15, 16, 17, 18] [13, 14, 15, 16, 17, 18] [13, 14, 15, 16, 17, 18, 13, 14, 15, 16, 17, 18, 13, 14, 15, 16, 17, 18]

Timing comparison with repeated addition (100 rows, 100 columns, 1000 items per list):

# repeated addition of the lists

8.66 s ± 309 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

# nested list comprehension

729 ms ± 285 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

CodePudding user response:

First you should merge these columns into one new column:

merge_columns = list(dataSet.columns)

merge_columns.remove("key")

dataSet["combined"] = dataSet[merge_columns].values.tolist()

Then you should make a list from list of lists in each row:

dataSet["combined"] = dataSet["combined"].apply(lambda x: [item for sublist in x for item in sublist])

CodePudding user response:

You can simply select the columns that contain the lists, then sum the columns with .sum(axis=1).

It works like this:

import pandas as pd

data = {

'key': ['1_1', '1_2', '1_3'],

'valueA': [[1, 2, 3, 4, 5, 6], [7, 8, 9, 10, 11, 12], [13, 14, 15, 16, 17, 18]],

'valueB': [[1, 2, 3, 4, 5, 6], [7, 8, 9, 10, 11, 12], [13, 14, 15, 16, 17, 18]],

'valueN': [[1, 2, 3, 4, 5, 6], [7, 8, 9, 10, 11, 12], [13, 14, 15, 16, 17, 18]],

}

dataSet = pd.DataFrame(data)

columns_to_combine = ['valueA', 'valueB', 'valueN']

dataSet['combined'] = dataSet[columns_to_combine].sum(axis=1)

dataSet.drop(columns=columns_to_combine, inplace=True) # remove the old columns

print(dataSet)

# output:

# key combined

# 0 1_1 [1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6]

# 1 1_2 [7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12]

# 2 1_3 [13, 14, 15, 16, 17, 18, 13, 14, 15, 16, 17, 18, 13, 14, 15, 16, 17, 18]

CodePudding user response:

This is the easiest way to reach your goal.

dataSet['Lists'] = dataSet['valueA'] dataSet['valueB'] dataSet['valueN'] dataSet.drop(columns=['valueA','valueB',"valueN"],inplace=True) print(dataSet)