def build_model(hp):
    model = keras.Sequential()
    for i in range(hp.Int('input_shape', 2, 20)):
        model.add(layers.Dense(units=hp.Int('units_' + str(i),
                                            min_value=32,
                                            max_value=512,
                                            step=32),
                               activation='relu'))
    model.add(layers.Dense(2, activation='sigmoid'))
    model.compile(
        optimizer=keras.optimizers.Adam(
            hp.Choice('learning_rate', [1e-2, 1e-3, 1e-4])),
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model

tuner.search(X_train, y_train,
             epochs=5,
             validation_data=(X_test, y_test))

ValueError: in user code:

    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/engine/training.py", line 1051, in train_function *
        return step_function(self, iterator)
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/engine/training.py", line 1040, in step_function **
        outputs = model.distribute_strategy.run(run_step, args=(data,))
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/engine/training.py", line 1030, in run_step **
        outputs = model.train_step(data)
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/engine/training.py", line 890, in train_step
        loss = self.compute_loss(x, y, y_pred, sample_weight)
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/engine/training.py", line 948, in compute_loss
        return self.compiled_loss(
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/engine/compile_utils.py", line 201, in __call__
        loss_value = loss_obj(y_t, y_p, sample_weight=sw)
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/losses.py", line 139, in __call__
        losses = call_fn(y_true, y_pred)
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/losses.py", line 243, in call **
        return ag_fn(y_true, y_pred, **self._fn_kwargs)
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/losses.py", line 1930, in binary_crossentropy
        backend.binary_crossentropy(y_true, y_pred, from_logits=from_logits),
    File "/home/user/miniconda3/lib/python3.9/site-packages/keras/backend.py", line 5283, in binary_crossentropy
        return tf.nn.sigmoid_cross_entropy_with_logits(labels=target, logits=output)

    ValueError: `logits` and `labels` must have the same shape, received ((None, 2) vs (None, 1)).

Please help me solve the error above: `ValueError: logits and labels must have the same shape, received ((None, 2) vs (None, 1))`. I am doing binary classification here.

Answer:

When using `binary_crossentropy` with 0/1 labels, the last Dense layer must have a single unit, like this:

layers.Dense(1, activation='sigmoid')
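To see why a single unit fits, here is a minimal NumPy sketch of binary cross-entropy computed from sigmoid probabilities. It is an illustration, not Keras's implementation; the helper name `binary_crossentropy_np` and the sample values are assumptions. With one sigmoid unit the predictions have shape (batch, 1), the same as the labels, so each prediction is paired with exactly one label.

```python
import numpy as np

def binary_crossentropy_np(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy (hypothetical helper for illustration)."""
    y_true = np.asarray(y_true, dtype=float)
    y_prob = np.asarray(y_prob, dtype=float)
    # Each predicted probability must line up with exactly one label.
    if y_true.shape != y_prob.shape:
        raise ValueError(f"shape mismatch: {y_prob.shape} vs {y_true.shape}")
    # Clip to avoid log(0).
    y_prob = np.clip(y_prob, eps, 1 - eps)
    losses = -(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))
    return losses.mean()

# Labels for 4 samples, shaped (batch, 1) like y_true in the traceback.
y_true = np.array([[1], [0], [1], [0]])
# Output of a Dense(1, activation='sigmoid') layer: one probability per sample.
y_prob = np.array([[0.9], [0.2], [0.7], [0.1]])

print(binary_crossentropy_np(y_true, y_prob))
```

Passing a (4, 2) array as `y_prob`, as a two-unit output layer would produce, raises the shape error instead of computing a loss.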

Full code for finding the best hyperparameters with KerasTuner:

# !pip install keras-tuner -q
import numpy as np
import keras_tuner
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

# Random toy data: 4 features per sample, binary labels (0 or 1).
(x_train, y_train), (x_test, y_test) = ((np.random.rand(1000, 4), np.random.randint(0, 2, 1000)),
                                        (np.random.rand(100, 4), np.random.randint(0, 2, 100)))

def build_model(hp):
    model = keras.Sequential()
    n_layers = 4
    n_features = x_train.shape[1]
    model.add(keras.Input(shape=(n_features,)))
    # Tune the number of hidden layers (1 to n_layers) and the units in each one.
    for i in range(hp.Int("dense_layer", 1, n_layers)):
        model.add(layers.Dense(units=hp.Int('units_' + str(i),
                                            min_value=32,
                                            max_value=512,
                                            step=32),
                               activation='relu'))
    # A single sigmoid unit: output shape (None, 1) matches the binary labels.
    model.add(layers.Dense(1, activation='sigmoid'))
    model.compile(
        optimizer=keras.optimizers.Adam(
            hp.Choice('learning_rate', [1e-2, 1e-3, 1e-4])),
        loss='binary_crossentropy',
        metrics=['accuracy'])
    return model

# Build once with the default hyperparameter values to inspect the architecture.
hp = keras_tuner.HyperParameters()
model = build_model(hp)
model.summary()

tuner = keras_tuner.RandomSearch(
    build_model,
    max_trials=10,
    overwrite=True,
    objective="val_accuracy",
    # Set a directory to store the intermediate results.
    directory="/logs/hyp_tune/",
)

tensorboard_cb = tf.keras.callbacks.TensorBoard('/logs/hyp_tune/')

tuner.search(
    x_train, y_train,
    validation_data=(x_test, y_test),
    epochs=5,
    callbacks=[tensorboard_cb],
)
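As a rough illustration of what `RandomSearch` explores here, the pure-Python sketch below draws random configurations from the same search space that `build_model` declares: 1 to 4 Dense layers, 32 to 512 units in steps of 32, and a learning rate from {1e-2, 1e-3, 1e-4}. It is a simplification, not KerasTuner's implementation, and `sample_config` is a hypothetical helper; the real tuner also builds and trains a model for each sampled configuration and keeps the one with the best `val_accuracy`.

```python
import random

# Search space mirroring build_model (values copied from its hp.* calls).
LAYER_COUNTS = range(1, 4 + 1)        # hp.Int("dense_layer", 1, n_layers), n_layers = 4
UNIT_VALUES = range(32, 512 + 1, 32)  # hp.Int('units_' + str(i), 32, 512, step=32)
LEARNING_RATES = [1e-2, 1e-3, 1e-4]   # hp.Choice('learning_rate', ...)

def sample_config(rng):
    """Draw one random configuration (hypothetical helper, not KerasTuner's API)."""
    n = rng.choice(LAYER_COUNTS)
    config = {"dense_layer": n, "learning_rate": rng.choice(LEARNING_RATES)}
    # One units value per hidden layer, named like the hp.Int calls.
    for i in range(n):
        config[f"units_{i}"] = rng.choice(UNIT_VALUES)
    return config

rng = random.Random(0)  # fixed seed so the sampled trials are reproducible
trials = [sample_config(rng) for _ in range(10)]  # mirrors max_trials=10
for trial in trials:
    print(trial)
```

Each printed dictionary corresponds to one trial; note that the unit range includes both endpoints, giving 16 possible values per layer.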

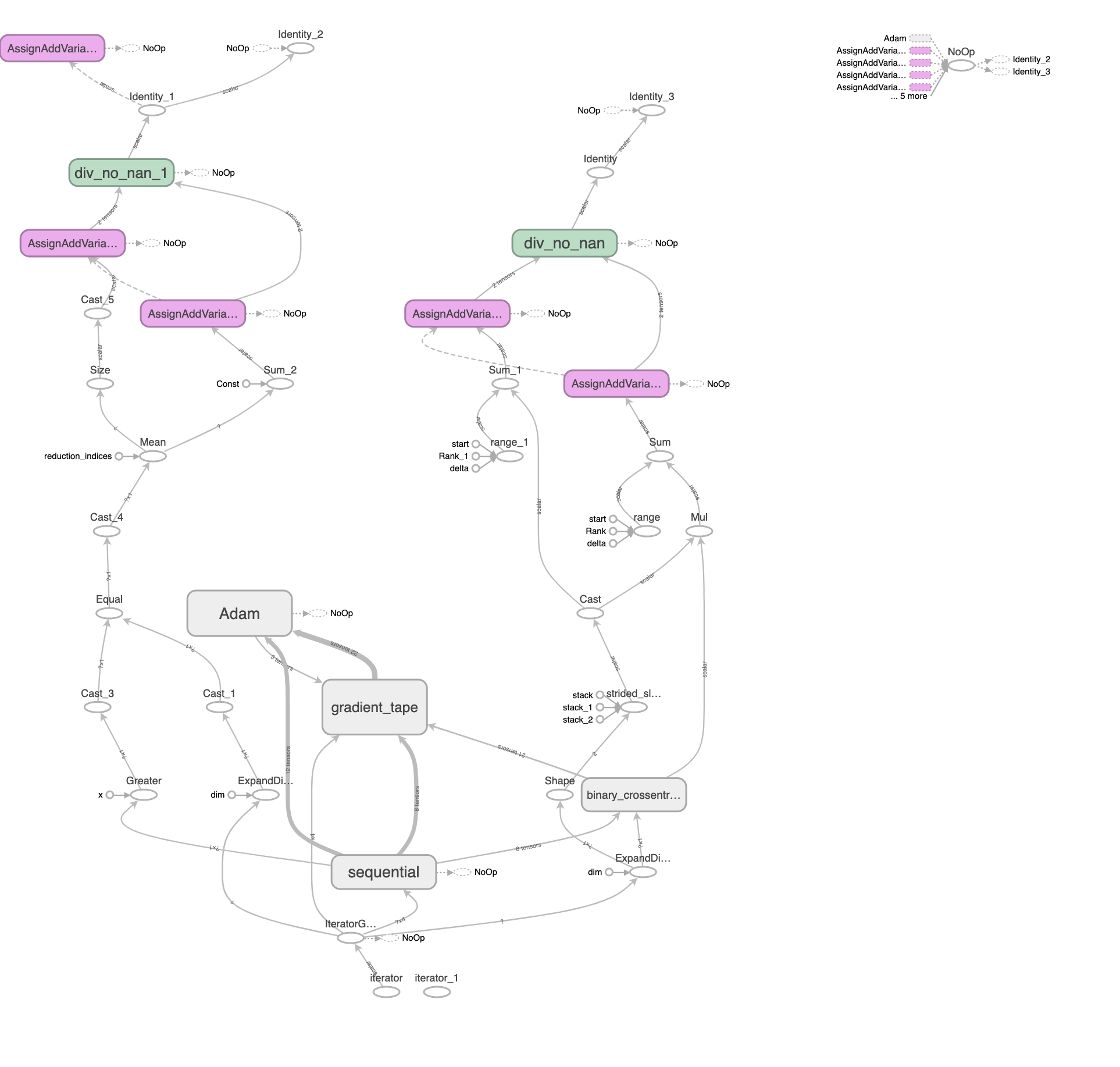

Output:

%load_ext tensorboard

%tensorboard --logdir /logs/hyp_tune/

Answer:

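With labels of shape (None, 1), binary cross-entropy expects the model to output one value per sample: the probability of the positive class. Your model outputs two values per sample (shape (None, 2)), so the shapes do not match. Change the last layer of your model to output a single value, like so: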

model.add(layers.Dense(1, activation='sigmoid'))
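The last frame of the traceback calls `tf.nn.sigmoid_cross_entropy_with_logits`, which TensorFlow documents as the element-wise, numerically stable formula `max(x, 0) - x * z + log(1 + exp(-abs(x)))` for logits `x` and labels `z`. Because the loss is computed element by element, every logit needs exactly one label, which is why the shapes must match. The NumPy sketch below mirrors that formula and the shape check; it is an illustration, not TensorFlow's code, and `sigmoid_ce_with_logits_np` is a hypothetical name.

```python
import numpy as np

def sigmoid_ce_with_logits_np(labels, logits):
    """Element-wise sigmoid cross-entropy (hypothetical NumPy illustration)."""
    labels = np.asarray(labels, dtype=float)
    logits = np.asarray(logits, dtype=float)
    # NumPy would silently broadcast (N, 1) against (N, 2); TensorFlow refuses,
    # so check the shapes explicitly to reproduce the error from the question.
    if labels.shape != logits.shape:
        raise ValueError(
            "`logits` and `labels` must have the same shape, "
            f"received ({logits.shape} vs {labels.shape})."
        )
    # Numerically stable form: max(x, 0) - x * z + log(1 + exp(-|x|)).
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))

labels = np.array([[1.0], [0.0], [1.0]])            # shape (3, 1)
logits_one_unit = np.array([[2.0], [-1.0], [0.5]])  # like Dense(1): shape (3, 1)
logits_two_units = np.zeros((3, 2))                 # like Dense(2): shape (3, 2)

print(sigmoid_ce_with_logits_np(labels, logits_one_unit).shape)

try:
    sigmoid_ce_with_logits_np(labels, logits_two_units)
except ValueError as err:
    print(err)
```

The one-unit case returns one loss value per sample, while the two-unit case fails with the same kind of message as in the question.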