I am trying to convert a SQL stored procedure to databricks notebook. In the stored procedure below 2 statements are to be implemented. Here the tables 1 and 2 are delta lake tables in databricks cluster.

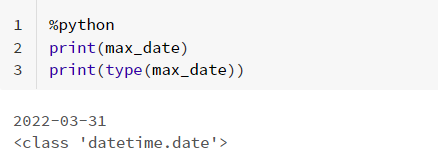

I want to use a python variable in place of max_date in SQL query. How to do it?

%sql

DELETE FROM table1 WHERE Date = max_date;

INSERT INTO table1

SELECT * FROM table2 WHERE Date = max_date;

CodePudding user response:

If you are going to run it cell by cell then you can use databricks widgets like

First cell

x=str(datetime.date.today())

dbutils.widgets.text("max_date",x)

Second cell

%sql

select getArgument("max_date") AS max_date

will give you

max_date

2022-06-04

but as mentioned here it does not work when run all is used and ideal way will be to create separate language based notebook and pass variables using %run

Other way is to use spark conf like below

First set a value for the conf [Note-the conf name should have .(dot) in it]

max_date2=str(datetime.date.today())

spark.conf.set("abc.max_dt2", max_date2)

Next try selecting value like below

%sql

select "${abc.max_dt2}" as max_date

It should give same value as above