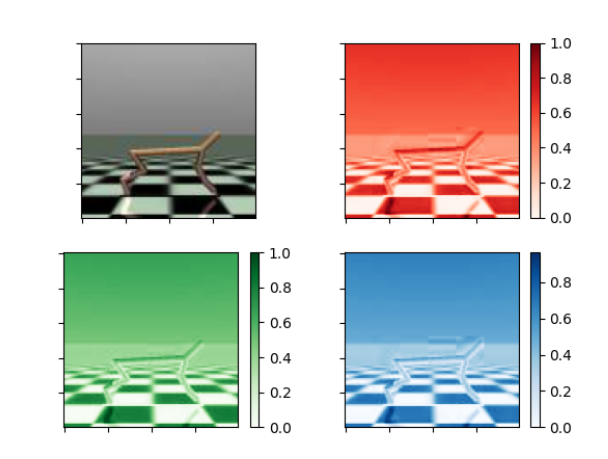

So I have this original image:

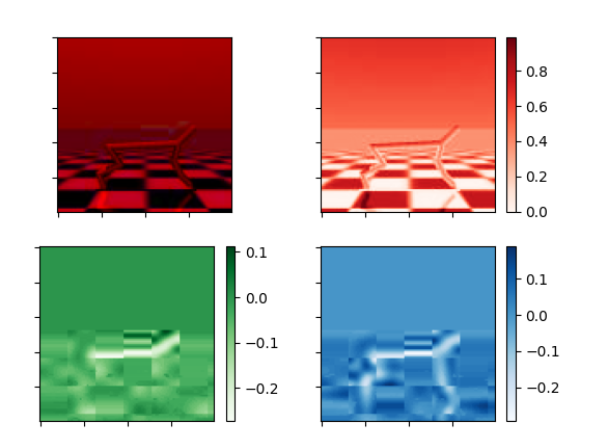

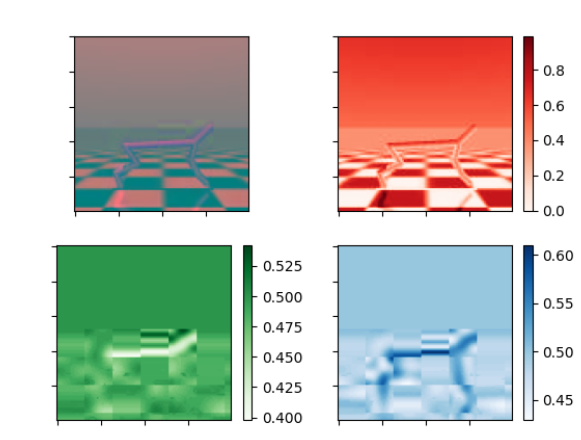

It's separated into red, green and blue channels, which I know aren't equivalent to YDbDr, but they should give a good general gauge of whether the channels have been converted correctly. As you can see, Db and Dr are both heavily pixelated when they shouldn't be.

Here's the code:

    import torch


    def RGB_to_YDbDr(obs_RGB):
        # Channel selection as written in the question
        r = obs_RGB[:, 0]
        g = obs_RGB[:, 1]
        b = obs_RGB[:, 2]

        y = 0.299 * r + 0.587 * g + 0.114 * b
        db = -0.450 * r - 0.883 * g + 1.333 * b
        dr = -1.333 * r + 1.116 * g + 0.217 * b

        return torch.stack([y, db, dr], -3)

I followed

Also seems very heavily pixelated

CodePudding user response:

Assuming that the shape of the input array is (width, height, channels), you should replace r = obs_RGB[:, 0] with r = obs_RGB[..., 0] (and likewise for g and b) and possibly torch.stack([y, db, dr], -3) with torch.stack([y, db, dr], -1).

With arr[:, 0] you presumably want to select the whole red channel, which is an array of shape (width, height); that is what arr[..., 0] does. arr[:, 0] instead takes index 0 along the second axis (the height) and keeps the channel axis, resulting in an array of shape (width, channels).
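A quick way to see the difference is to compare the resulting shapes. This is a minimal sketch on a made-up array (the 4 x 5 size is only an assumption for illustration, not the shape of your image):

```python
import numpy as np

# Hypothetical channel-last image: width=4, height=5, channels=3.
arr = np.zeros((4, 5, 3))

# Index 0 along the SECOND axis; the channel axis is kept.
print(arr[:, 0].shape)    # (4, 3)

# Index 0 along the LAST axis; this is the whole first channel.
print(arr[..., 0].shape)  # (4, 5)
```

If the first print shows something other than a 2-D plane of your image size, the channel selection is not doing what you expect.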

The torch.stack([y, db, dr], -3) line does stack the color planes, but it puts the channels in the first dimension. That is perfectly fine: some libraries follow the channel-first convention, while others, including Matplotlib (and the modifications I suggested), follow the channel-last convention.
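The effect of the stacking axis can be checked on dummy planes. The sketch below uses NumPy (np.stack takes the axis the same way for this case) and a made-up 4 x 5 plane size:

```python
import numpy as np

# Three hypothetical 2-D planes standing in for y, db and dr.
plane = np.zeros((4, 5))

channel_first = np.stack([plane, plane, plane], -3)
channel_last = np.stack([plane, plane, plane], -1)

print(channel_first.shape)  # (3, 4, 5)
print(channel_last.shape)   # (4, 5, 3)

# A channel-first array can be rearranged for plotting with moveaxis.
as_last = np.moveaxis(channel_first, 0, -1)
print(as_last.shape)        # (4, 5, 3)
```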

Finally, if your RGB values are stored as unsigned 8-bit integers in the 0-255 range, you should rescale them first, as the formulae you have require RGB to be in the [0, 1] range.
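To illustrate why the scaling matters, here is a small pure-Python sketch that converts a single pixel using the same coefficients as in the question. The helper name pixel_to_ydbdr is made up for this example:

```python
def pixel_to_ydbdr(r8, g8, b8, scale=255):
    """Convert one 8-bit RGB pixel to (Y, Db, Dr); hypothetical helper."""
    r, g, b = r8 / scale, g8 / scale, b8 / scale
    y = 0.299 * r + 0.587 * g + 0.114 * b
    db = -0.450 * r - 0.883 * g + 1.333 * b
    dr = -1.333 * r + 1.116 * g + 0.217 * b
    return y, db, dr


# With scaling, white maps to Y close to 1 and Db, Dr close to 0.
white = pixel_to_ydbdr(255, 255, 255)
print(white)

# Pure blue reaches the upper end of the Db range (about 1.333).
blue = pixel_to_ydbdr(0, 0, 255)
print(blue)

# Without scaling (scale=1), Db ends up far outside that range.
blue_unscaled = pixel_to_ydbdr(0, 0, 255, scale=1)
print(blue_unscaled)
```

With inputs in [0, 1], Y stays in [0, 1] and Db and Dr stay within roughly [-1.333, 1.333], so values in the hundreds are a sign that the scaling step was skipped.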

Some code (using only NumPy, but should be similar with torch):

    import numpy as np


    def RGB_to_YDbDr(obs_RGB):
        # Select whole channels (channel-last layout) and scale to [0, 1]
        r = obs_RGB[..., 0] / 255
        g = obs_RGB[..., 1] / 255
        b = obs_RGB[..., 2] / 255

        y = 0.299 * r + 0.587 * g + 0.114 * b
        db = -0.450 * r - 0.883 * g + 1.333 * b
        dr = -1.333 * r + 1.116 * g + 0.217 * b

        # Each plane should have the spatial shape of the input
        print(y.shape, db.shape, dr.shape)
        return np.stack([y, db, dr], -1)

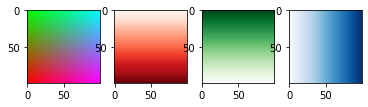

This can be tested with:

    import matplotlib.pyplot as plt

    # Synthetic test image built from smooth gradients
    x = np.zeros((100, 100), dtype=np.uint8)
    y = np.linspace(0, 255, 100).astype(np.uint8)
    img_RGB = np.stack([x + y[:, None], x + y[::-1, None], x + y[None, :]], -1)
    img_YDbDr = RGB_to_YDbDr(img_RGB)

    # Plot RGB
    fig, axs = plt.subplots(1, 4)
    axs[0].imshow(img_RGB)
    axs[1].imshow(img_RGB[..., 0], cmap=plt.cm.Reds)
    axs[2].imshow(img_RGB[..., 1], cmap=plt.cm.Greens)
    axs[3].imshow(img_RGB[..., 2], cmap=plt.cm.Blues)

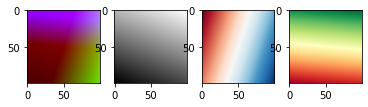

    # Plot YDbDr
    fig, axs = plt.subplots(1, 4)
    axs[0].imshow(img_YDbDr)  # this does not really work
    axs[1].imshow(img_YDbDr[..., 0], cmap=plt.cm.gray)
    axs[2].imshow(img_YDbDr[..., 1], cmap=plt.cm.RdBu)
    axs[3].imshow(img_YDbDr[..., 2], cmap=plt.cm.RdYlGn)
    plt.show()

The pixelation you observe is most likely within the input array you feed to the function.

CodePudding user response:

Look closely. Your source image is already compressed. What you see is the "chroma subsampling" that is done by image compression. You see blocks of color distortion.

That is exactly what you see in your color (chroma) planes.

No, this has nothing to do with plotting or anything like that. Your source image is compressed. You can only fix that if you have an uncompressed source image, or one that isn't so severely compressed.
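To get a feel for why subsampled chroma looks blocky, here is a rough simulation. It is only a simplified sketch: real compressors such as JPEG typically work in YCbCr rather than YDbDr and also quantize blocks, and the function name subsample_chroma and the 8 x 8 plane are made up for this example.

```python
import numpy as np


def subsample_chroma(plane, factor=2):
    """Average each factor x factor block, then repeat it to full size."""
    h, w = plane.shape
    if h % factor or w % factor:
        raise ValueError("plane size must be a multiple of factor")
    # Axes after reshape: (block row, row in block, block col, col in block)
    blocks = plane.reshape(h // factor, factor, w // factor, factor)
    low_res = blocks.mean(axis=(1, 3))
    # Nearest-neighbour upsampling back to the original size
    return np.repeat(np.repeat(low_res, factor, axis=0), factor, axis=1)


# Hypothetical smooth chroma plane: a horizontal gradient.
db = np.linspace(-1.333, 1.333, 8)[None, :] * np.ones((8, 1))
db_sub = subsample_chroma(db, factor=2)

# Neighbouring columns differ in the original...
print(np.allclose(db[:, 0], db[:, 1]))          # False
# ...but are identical within each 2 x 2 block after subsampling.
print(np.allclose(db_sub[:, 0], db_sub[:, 1]))  # True
```

The luma plane is usually kept at full resolution, which is why the image looks fine as a whole while the individual chroma planes show visible blocks.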