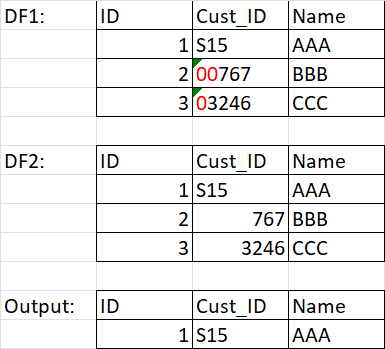

I want to join two dataframes by removing the matching records using column cust_id. Some of the cust_id have leading zeros. So I need to match by removing zeros in the 'ON' clause. Tried the below query, but it's giving error in Databricks notebook.

PS: I don't want to create another DF1 with zeros removed.

Query:

df1 = df1.join(df2,[regexp_replace("cust_id", r'^[0]*','')], "leftanti")

Py4j.Py4JException: Method and Class java.lang.string does not exist

CodePudding user response:

No Need of square brackets [ ]

df1.join(df2, regexp_replace(df2("cust_id"), r'^[0]*', lit("")))

see documentatin here regexp_replace

CodePudding user response:

The following works, but the output that you provided will not be reached using "leftanti" join: S15 matches S15 from another table, so it is removed too. In the example that you provided, "leftanti" join does not return any row.

from pyspark.sql import functions as F

df1 = spark.createDataFrame(

[(1, 'S15', 'AAA'),

(2, '00767', 'BBB'),

(3, '03246', 'CCC')],

['ID', 'cust_id', 'Name'])

df2 = spark.createDataFrame(

[(1, 'S15', 'AAA'),

(2, '767', 'BBB'),

(3, '3246', 'CCC')],

['ID', 'cust_id', 'Name'])

df = df1.join(df2, df2.cust_id == F.regexp_replace(df1.cust_id, r'^0*', ''), "leftanti")

df.show()

# --- ------- ----

# | ID|cust_id|Name|

# --- ------- ----

# --- ------- ----