This is my text file:

1|16255|usa|Test||TEST806282|||||

2|16255|806282|company_member|False|true

3|16255|my_data

1|18299|usa|Test||TEST260092|||||

2|18299|260092|company_member|False|False

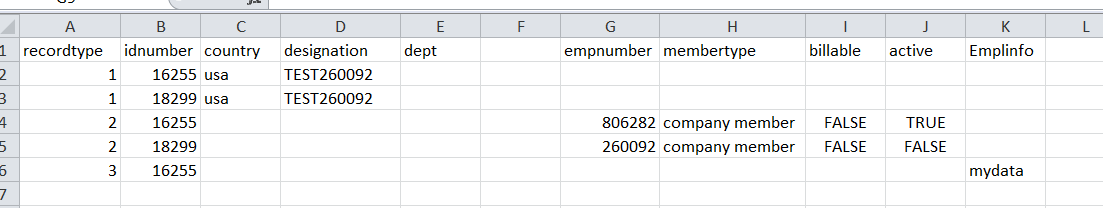

I want to insert this data into different columns in a single table based on the key (1,2,3,1,2) using Spark SQL.

Desired output:

CodePudding user response:

Thank you for the clarifications. Something along the following lines should do the trick. Text files are best read using rdds.

rdd = spark.sparkContext.textFile('my_path/my_file.txt')

rdd = rdd.map(lambda k: k.split('|'))

def relocate(e):

if e[0] == '1':

return [e[0], e[1], e[2], e[5], '', '', '', '', '', '']

elif e[0] == '2':

return [e[0], e[1], '', '', '', e[2], e[3], e[4], e[5], '']

elif e[0] == '3':

return [e[0], e[1], '', '', '', '', '', '', '', e[2]]

rdd = rdd.map(relocate)

df = rdd.toDF(['recordtype', 'idnumber', 'country', 'designation', 'dept', 'empnumber', 'membertype', 'billable', 'active', 'Emplinfo'])

df.show()

# ---------- -------- ------- ----------- ---- --------- -------------- -------- ------ --------

# |recordtype|idnumber|country|designation|dept|empnumber| membertype|billable|active|Emplinfo|

# ---------- -------- ------- ----------- ---- --------- -------------- -------- ------ --------

# | 1| 16255| usa| TEST806282| | | | | | |

# | 2| 16255| | | | 806282|company_member| False| true| |

# | 3| 16255| | | | | | | | my_data|

# | 1| 18299| usa| TEST260092| | | | | | |

# | 2| 18299| | | | 260092|company_member| False| False| |

# ---------- -------- ------- ----------- ---- --------- -------------- -------- ------ --------