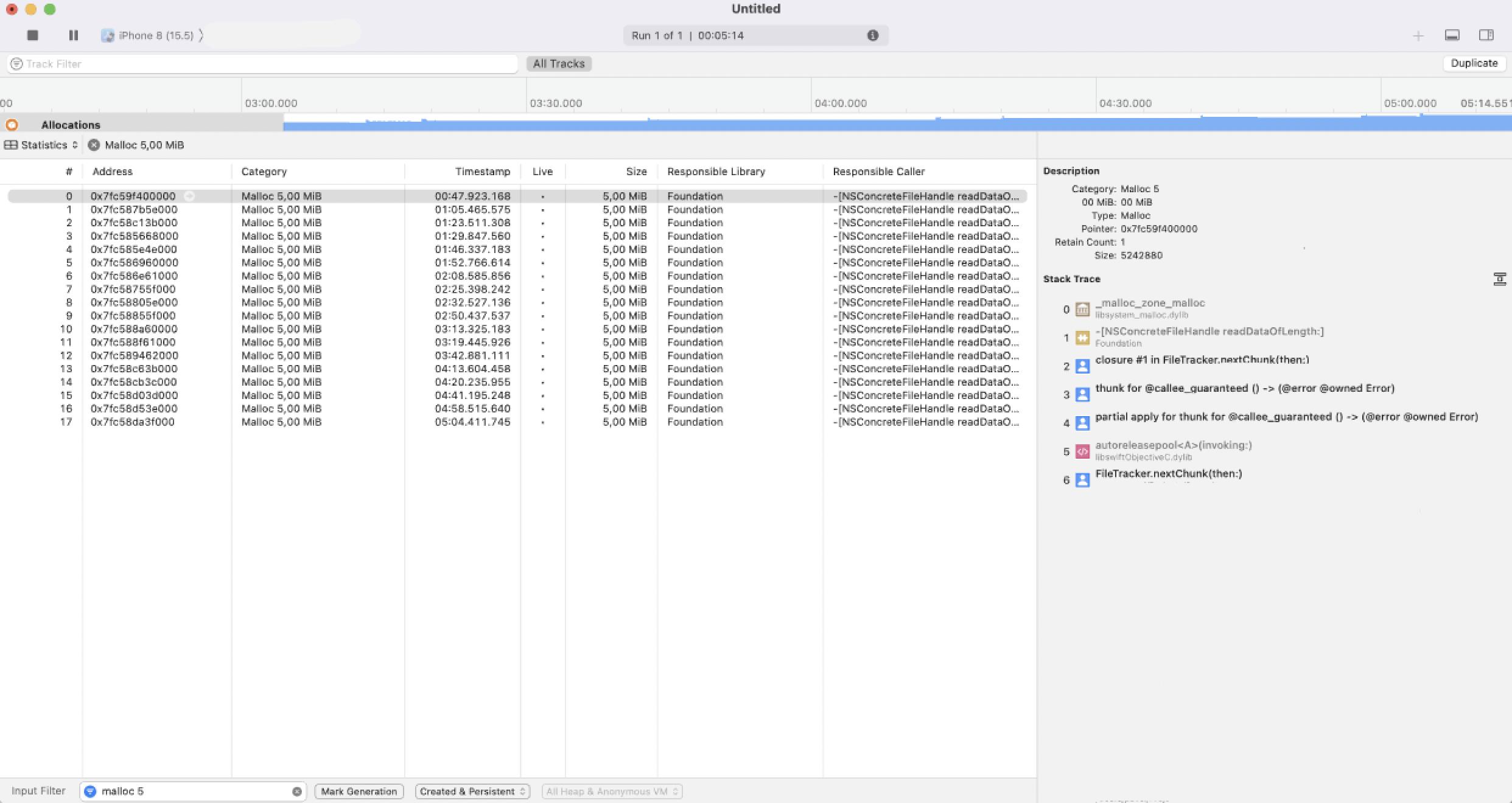

I want to send a large file to a server, split into chunks. Memory consumption is high when I call FileHandle.readData(ofLength:). The memory for each chunk is not deallocated, and after some time I get an out-of-memory exception and the app crashes. The profiler points to FileHandle.readData(ofLength:) as the source of the problem (see screenshots).

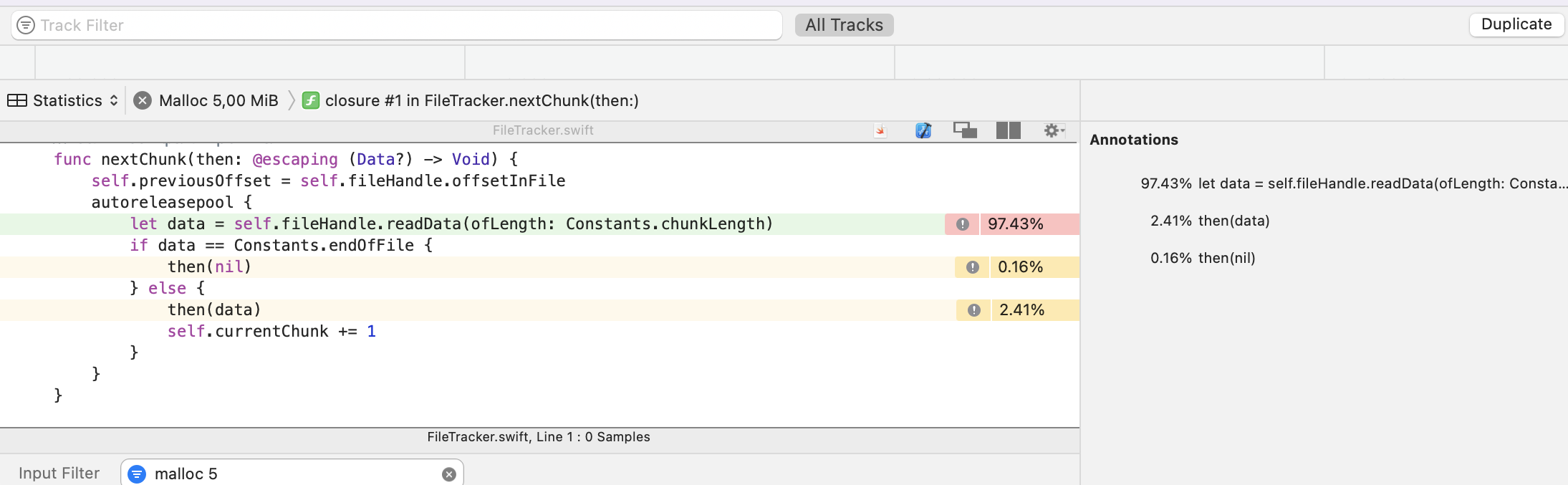

    func nextChunk(then: @escaping (Data?) -> Void) {
        self.previousOffset = self.fileHandle.offsetInFile
        autoreleasepool {
            let data = self.fileHandle.readData(ofLength: Constants.chunkLength)
            if data == Constants.endOfFile {
                then(nil)
            } else {
                then(data)
                self.currentChunk = 1
            }
        }
    }

Answer:

The allocations tool is simply showing you where the unreleased memory was initially allocated. It is up to you to figure out what you subsequently did with that object and why it was not released in a timely manner. None of the profiling tools can help you with that. They can only point to where the object was originally allocated, which is only the starting point for your research.

One possible problem might be if you are creating Data-based URLRequest objects. That means that while the associated URLSessionTask requests are in progress, the Data is held in memory. If so, you might consider using a file-based uploadTask instead. That avoids holding the Data associated with the body of the request in memory.
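To illustrate the file-based approach, here is a minimal sketch using `URLSession.uploadTask(with:fromFile:completionHandler:)`. The helper names `makeUploadRequest` and `uploadFile`, the POST method, and the content type are assumptions for illustration; they are not taken from the question, and your server may expect something different.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking  // only needed on non-Apple platforms
#endif

/// Builds a request that deliberately has no `httpBody`:
/// the body is supplied from a file when the upload task is created.
/// The HTTP method and content type are assumed, not taken from the question.
func makeUploadRequest(to endpoint: URL) -> URLRequest {
    var request = URLRequest(url: endpoint)
    request.httpMethod = "POST"
    request.setValue("application/octet-stream", forHTTPHeaderField: "Content-Type")
    return request
}

/// Starts a file-based upload, so the app does not have to load
/// the file into a `Data` value itself.
@discardableResult
func uploadFile(at fileURL: URL,
                to endpoint: URL,
                session: URLSession = .shared,
                completion: @escaping (Result<Data, Error>) -> Void) -> URLSessionUploadTask {
    let request = makeUploadRequest(to: endpoint)
    let task = session.uploadTask(with: request, fromFile: fileURL) { data, _, error in
        if let error = error {
            completion(.failure(error))
        } else {
            // A real client would also inspect the HTTP status code here.
            completion(.success(data ?? Data()))
        }
    }
    task.resume()
    return task
}
```

A call might look like `uploadFile(at: localFileURL, to: serverURL) { result in ... }`, where both URLs are your own values.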

Once you start using a file-based uploadTask, that raises the question of whether you need or want to break the file up into chunks at all. A file-based uploadTask, even when sending very large assets, requires very little RAM at runtime. And, at some future point in time, you may even consider using a background session, so the uploads will continue even if the user leaves the app. The combination of these features may obviate the chunking altogether.

As you may have surmised, the autoreleasepool may be unnecessary. It is intended to solve a very specific problem (where one creates and releases autoreleased objects in a tight loop). I suspect your problem rests elsewhere.
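For contrast, here is a rough sketch of the tight-loop case that autoreleasepool is meant for: many chunks are read back to back in one loop, and each iteration gets its own pool. The function `totalBytes(inFileAt:chunkLength:)` is hypothetical and only counts bytes. The fallback shim is an assumption added so the snippet also compiles on platforms without the Objective-C runtime, where `autoreleasepool` is not provided.

```swift
import Foundation

#if !canImport(ObjectiveC)
// Fallback for platforms without the Objective-C runtime:
// there is no autorelease pool there, so simply run the body.
func autoreleasepool<T>(invoking body: () throws -> T) rethrows -> T {
    return try body()
}
#endif

/// Reads a file chunk by chunk in a tight loop and returns the total byte count.
/// Each iteration runs in its own autorelease pool, so any autoreleased
/// objects created by the read are drained before the next chunk is read.
func totalBytes(inFileAt url: URL, chunkLength: Int) throws -> Int {
    let handle = try FileHandle(forReadingFrom: url)
    defer { try? handle.close() }

    var total = 0
    while true {
        let count = autoreleasepool { () -> Int in
            let chunk = handle.readData(ofLength: chunkLength)
            return chunk.count
        }
        if count == 0 { break }  // an empty read means end of file
        total += count
    }
    return total
}
```

The code in the question reads only one chunk per call and then hands the Data to a closure, so a pool around that single read does not control how long the closure's recipient keeps the Data alive.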