I have a problem. I have an object detection model that detects two classes, and this is what I want to do:

- Detect class 1 (say c1) on the source image (640x640), draw its bounding box, crop the box region (c1 image), and resize the crop to 640x640. (DONE)

- Detect class 2 (say c2) on the resized c1 image (640x640). (DONE)

- Now I want to draw the bounding box of c2 on the source image.

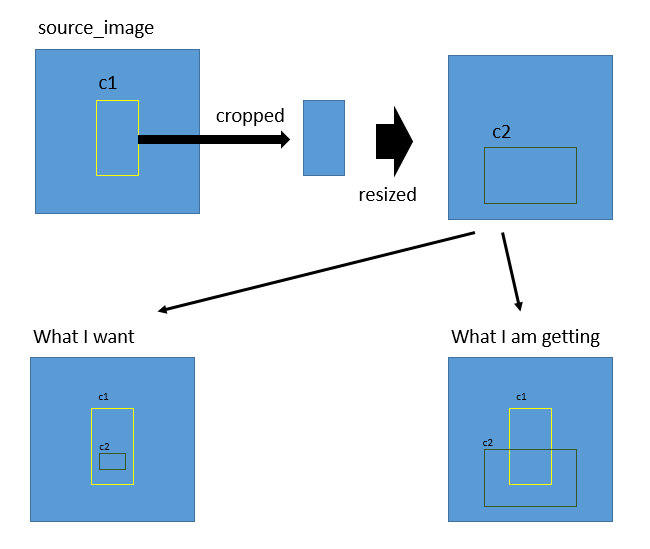

I have tried to visualize it in the image above.

How can I do it? Please help.

Code:

```python
frame = self.REC.ImgResize(frame)
frame, score1, self.FLAG1, x, y, w, h = self.Detect(frame, "c1")

# The c1 box is used as corners: (x, y) top-left, (w, h) bottom-right
if self.FLAG1 and x > 0 and y > 0:
    x1, y1 = w, h
    cv2.rectangle(frame, (x, y), (w, h), self.COLOR1, 1)
    c1Img = frame[y:h, x:w]
    # Width of the c1 crop relative to the frame, measured before resizing
    # (the original line used c2Img, which is not defined at this point)
    ratio = c1Img.shape[1] / float(frame.shape[1])
    c1Img = self.REC.ImgResize(c1Img)

    if ratio > 0.35:
        c2Img, score2, self.FLAG2, xN, yN, wN, hN = self.Detect(c1Img, "c2")

        if self.FLAG2 and xN > 0 and yN > 0:
            # What should be the values for these => (__, __),(__,__)
            cv2.rectangle(frame, (__, __), (__, __), self.COLOR2, 1)
```

I tried an approach that only recovers the (x, y) coordinates; the width and height come out wrong. Here is what I tried:

First, I computed the factors by which the width and height of the cropped c1 image changed when it was resized.

For example:

x1 = 329, y1 = 102, h1 = 637, w1 = 630

r_w = 630/640 -> 0.9843, r_h = 637/640 -> 0.9953

x2 = 158, y2 = 393, h2 = 499, w2 = 588

new_x2 = 158 * 0.9843 ~ 156

new_y2 = 393 * 0.9953 ~ 391

new_x2 = x1 + new_x2

new_y2 = y1 + new_y2

This works for (x, y), but I am still trying to find a way to get the (w, h) of the bounding box.

Answer:

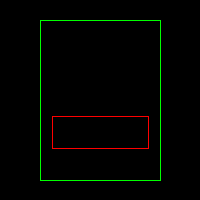

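Here is a complete example. Keep in mind that cv2.rectangle, called as below, takes the top-left and bottom-right corners of the rectangle. Since you didn't share ImgResize and Detect, I made some assumptions: the detections are hard-coded (x, y, w, h) tuples, with (x, y) the top-left corner and (w, h) the box size, and resizing is a plain cv2.resize to RESIZE_x by RESIZE_y: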

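```python
import cv2
import numpy as np

COLOR1 = (0, 255, 0)  # green (BGR) for c1
COLOR2 = (0, 0, 255)  # red (BGR) for c2

# Hard-coded stand-ins for Detect(): (x, y, w, h) = top-left corner + size
DETECT_c1 = (40, 20, 120, 160)  # in source-image pixels
DETECT_c2 = (20, 120, 160, 40)  # in pixels of the resized c1 crop

# Stand-in for ImgResize(): the size every image is resized to
RESIZE_x, RESIZE_y = 200, 200

frame = np.zeros((RESIZE_y, RESIZE_x, 3), np.uint8)

# Crop c1 (copy, so the rectangle drawn next does not leak into the crop)
x1, y1, w1, h1 = DETECT_c1
c1Img = frame[y1:y1 + h1, x1:x1 + w1].copy()
cv2.rectangle(frame, (x1, y1), (x1 + w1, y1 + h1), COLOR1, 1)

# Resize the crop; c2 is detected in this resized image
c1Img = cv2.resize(c1Img, (RESIZE_x, RESIZE_y))
x2, y2, w2, h2 = DETECT_c2

# Scale c2 by (crop size / resized size), then shift by the crop's top-left corner
x3 = x1 + int(x2 * w1 / RESIZE_x)
y3 = y1 + int(y2 * h1 / RESIZE_y)
w3 = int(w2 * w1 / RESIZE_x)
h3 = int(h2 * h1 / RESIZE_y)
cv2.rectangle(frame, (x3, y3), (x3 + w3, y3 + h3), COLOR2, 1)

cv2.imwrite('out.png', frame)
```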

Output: see image 2 at the top of this answer.
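The example above uses (x, y, w, h) boxes, while the question's code appears to use Detect's output as corners (frame[y:h, x:w]). The following is a minimal sketch of the same scale-then-shift idea for corner-format boxes (x_min, y_min, x_max, y_max), applied to the numbers from the question. The helper name crop_box_to_source is hypothetical, and it assumes the crop was resized to exactly 640x640. It also shows where the question's attempt went wrong: the scale factor has to come from the crop's size (w1 - x1, h1 - y1), not from w1 and h1 alone.

```python
def crop_box_to_source(c2_box, c1_box, resized_w, resized_h):
    """Map a box detected in the resized c1 crop back to source-image pixels.

    Both boxes are corner tuples (x_min, y_min, x_max, y_max); treating
    Detect()'s output this way is an assumption based on the question's code.
    """
    c1_x0, c1_y0, c1_x1, c1_y1 = c1_box
    # Scale from the resized crop back to the original crop size; this
    # depends on the crop's width/height, not on its corner coordinates
    scale_x = (c1_x1 - c1_x0) / resized_w
    scale_y = (c1_y1 - c1_y0) / resized_h
    c2_x0, c2_y0, c2_x1, c2_y1 = c2_box
    # Scale both corners, then shift by the crop's top-left corner
    return (
        round(c1_x0 + c2_x0 * scale_x),
        round(c1_y0 + c2_y0 * scale_y),
        round(c1_x0 + c2_x1 * scale_x),
        round(c1_y0 + c2_y1 * scale_y),
    )


# Numbers from the question: c1 box in the 640x640 source image,
# c2 box in the c1 crop after resizing it to 640x640
c1 = (329, 102, 630, 637)
c2 = (158, 393, 588, 499)
print(crop_box_to_source(c2, c1, 640, 640))  # (403, 431, 606, 519)
```

In the question's variables, the call would look like crop_box_to_source((xN, yN, wN, hN), (x, y, w, h), 640, 640), assuming ImgResize always returns a 640x640 image; the four returned values then fill the (__, __), (__, __) placeholders in order.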

Answer:

I suggest working with relative (normalized) bounding box coordinates.

If I understand correctly, your problem is that the two boxes are expressed in different reference frames: c1 in source-image pixels and c2 in pixels of the resized crop. One way around that is to normalize your bbox coordinates at each step.

c1_box is relative to your source image (640x640), so:

c1_x = x/640

c1_y = y/640

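When you crop, record the size of the cropped object relative to the main image (c1_w and c1_h are the width and height of the c1 box in pixels, img_w and img_h those of the source image):

image_vs_c1_x = c1_w / img_w

image_vs_c1_y = c1_h / img_h

Then normalize your c2 bounding box coordinates by the size of the resized crop (640), multiply them by those ratios, and add the c1 offset (c1_x, c1_y). Multiplying the result by 640 gives pixel coordinates in the source image.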
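A minimal sketch of this normalized approach, assuming corner-format boxes (x_min, y_min, x_max, y_max) and reusing the numbers from the question. The names normalize, compose, IMG_W/IMG_H, and CROP_W/CROP_H are illustrative, not part of any library. In compose, the crop's relative width and height play the role of image_vs_c1_x and image_vs_c1_y.

```python
def normalize(box, width, height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to [0, 1] coordinates."""
    x0, y0, x1, y1 = box
    return (x0 / width, y0 / height, x1 / width, y1 / height)


def compose(c2_norm, c1_norm):
    """Turn a box normalized to the c1 crop into one normalized to the
    source image: scale by the crop's relative size, then add its offset."""
    c1_x0, c1_y0, c1_x1, c1_y1 = c1_norm
    ratio_x = c1_x1 - c1_x0  # crop width / image width
    ratio_y = c1_y1 - c1_y0  # crop height / image height
    c2_x0, c2_y0, c2_x1, c2_y1 = c2_norm
    return (
        c1_x0 + c2_x0 * ratio_x,
        c1_y0 + c2_y0 * ratio_y,
        c1_x0 + c2_x1 * ratio_x,
        c1_y0 + c2_y1 * ratio_y,
    )


IMG_W = IMG_H = 640    # source image size
CROP_W = CROP_H = 640  # size the c1 crop is resized to

c1_norm = normalize((329, 102, 630, 637), IMG_W, IMG_H)
# Resizing keeps relative positions, so normalize c2 by the resized size
c2_norm = normalize((158, 393, 588, 499), CROP_W, CROP_H)
x0, y0, x1, y1 = compose(c2_norm, c1_norm)
# Back to source-image pixels only at the very end
print(round(x0 * IMG_W), round(y0 * IMG_H), round(x1 * IMG_W), round(y1 * IMG_H))
# 403 431 606 519
```

Because every intermediate value stays in [0, 1], the same code works for any source or resize size; only IMG_W/IMG_H and CROP_W/CROP_H change.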