I have a dataframe with a couple of thousand rows. I want to write a loop that splits the entire dataframe into sub-dataframes of 90 rows each and INSERTs each subset into SQL Server.

My naive approach slices a fixed 90 rows at a time by hand, which is not efficient:

    df.loc[0:89, :]
    INSERT_sql()

    df.loc[90:179, :]
    INSERT_sql()

......

Sample data:

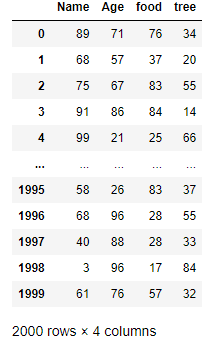

    df = pd.DataFrame(np.random.randint(0, 100, size=(2000, 4)), columns=['Name', 'Age', 'food', 'tree'])  # size controls the number of rows

My SQL Server setup has a limitation: I can insert only 90 rows per bulk insert.

CodePudding user response:

Here's a pretty verbose approach. In this case, taking your sample dataframe, it is sliced in increments of 90 rows. The first block will be 0-89, then 90-179, 180-269, etc.

    import math

    import numpy as np
    import pandas as pd

    df = pd.DataFrame(np.random.randint(0, 100, size=(2000, 4)), columns=['Name', 'Age', 'food', 'tree'])  # size controls the number of rows

    def slice_df(dataframe, row_count):
        num_rows = len(dataframe)
        num_blocks = math.ceil(num_rows / row_count)
        for i in range(num_blocks):
            start = i * row_count
            # iloc excludes the end position, so this selects rows start .. start + row_count - 1
            chunk = dataframe.iloc[start:start + row_count]
            # Do your insert command here

    slice_df(df, 90)
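
The same 90-row slicing can also be written as a generator, which keeps the chunking separate from the insert step. This is only a minimal sketch: `iter_chunks` is a hypothetical helper name, not part of pandas, and it assumes positional slicing with `iloc` is what you want. For the 2000-row sample, it produces 22 full chunks of 90 rows and a final chunk of 20.

```python
import numpy as np
import pandas as pd


def iter_chunks(dataframe, row_count):
    """Yield consecutive slices of at most row_count rows (hypothetical helper)."""
    for start in range(0, len(dataframe), row_count):
        # iloc is positional and excludes the end position, so every slice
        # holds row_count rows except possibly the last one.
        yield dataframe.iloc[start:start + row_count]


df = pd.DataFrame(np.random.randint(0, 100, size=(2000, 4)),
                  columns=['Name', 'Age', 'food', 'tree'])

chunk_sizes = [len(chunk) for chunk in iter_chunks(df, 90)]
print(len(chunk_sizes), chunk_sizes[0], chunk_sizes[-1])  # 23 90 20
```

Inside the `for chunk in iter_chunks(df, 90):` loop you would call your own insert function on `chunk`.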

CodePudding user response:

np.array_split(arr, indices)

Split an array into multiple sub-arrays using the given indices.

    for chunk in np.array_split(df, range(90, len(df), 90)):
        INSERT_sql()  # insert the current chunk here
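
Neither the question nor the answers show what `INSERT_sql()` does, so here is a rough sketch of how each chunk might reach a database. Everything database-related is an assumption: an in-memory SQLite database stands in for SQL Server, the table name `people` is made up, and `insert_chunk` is a hypothetical replacement for `INSERT_sql()`. The chunks are taken with plain `iloc` slicing so the sketch does not depend on how `np.array_split` handles DataFrames.

```python
import sqlite3

import numpy as np
import pandas as pd

df = pd.DataFrame(np.random.randint(0, 100, size=(2000, 4)),
                  columns=['Name', 'Age', 'food', 'tree'])

# Assumption: an in-memory SQLite database stands in for SQL Server.
conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE people (Name INTEGER, Age INTEGER, food INTEGER, tree INTEGER)')


def insert_chunk(connection, chunk):
    """Hypothetical stand-in for INSERT_sql(): insert one chunk as a single batch."""
    rows = chunk.values.tolist()  # plain Python ints, which sqlite3 can bind
    connection.executemany('INSERT INTO people VALUES (?, ?, ?, ?)', rows)
    connection.commit()
    return len(rows)


# One batch per 90-row slice; the last slice holds the remaining rows.
batch_sizes = [insert_chunk(conn, df.iloc[start:start + 90])
               for start in range(0, len(df), 90)]

total = conn.execute('SELECT COUNT(*) FROM people').fetchone()[0]
print(total, max(batch_sizes))  # 2000 90
```

For SQL Server you would swap the connection and the placeholder syntax for whatever your driver expects; the batching loop itself stays the same.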