I am trying to read a CSV file from HDFS using a Java program. I have searched multiple sources to resolve the issue below but still could not. Please help.

(Note: the requirement is as stated above, so I am using Java and not Python, Scala, Spark, or anything else.)

I tried something like the following:

    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStreamReader;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class hadoop2 {
        public static void main(String[] args) throws IOException {
            Configuration conf = new Configuration();
            conf.addResource(new Path("/etc/hadoop/conf/core-site.xml"));
            conf.addResource(new Path("/etc/hadoop/conf/hdfs-site.xml"));
            Path file = new Path("/usr1/myFile0.csv");
            try (FileSystem hdfs = FileSystem.get(conf)) {
                FSDataInputStream is = hdfs.open(file);
                BufferedReader br = new BufferedReader(new InputStreamReader(is, "UTF-8"));
                String lineRead = br.readLine();
                while (lineRead != null) {
                    System.out.println(lineRead);
                    lineRead = br.readLine();
                    // do whatever is needed
                }
                br.close();
                hdfs.close();
            }
        }
    }

It outputs the following error:

    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/Users/sigmoid/.m2/repository/org/slf4j/slf4j-reload4j/1.7.36/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/Users/sigmoid/.m2/repository/org/slf4j/slf4j-log4j12/1.7.25/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]
    log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).
    log4j:WARN Please initialize the log4j system properly.
    log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
    Exception in thread "main" java.io.FileNotFoundException: File /usr1/myFile0.csv does not exist
        at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:779)
        at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:1100)
        at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:769)
        at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:462)
        at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.<init>(ChecksumFileSystem.java:160)
        at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:372)
        at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:976)
        at hadoop2.main(hadoop2.java:22)

I do not understand what exactly is going wrong here or how to tackle it. I tried adding SLF4J as a Maven dependency, but that did not help.

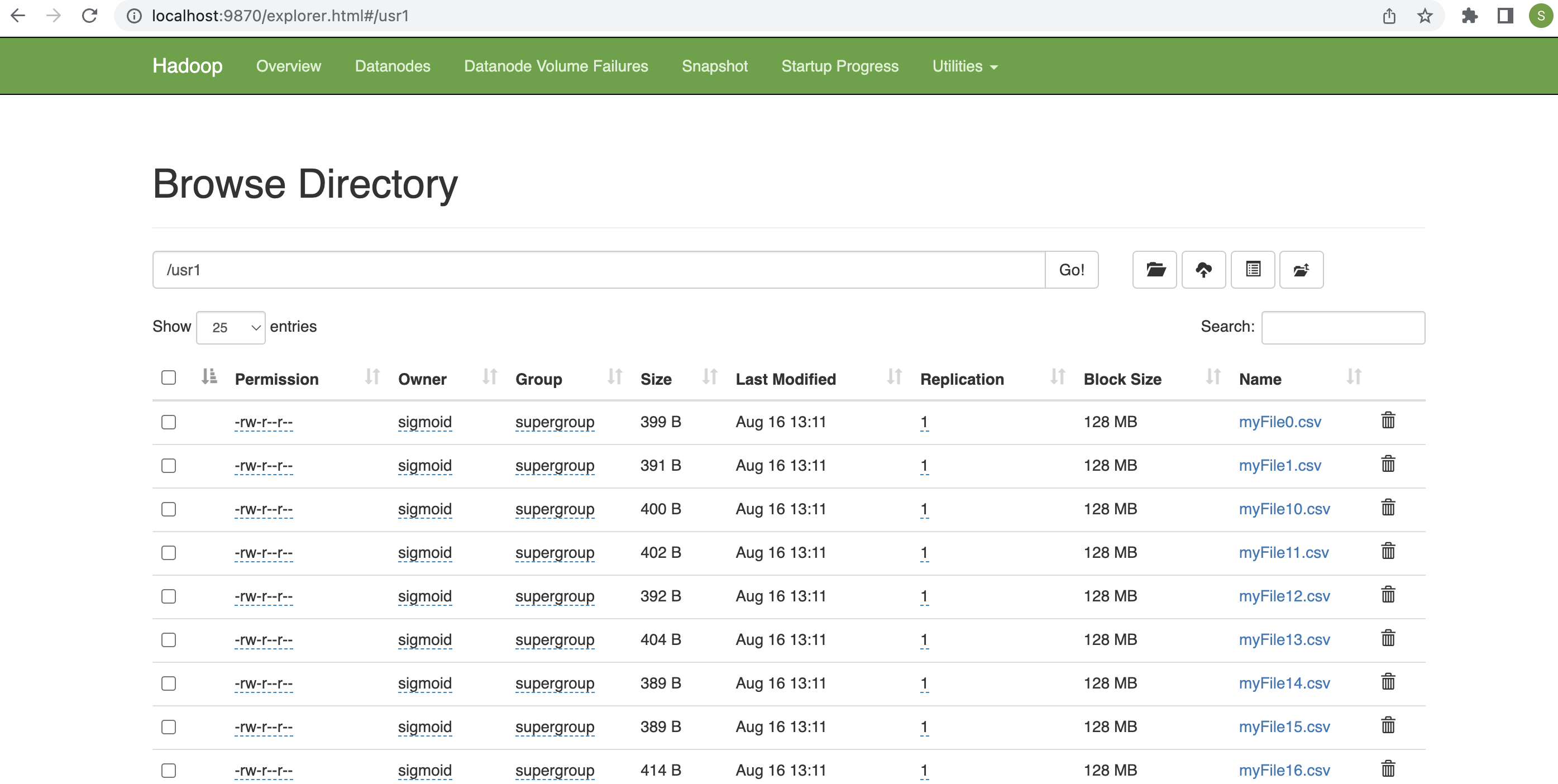

Also, the program reports "File /usr1/myFile0.csv does not exist", but the file is present, as you can see from the screenshot.

Update:

I was able to eliminate the SLF4J error. In the project's file structure, two SLF4J bindings were listed under the Maven dependencies. I deleted both by mistake and then added the latest one back.

The error is now:

    Aug 17, 2022 1:40:35 AM org.apache.hadoop.util.NativeCodeLoader <clinit>
    WARNING: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    Exception in thread "main" java.lang.IllegalArgumentException: Wrong FS: hdfs:/usr1/myFile0.csv, expected: file:///
        at org.apache.hadoop.fs.FileSystem.checkPath(FileSystem.java:807)
        at org.apache.hadoop.fs.RawLocalFileSystem.pathToFile(RawLocalFileSystem.java:105)
        at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:774)
        at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:1100)
        at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:769)
        at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:462)
        at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.<init>(ChecksumFileSystem.java:160)
        at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:372)
        at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:976)
        at hadoop2.main(hadoop2.java:22)

Answer:

I was able to resolve both problems:

SLF4J error. Resolution: as stated in the question's update, two SLF4J bindings were listed under the project's Maven dependencies. Delete one of them so that only a single binding remains.

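Error while reading. Resolution: remove the two addResource calls and set the default file system directly instead. The stack traces go through RawLocalFileSystem, which suggests the client had fallen back to the local file system (file:///), presumably because the two configuration files were not picked up from those paths on the machine running the program.

From:

    conf.addResource(new Path("/etc/hadoop/conf/core-site.xml"));
    conf.addResource(new Path("/etc/hadoop/conf/hdfs-site.xml"));

To:

    conf.set("fs.defaultFS", "hdfs://localhost:9000");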
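As a rough illustration of why the two stack traces in the question differ, the sketch below compares URI schemes using only `java.net.URI`. It is a simplified stand-in for the kind of scheme check that produces `Wrong FS`, not Hadoop's actual implementation. The class `SchemeCheckSketch` and the method `schemeMatches` are hypothetical names introduced here.

```java
import java.net.URI;

public class SchemeCheckSketch {

    // Hypothetical helper: returns true when the path has no scheme (so it
    // resolves against the default file system) or when its scheme equals
    // the scheme of the file system URI.
    static boolean schemeMatches(URI fileSystemUri, URI path) {
        String pathScheme = path.getScheme();
        if (pathScheme == null) {
            return true; // scheme-less path: resolved against the default file system
        }
        return pathScheme.equalsIgnoreCase(fileSystemUri.getScheme());
    }

    public static void main(String[] args) {
        URI localDefault = URI.create("file:///");              // default seen in the stack traces
        URI hdfsDefault = URI.create("hdfs://localhost:9000");  // value set via fs.defaultFS

        URI plainPath = URI.create("/usr1/myFile0.csv");
        URI hdfsPath = URI.create("hdfs:/usr1/myFile0.csv");

        System.out.println(schemeMatches(localDefault, plainPath)); // true
        System.out.println(schemeMatches(localDefault, hdfsPath));  // false
        System.out.println(schemeMatches(hdfsDefault, hdfsPath));   // true
    }
}
```

With `file:///` as the default, the scheme-less path passes the check and is then looked up on the local disk, which matches the "does not exist" error. The `hdfs:` path is rejected outright, which matches `Wrong FS`. Once `fs.defaultFS` points at `hdfs://localhost:9000`, the schemes agree.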