I am trying to convert a string column (yr_built) of my CSV file to the Integer data type (yr_builtInt). I tried using the cast() method, but I am still getting an error:

Python code using PySpark (in a %python cell):

from pyspark.sql.types import IntegerType

from pyspark.sql.functions import col

house5=house4.withColumn("yr_builtInt", col("yr_built").cast(IntegerType))

Below is the error output I am getting:

TypeError: unexpected type:

TypeError Traceback (most recent call last) in

1 house5=house4.withColumn("yr_builtInt", col("yr_built").cast(IntegerType))

/databricks/spark/python/pyspark/sql/column.py in cast(self, dataType)

788 jc = self._jc.cast(jdt)

789 else:

--> 790 raise TypeError("unexpected type: %s" % type(dataType))

791 return Column(jc)

792

TypeError: unexpected type: <class 'pyspark.sql.types.DataTypeSingleton'>

CodePudding user response:

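cast() accepts either a DataType instance or a type-name string. IntegerType without parentheses is the class itself rather than an instance, which is why the error reports DataTypeSingleton (the metaclass of IntegerType). You can use either of the following approaches: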

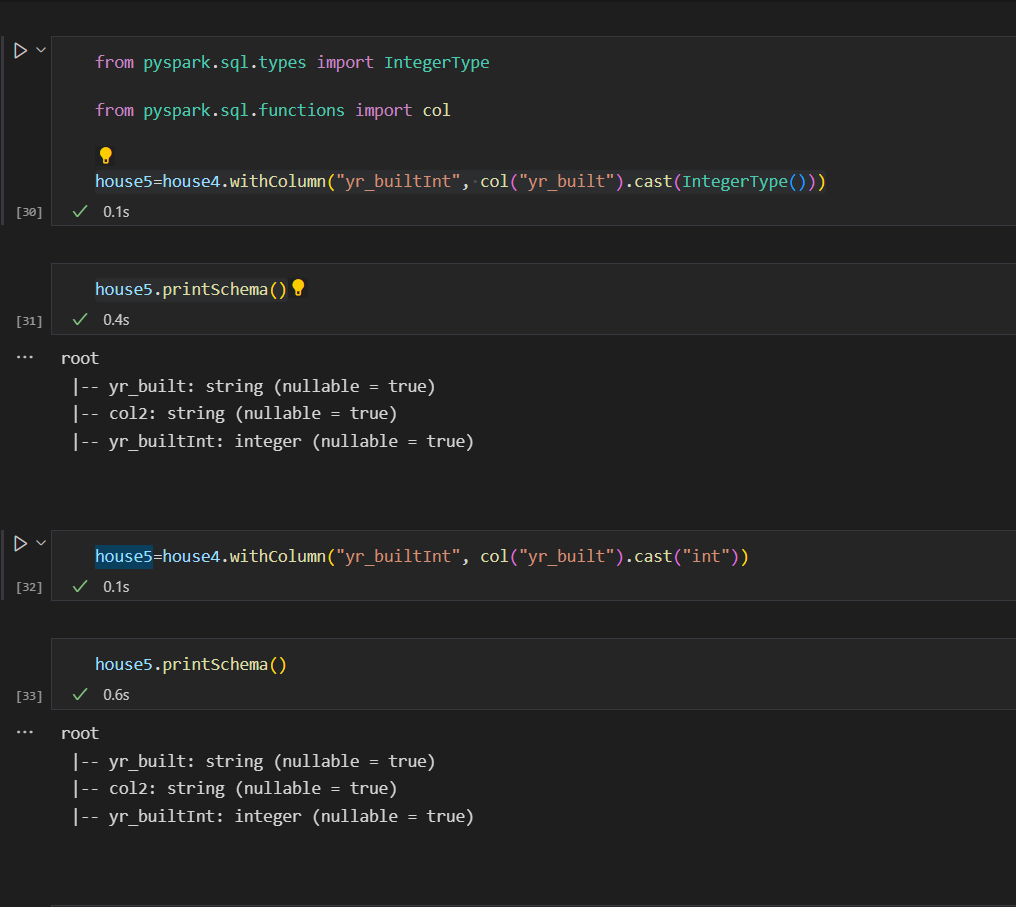

Approach 1 (pass an instance by calling IntegerType()):

house5=house4.withColumn("yr_builtInt", col("yr_built").cast(IntegerType()))

Approach 2 (pass the type name as a string):

house5=house4.withColumn("yr_builtInt", col("yr_built").cast("int"))
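
To see why the parentheses matter, here is a rough pure-Python sketch of the type check that appears in the traceback. The classes below are simplified stand-ins written for illustration, not the real PySpark implementations, and check_cast_argument is a hypothetical helper that only mirrors the isinstance branches of Column.cast(). It runs without Spark installed.

```python
# Simplified stand-ins for the PySpark classes (assumption: illustration only,
# not the real pyspark.sql.types code).
class DataTypeSingleton(type):
    """Metaclass that returns one shared instance per class."""
    _instances = {}

    def __call__(cls):
        if cls not in cls._instances:
            cls._instances[cls] = super().__call__()
        return cls._instances[cls]


class DataType:
    pass


class IntegerType(DataType, metaclass=DataTypeSingleton):
    pass


def check_cast_argument(dataType):
    """Hypothetical helper mirroring the type check seen in the traceback."""
    if isinstance(dataType, str):
        return "string type name"
    elif isinstance(dataType, DataType):
        return "DataType instance"
    else:
        # Same message format as the line flagged in the traceback.
        raise TypeError("unexpected type: %s" % type(dataType))


# The class itself is rejected; an instance or a string is accepted.
for label, arg in [("IntegerType", IntegerType),
                   ("IntegerType()", IntegerType()),
                   ('"int"', "int")]:
    try:
        print(label, "->", check_cast_argument(arg))
    except TypeError as exc:
        print(label, "-> TypeError:", exc)
```

The class IntegerType is an instance of its metaclass, not of DataType, so it falls through to the else branch. IntegerType() and "int" each match one of the accepted branches, which corresponds to Approach 1 and Approach 2 above.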