I'm facing a pretty interesting task to convert an arbitrary CSV file to a JSON structure following this schema:

{

"Data": [

["value_1", "value_2"],

["value_3", "value_4"]

]

}

In this case, the input file will look like this:

value_1,value_2

value_3,value_4

The requirement is to use Azure Data Factory and I won't be able to delegate this task to Azure Functions or other services.

I'm thinking about using 'Copy data' activity but can't get my mind around the configuration. TabularTranslator seems to only work with a definite number of columns but the CSV that I can receive can contain any number of columns.

Maybe DataFlows can help me but their setup doesn't look to be an easy one either. Plus, if I get it correctly, DataFlows take more time to start up.

So, basically, I just need to take the CSV content and put it into "Data" 2d array.

Any ideas on how to accomplish this?

CodePudding user response:

To achieve this requirement, using Copy data or TabularTranslator is complicated. This can be achieved using dataflows in the following way.

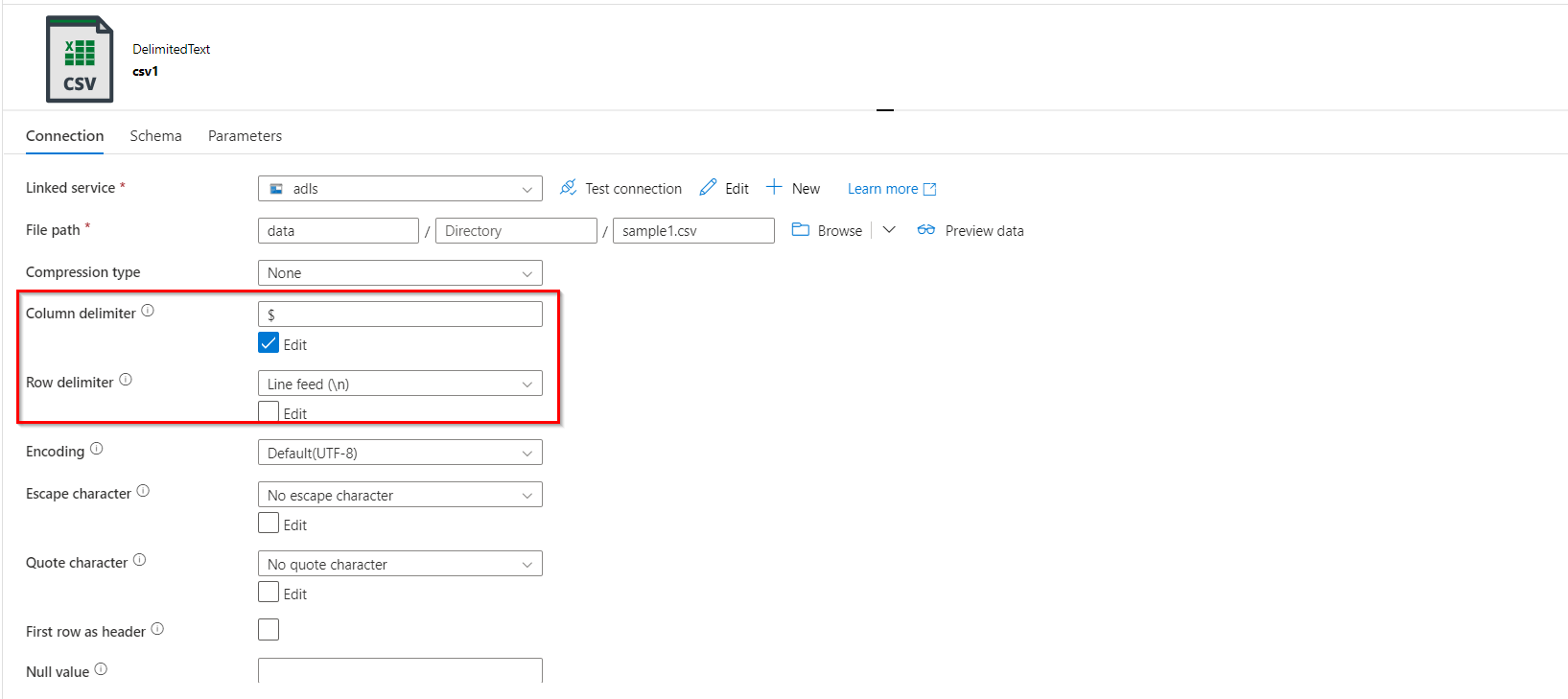

- First create a source dataset using the following configurations. This allows us to read entire row as a single column value (string):

- Import the projection and name the column as

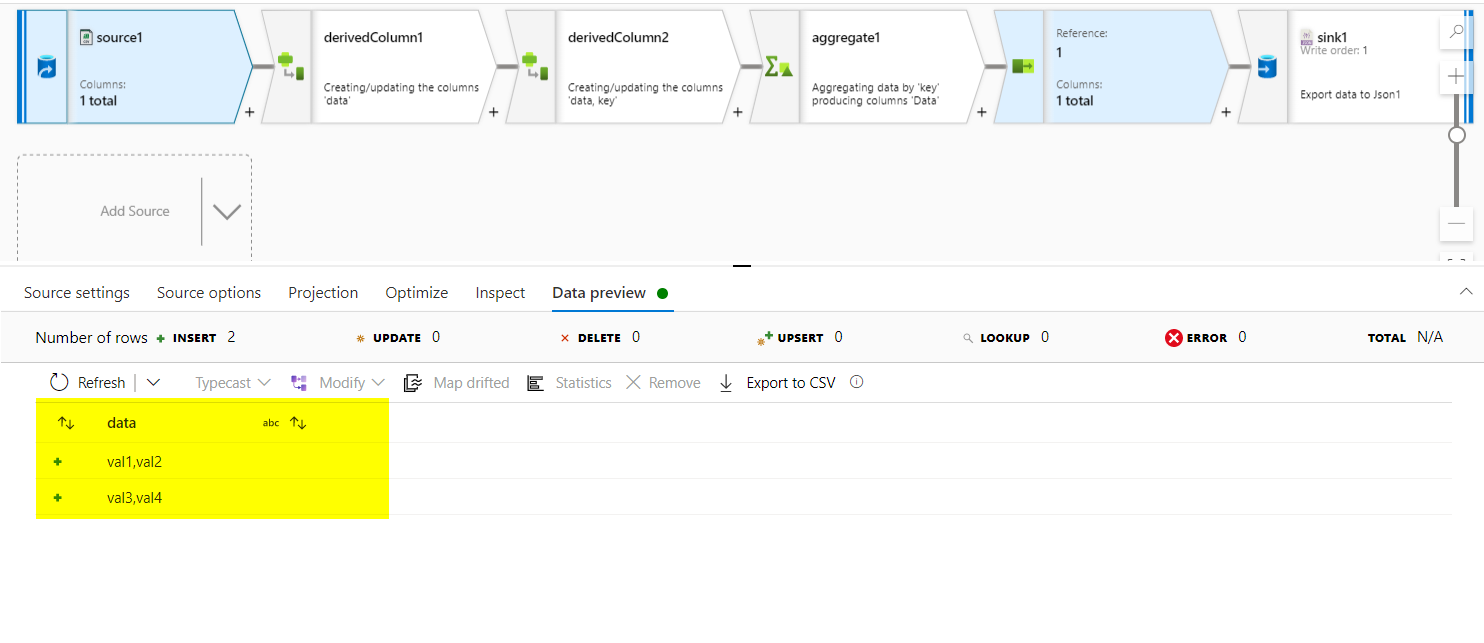

data. The following is how the data preview looks like:

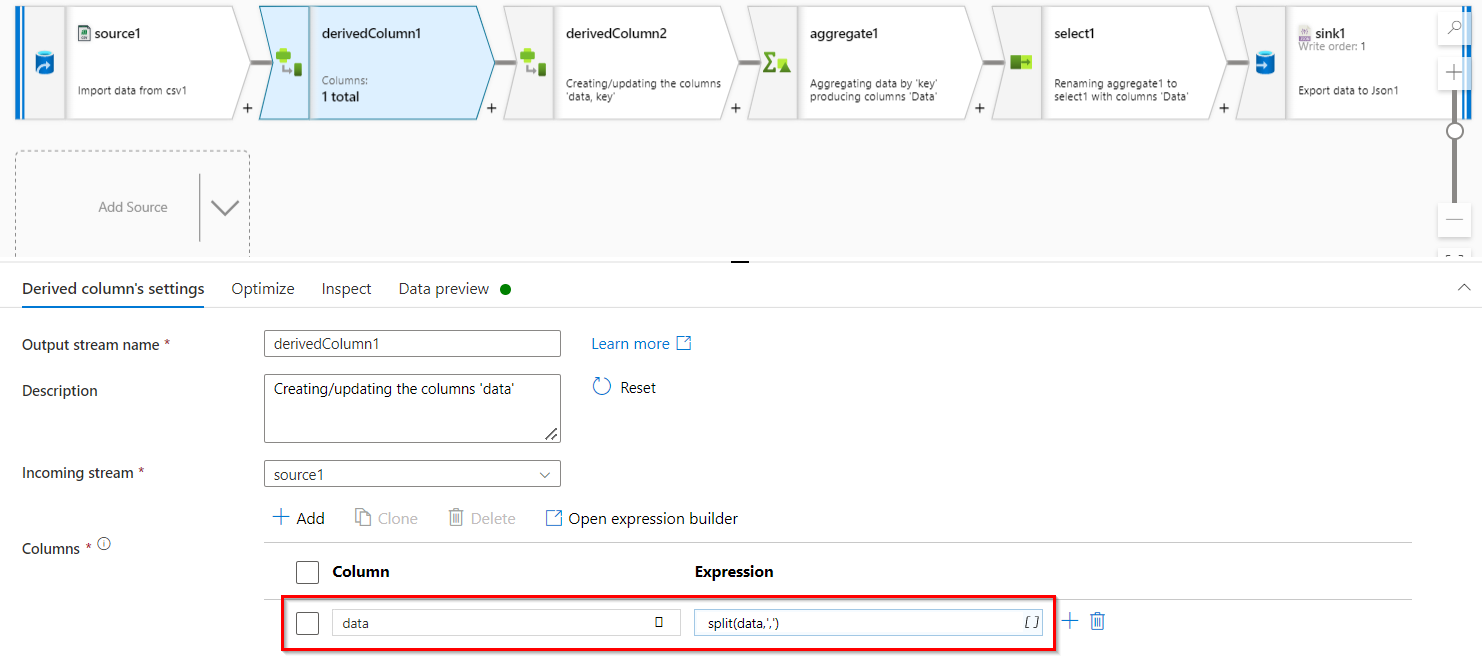

- Now, first split these column values using

splitfunction inderived columntransformations. I am replacing the same column usingsplit(data,',').

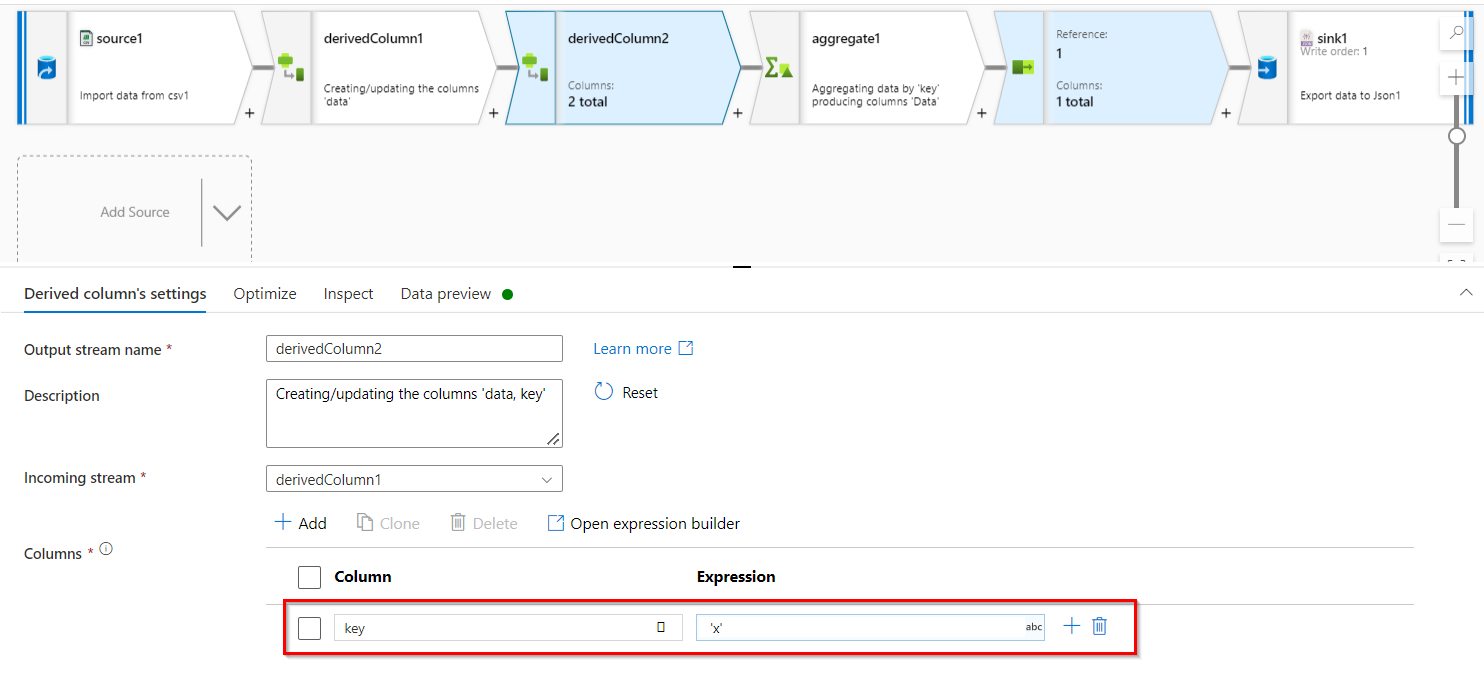

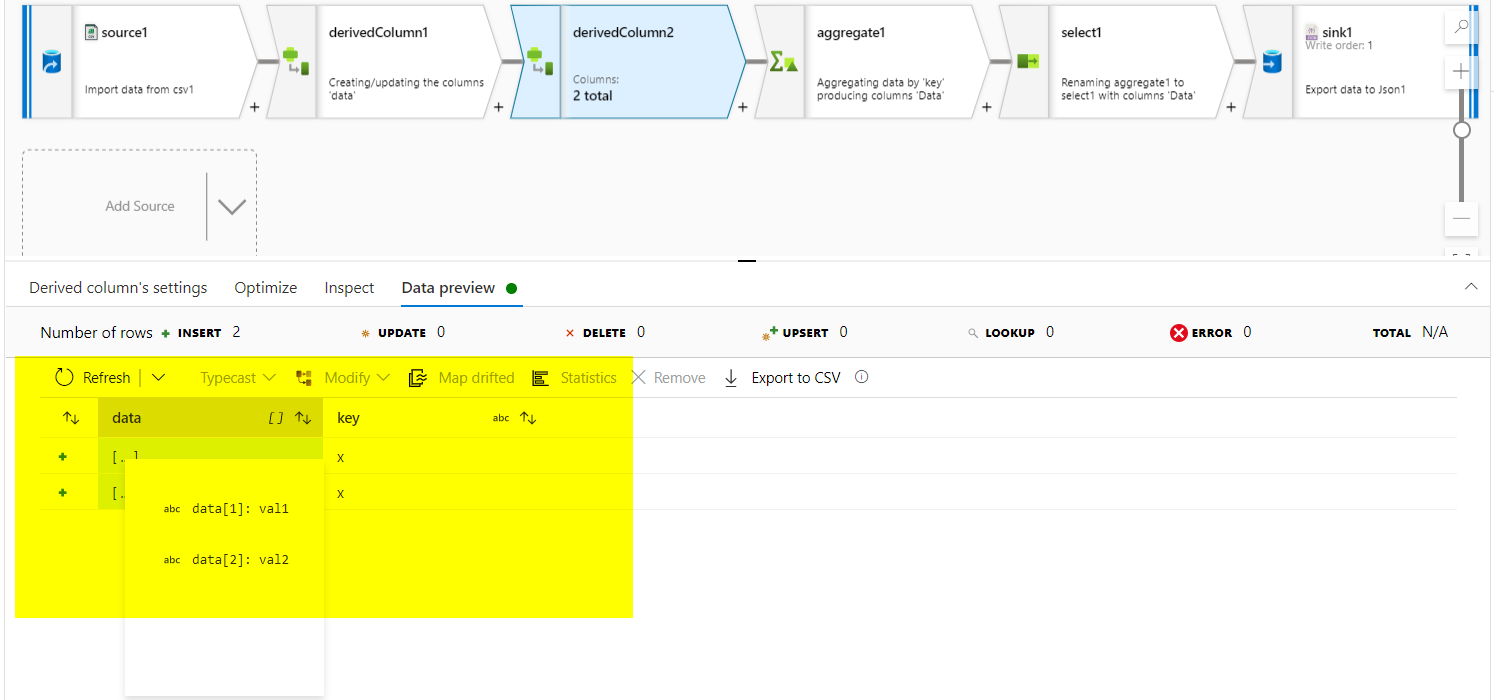

- Then, I have added a

keycolumn with a constant value 'x' so that I can group all rows and covert the grouped data into array of arrays.

- The data would look like this after the above step:

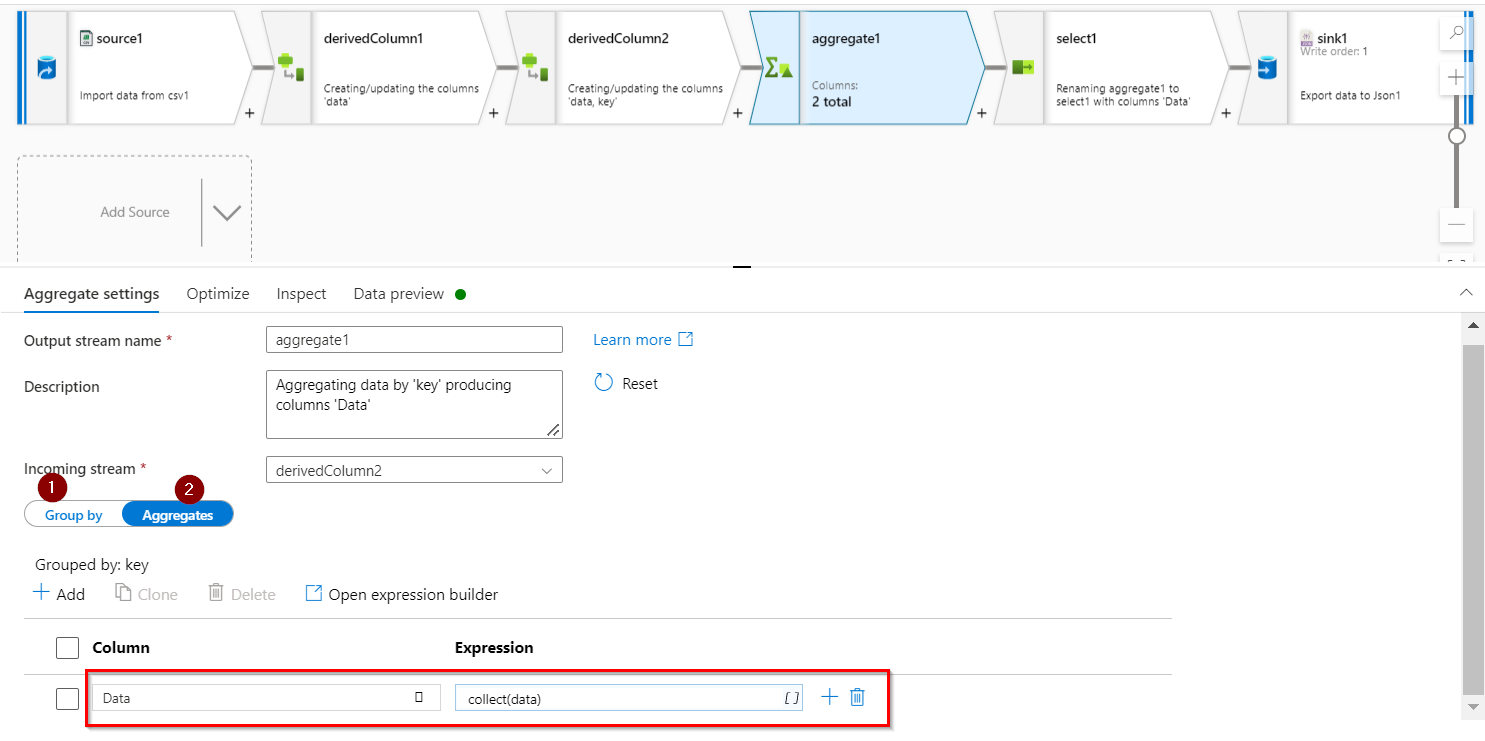

- Use

aggregatetransformation to group by the above created column and usecollectaggregate function to create array of arrays (collect(data)).

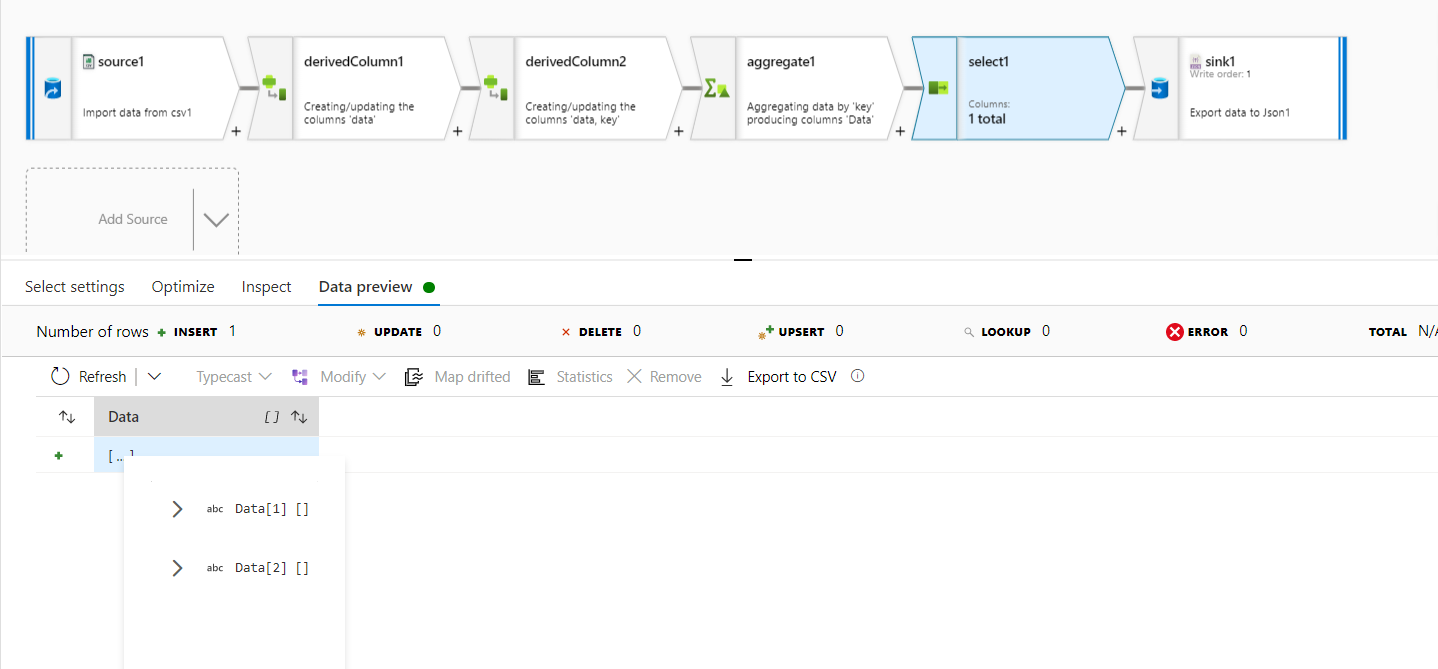

- Use

selecttransformation to select only the above created columnData.

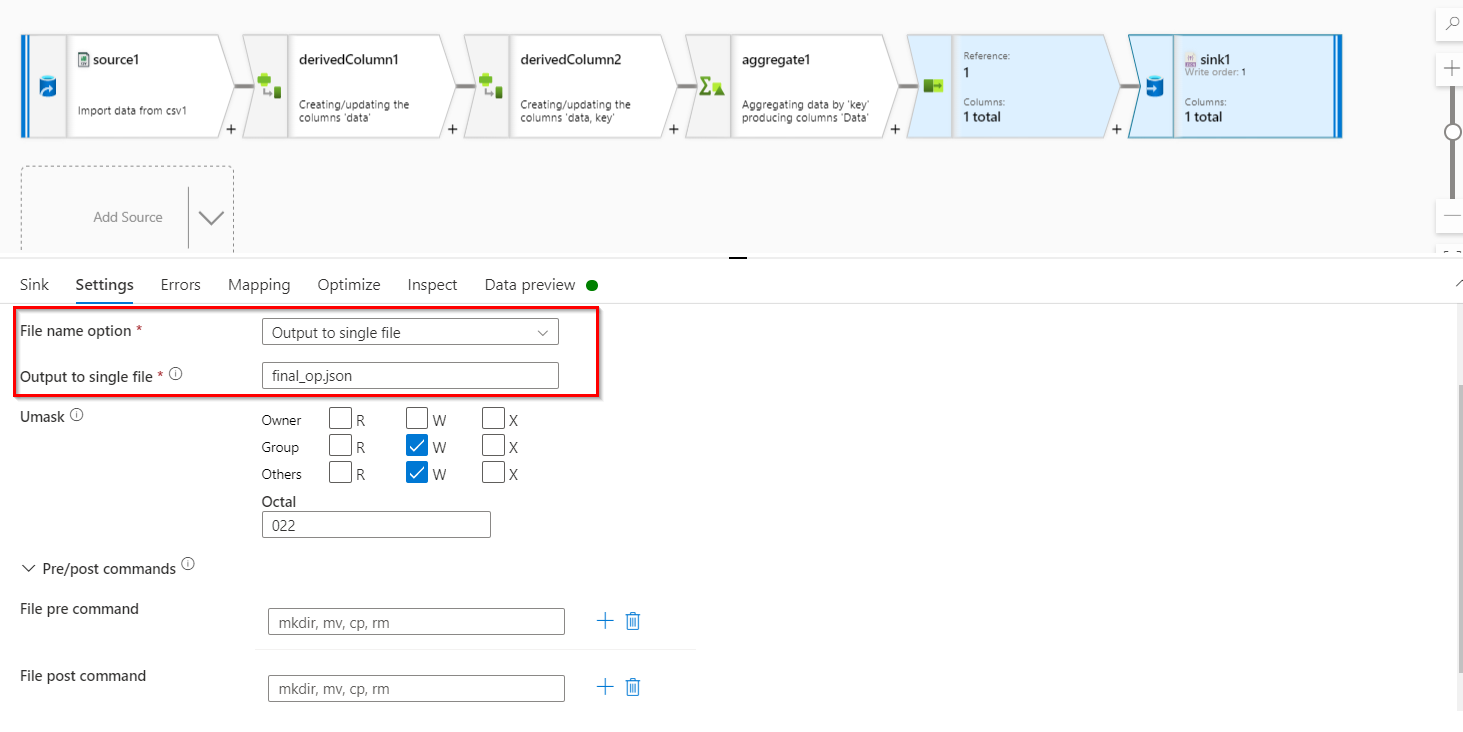

- Finally, in the sink, select your destination and create a sink JSON dataset. Choose

output to single filein settings and give a file name.

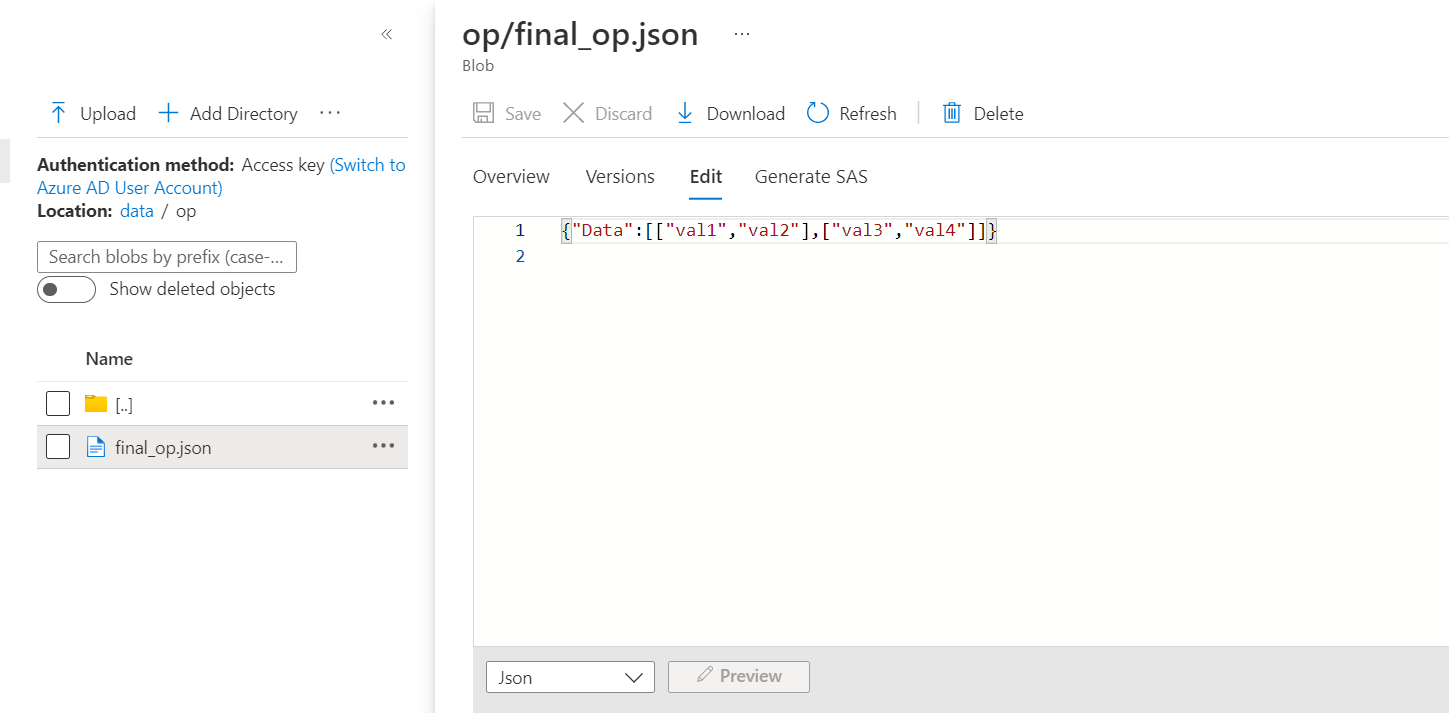

- Create dataflow pipeline activity and run the above dataflow. The file will be created, and it looks like the following: