I have a NumPy array with over 1 million rows (1,125,000 to be exact) and 150 columns. I want to extract a certain portion of this array for all of its columns, but only for certain rows. For example, the first subset array (df) should have all the columns of the original big array, but only the rows that fall in `[:, 56:67, 56:67]` when each column is reshaped into a 3D array of shape (50, 150, 150). That is, keep all 50 entries in the first dimension, but only indices 56 to 66 in the second and third dimensions, which gives 50 × 11 × 11 = 6050 rows. Is there a pythonic way to do this, given the size of my original data (1125000, 150)?
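Python slices are half-open, so `56:67` keeps the 11 indices 56 through 66. A quick check of the sizes involved, using only the shapes stated in the question (the `np.empty` cube is just an uninitialized stand-in used to read off the resulting shape):

```python
import numpy as np

n0, n1, n2 = 50, 150, 150          # cube shape each column is reshaped into
window = slice(56, 67)             # half-open: keeps indices 56, 57, ..., 66

kept = len(range(n1)[window])      # how many indices the slice keeps
print(kept)                        # 11
print(n0 * n1 * n2)                # 1125000 rows in the full array
print(n0 * kept * kept)            # 6050 rows in each subset

# The same count straight from NumPy, on an uninitialized stand-in cube.
sub_shape = np.empty((n0, n1, n2))[:, window, window].shape
print(sub_shape)                   # (50, 11, 11)
```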

Below is my sample code for a 3-column array:

```python
import numpy as np
import pandas as pd

data_1 = np.random.random((50, 150, 150))
data_2 = np.random.random((50, 150, 150))
data_3 = np.random.random((50, 150, 150))

# Stack on a new last axis so that column k, reshaped to (50, 150, 150), is data_k
big_array = np.stack((data_1, data_2, data_3), axis=-1).reshape(1125000, 3)

rows = []
for i in range(big_array.shape[1]):
    df_1 = big_array[:, i]
    print(df_1.shape)                         # (1125000,)
    df_1 = df_1.reshape(50, 150, 150)         # view the column as a 3D cube
    df_1 = df_1[:, 56:67, 56:67].reshape(-1)  # keep the 11 x 11 window
    print(df_1.shape)                         # (6050,)
    rows.append(df_1)
    print(i)

# DataFrame.append was removed in pandas 2.0, so build the frame once instead.
# One row per original column, so df.T has shape (6050, 3).
df = pd.DataFrame(rows)
df.T
```

Answer:

IIUC, you can reshape `big_array` to shape (50, 150, 150, 3), so that the last dimension is the number of columns in `big_array` (150 in your real case; you can use `big_array.shape[1]` instead of hard-coding it). Then select the data you want with the same slicing you use in the loop, but take everything in the last dimension. Finally, reshape again to get 6050 rows and 3 (or `big_array.shape[1]`) columns. So something like:

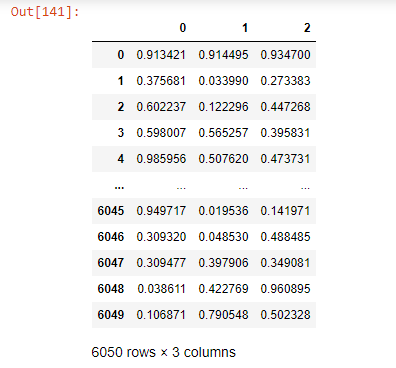

```python
n_cols = big_array.shape[1]  # 3 here, 150 for the real data
res = pd.DataFrame(
    big_array.reshape(50, 150, 150, n_cols)[:, 56:67, 56:67, :]
    .reshape(-1, n_cols)
)
```
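Why one reshape lines up with the per-column reshape in the loop: `reshape` works in C (row-major) order by default, so the value in row `r`, column `i` of an array with `C` columns sits at flat position `r * C + i`. Reshaping to `(50, 150, 150, C)` splits `r` into the three cube indices and leaves `i` as the last axis. A small-scale check, with made-up sizes (not from the question) so it runs instantly:

```python
import numpy as np

# Made-up small sizes (not from the question) so the check runs instantly.
d0, d1, d2, n_cols = 2, 4, 5, 3
small = np.arange(d0 * d1 * d2 * n_cols, dtype=float).reshape(-1, n_cols)

# One reshape for all columns: the column index becomes the last axis.
cube = small.reshape(d0, d1, d2, n_cols)

# Reshaping column i on its own gives the same cube as cube[..., i].
for i in range(n_cols):
    assert np.array_equal(small[:, i].reshape(d0, d1, d2), cube[..., i])

# The reshape of a contiguous array is a view, so nothing is copied yet.
print(np.shares_memory(small, cube))      # True

# Slicing a window and flattening it copies only the selected cells.
sub = cube[:, 1:3, 1:4, :].reshape(-1, n_cols)
print(sub.shape)                          # (12, 3)
print(np.shares_memory(sub, small))       # False
```

For the real (1125000, 150) array this means the only allocation is the 6050 × 150 window itself.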

I tested with my random numbers, and `np.allclose(df.T, res)` returns `True` (where `df` was created with your loop method).
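If you need other windows, or want to know which original rows were kept, the one-liner can be wrapped in a small helper. `extract_window` is a hypothetical name, and the row-index part (built with `np.meshgrid` and `np.ravel_multi_index`) is an addition that is not part of the answer above. A sketch, assuming each column is laid out as a `(d0, d1, d2)` cube in C order, as in the question:

```python
import numpy as np
import pandas as pd


def extract_window(arr, cube_shape, rows, cols):
    """Hypothetical helper: keep the (rows, cols) window of every column.

    `arr` has shape (d0 * d1 * d2, n_cols) and each column is viewed as a
    cube of shape `cube_shape` = (d0, d1, d2). `rows` and `cols` are slices
    on the second and third cube axes. The result's index holds the
    original row numbers in `arr`.
    """
    d0, d1, d2 = cube_shape
    n_cols = arr.shape[1]
    # Column index as the last axis, then slice the window on axes 1 and 2.
    window = arr.reshape(d0, d1, d2, n_cols)[:, rows, cols, :]
    values = window.reshape(-1, n_cols)  # copies only the selected window
    # Original row numbers of the kept cells, in the same C order as `values`.
    a, b, c = np.meshgrid(
        np.arange(d0), np.arange(d1)[rows], np.arange(d2)[cols], indexing="ij"
    )
    index = np.ravel_multi_index((a.ravel(), b.ravel(), c.ravel()), cube_shape)
    return pd.DataFrame(values, index=index)


rng = np.random.default_rng(0)
data = rng.random((50 * 150 * 150, 3))
res = extract_window(data, (50, 150, 150), slice(56, 67), slice(56, 67))
print(res.shape)  # (6050, 3)

# Same values as the per-column approach from the question.
loop = np.column_stack(
    [data[:, i].reshape(50, 150, 150)[:, 56:67, 56:67].reshape(-1) for i in range(3)]
)
print(np.allclose(res.to_numpy(), loop))  # True

# Each index entry points back at its source row.
print(np.array_equal(data[res.index.to_numpy()], res.to_numpy()))  # True
```

Keeping the original row numbers in the index makes it easy to map the subset back onto the full array later, at the cost of one small integer array per window.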