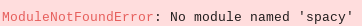

I am attempting to perform entity extraction using a custom spaCy NER model. The extraction will run over a Spark DataFrame, and everything is being orchestrated in a

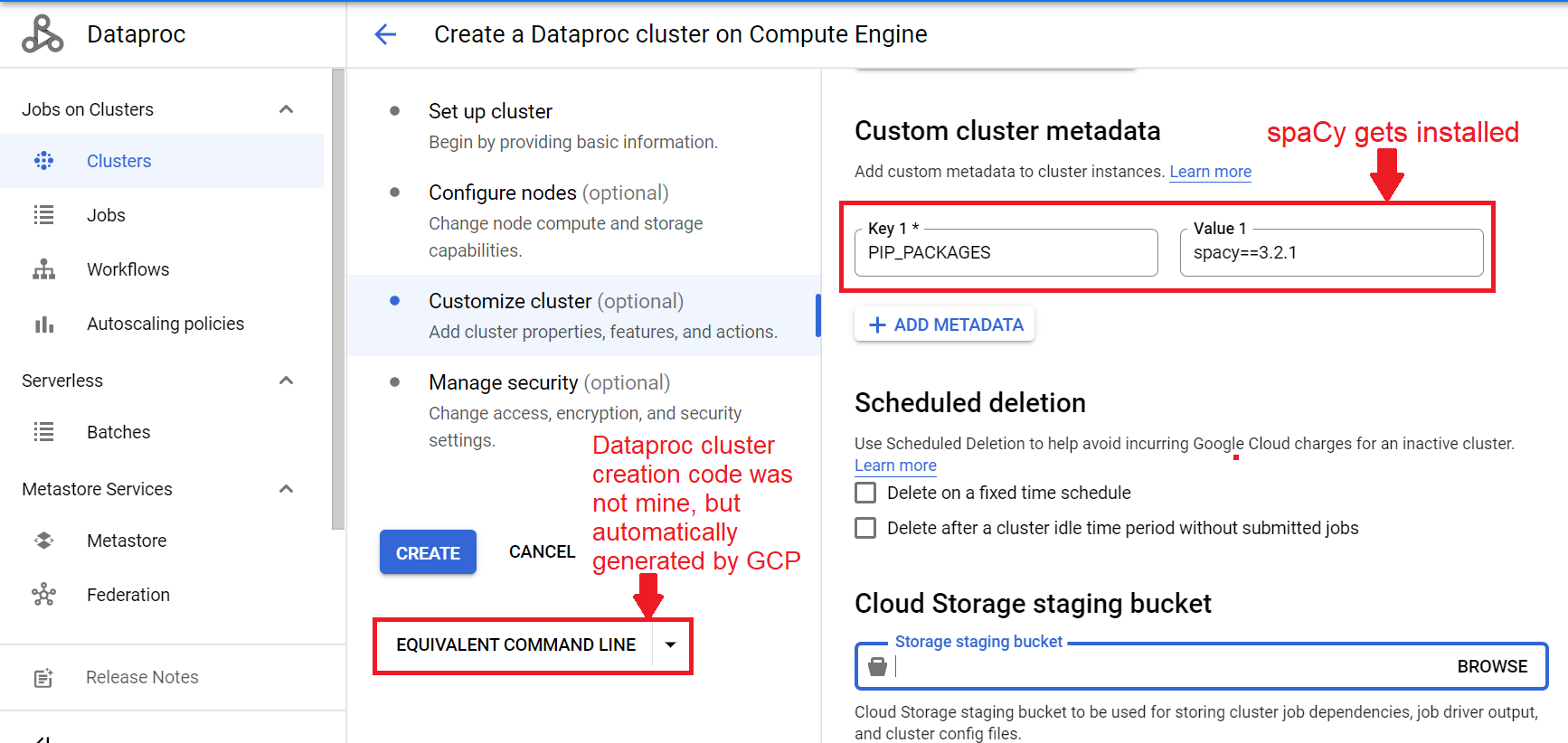

In my case, the Dataproc cluster creation code (automatically generated by GCP) is:

gcloud dataproc clusters create my-cluster \

--enable-component-gateway \

--region us-central1 \

--zone us-central1-c \

--master-machine-type n1-standard-4 \

--master-boot-disk-size 500 \

--num-workers 2 \

--worker-machine-type n1-standard-4 \

--worker-boot-disk-size 500 \

--image-version 2.0-debian10 \

--optional-components JUPYTER \

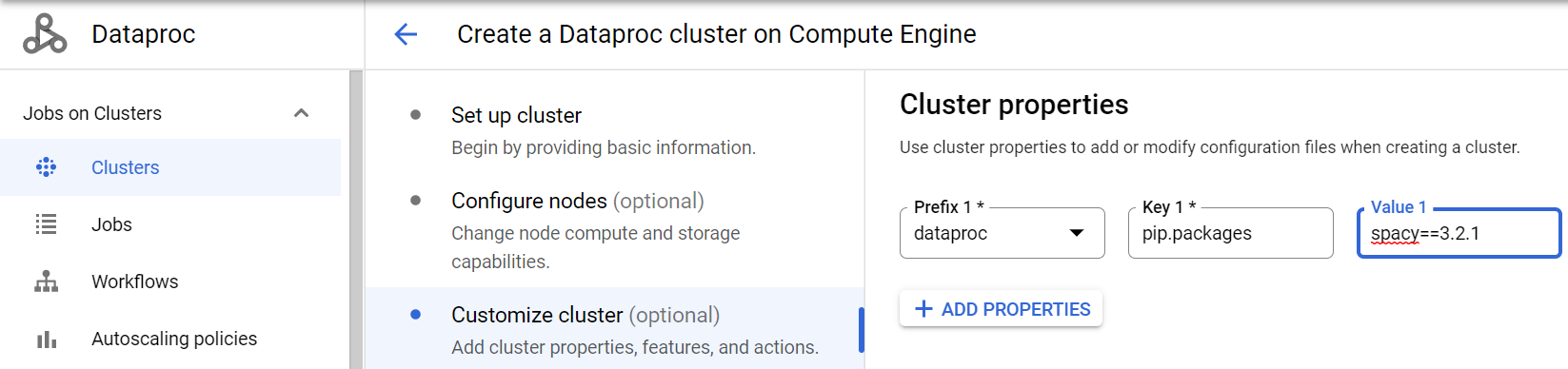

--metadata PIP_PACKAGES=spacy==3.2.1 \

--project hidden-project-name
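As far as I know, the `PIP_PACKAGES` metadata key is only read by Dataproc's `pip-install` initialization action, so without `--initialization-actions` it may not install anything. Likewise, `pip install` or `!python3 -m spacy download` run from the notebook only affects the master node, not the workers where executors run. A quick way to see where spaCy and the model are actually importable is to probe the driver and the executors. A minimal sketch, assuming the SparkSession the notebook provides is named `spark`; `module_status` and `cluster_module_status` are hypothetical helpers:

```python
import importlib.util
import socket

# Packages to look for; "en_core_web_sm" is the pipeline named in this thread.
# Add the package name of your custom NER model if it is installed as a package.
DEFAULT_MODULES = ("spacy", "en_core_web_sm")


def module_status(modules=DEFAULT_MODULES):
    """Return (hostname, {module: importable?}) for the current Python process."""
    found = {name: importlib.util.find_spec(name) is not None for name in modules}
    return socket.gethostname(), found


def cluster_module_status(spark, modules=DEFAULT_MODULES, num_partitions=16):
    """Run module_status on the driver and inside executor tasks.

    Spreading many small partitions across the cluster makes it likely
    (not guaranteed) that every worker node runs at least one task.
    """
    def per_partition(_rows):
        yield module_status(modules)

    rdd = spark.sparkContext.parallelize(range(num_partitions), num_partitions)
    executors = {host: found for host, found in rdd.mapPartitions(per_partition).collect()}
    return {"driver": module_status(modules), "executors": executors}
```

Calling `cluster_module_status(spark)` returns one entry per executor host; any `False` there means that node still needs the package.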

Notice how spaCy is installed in the metadata (following

I used this pip command and it worked:

```shell
pip install -U spacy
```

But after installing, I got a `JAVA_HOME is not set` error, so I used these commands:

```shell
conda install openjdk
conda install -c conda-forge findspark
```
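If `JAVA_HOME` is still unset after installing a JDK, it can also be set from Python before Spark starts. A minimal sketch, assuming the JDK's `java` binary is on `PATH` and uses the standard `$JAVA_HOME/bin/java` layout; `guess_java_home` and `ensure_java_home` are hypothetical helpers, not part of findspark:

```python
import os
import shutil


def guess_java_home(java_binary=None):
    """Guess JAVA_HOME from a `java` executable (default: the first one on PATH).

    Assumes the usual JDK layout $JAVA_HOME/bin/java; returns None if no java is found.
    """
    path = java_binary or shutil.which("java")
    if path is None:
        return None
    real = os.path.realpath(path)  # follow symlinks such as /usr/bin/java -> .../bin/java
    return os.path.dirname(os.path.dirname(real))


def ensure_java_home():
    """Set JAVA_HOME for this process if it is unset and a java binary can be found."""
    if not os.environ.get("JAVA_HOME"):
        home = guess_java_home()
        if home:
            os.environ["JAVA_HOME"] = home
    return os.environ.get("JAVA_HOME")
```

In a notebook, the order would be `ensure_java_home()`, then `import findspark` and `findspark.init()`, then building the SparkSession, since the JVM is launched when the session starts.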

```
!python3 -m spacy download en_core_web_sm
```

I included this in case you run into the same issue.

Note: I used `spacy.load("en_core_web_sm")`.
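For the extraction itself, one common pattern (not necessarily the code from the original post, which is not shown here) is to load the model once per executor process and run `nlp.pipe` over each partition. A sketch, assuming a DataFrame `df` with a string column `text` and that spaCy plus the model are installed on every worker; `load_model`, `extract_entities` and `annotate_partition` are hypothetical names:

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def load_model(name_or_path):
    """Load a spaCy pipeline once per Python worker process and reuse it."""
    import spacy  # imported lazily so these helpers can be defined where spaCy is absent
    return spacy.load(name_or_path)  # accepts a package name or a model directory


def extract_entities(texts, nlp):
    """Return, for each text, a list of (entity text, entity label) pairs."""
    return [[(ent.text, ent.label_) for ent in doc.ents] for doc in nlp.pipe(texts)]


def annotate_partition(rows, model="en_core_web_sm", text_col="text", nlp=None):
    """mapPartitions function: yields (text, entities) for each input row."""
    if nlp is None:
        nlp = load_model(model)
    rows = list(rows)
    texts = [row[text_col] or "" for row in rows]  # treat null text as empty
    for row, ents in zip(rows, extract_entities(texts, nlp)):
        yield (row[text_col], ents)
```

Usage would be something like `df.rdd.mapPartitions(annotate_partition).toDF("text string, entities array<struct<text:string,label:string>>")`. For a custom NER model, wrapping the function with `functools.partial(annotate_partition, model=<path to the model directory>)` should behave the same, provided that directory exists on every worker. Batching through `nlp.pipe` per partition avoids reloading the model for every row, which is the main cost of a naive per-row UDF.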


I managed to solve this issue by combining two pieces of information:

- "Configure Dataproc Python environment", "Dataproc image version 2.0" (as that is the version I am using): available

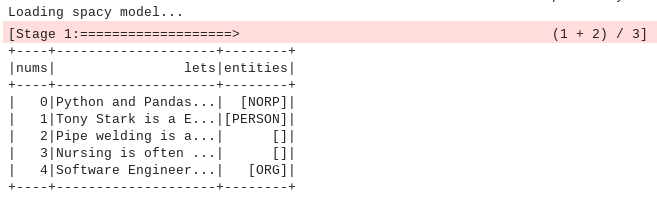

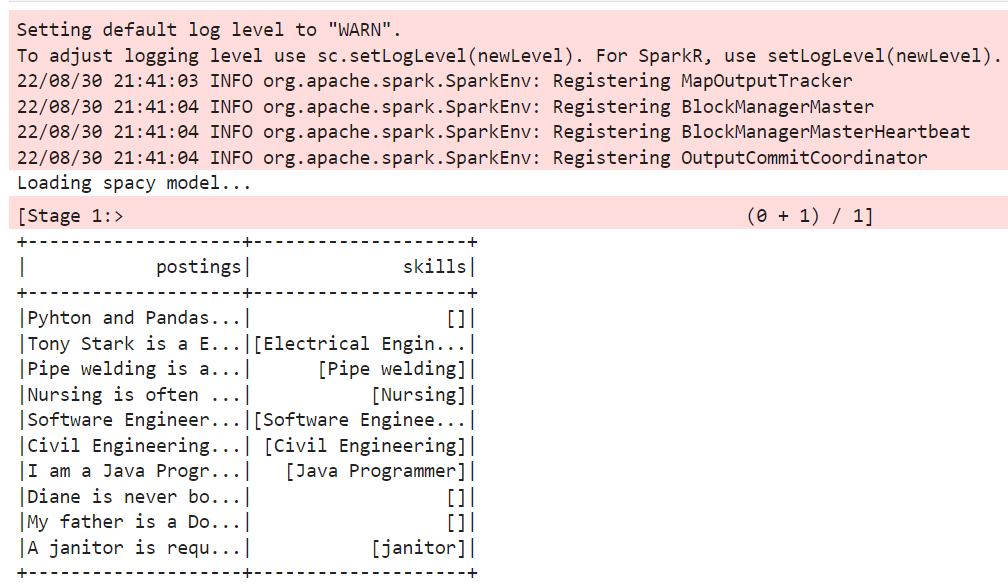
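My understanding is that the Dataproc image 2.0+ Python environment documentation describes the `dataproc:pip.packages` and `dataproc:conda.packages` cluster properties as the way to install extra packages at cluster creation. The screenshots probably show the exact settings used here, so treat this as a sketch rather than the steps taken. Because package lists are comma-separated, which clashes with gcloud's default property delimiter, the value uses gcloud's `^#^` escape to switch the delimiter to `#`. `package_properties` and `create_cluster_command` are hypothetical helpers; the cluster name, region and image mirror the command in the question:

```python
import shlex


def package_properties(pip_packages=(), conda_packages=()):
    """Build the value of gcloud's --properties flag for Dataproc 2.0+ packages.

    The leading ^#^ makes '#' the separator between properties (see
    `gcloud topic escaping`), so commas can stay inside each package list.
    """
    props = []
    if conda_packages:
        props.append("dataproc:conda.packages=" + ",".join(conda_packages))
    if pip_packages:
        props.append("dataproc:pip.packages=" + ",".join(pip_packages))
    return "^#^" + "#".join(props) if props else None


def create_cluster_command(name, region, pip_packages=(), conda_packages=()):
    """Return a shell-quoted gcloud command for a Jupyter-enabled 2.0 cluster."""
    args = ["gcloud", "dataproc", "clusters", "create", name,
            "--region", region,
            "--image-version", "2.0-debian10",
            "--optional-components", "JUPYTER",
            "--enable-component-gateway"]
    props = package_properties(pip_packages, conda_packages)
    if props:
        args += ["--properties", props]
    return shlex.join(args)  # quotes the ^#^ value so the shell passes it through intact
```

`print(create_cluster_command("my-cluster", "us-central1", ["spacy==3.2.1"]))` prints a command that can be pasted into Cloud Shell. Installing at creation time means every node, not just the master, gets the package.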

Once the cluster was created, I ran the code from my original post (with no modifications), with the following result:

That solves my original question. I plan to apply this solution to a larger dataset, but whatever happens there is a subject for a different thread.