I am attempting to scrape the artists' Spotify streaming rankings from Kworb.net into a CSV file and I've nearly succeeded except I'm running into a weird issue.

The code below successfully scrapes all 10,000 of the listed artists into the console:

import requests
from bs4 import BeautifulSoup
import csv

URL = "https://kworb.net/spotify/artists.html"
result = requests.get(URL)
src = result.content
soup = BeautifulSoup(src, 'html.parser')

table = soup.find('table', id="spotifyartistindex")
header_tags = table.find_all('th')
headers = [header.text.strip() for header in header_tags]

rows = []
data_rows = table.find_all('tr')

for row in data_rows:
    value = row.find_all('td')
    beautified_value = [dp.text.strip() for dp in value]
    print(beautified_value)

    if len(beautified_value) == 0:
        continue

    rows.append(beautified_value)

The issue arises when I use the following code to save the output to a CSV file:

with open('artist_rankings.csv', 'w', newline="") as output:
    writer = csv.writer(output)
    writer.writerow(headers)
    writer.writerows(rows)

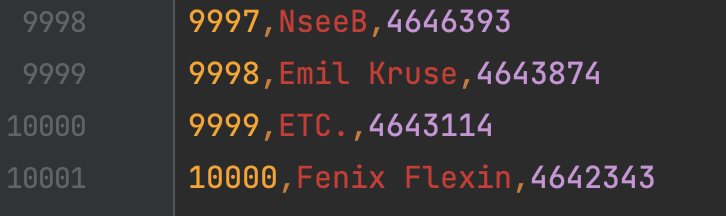

For whatever reason, only 738 of the artists are saved to the file. Does anyone know what could be causing this?

Thanks so much for any help!

CodePudding user response:

As an alternative approach, you might want to make your life easier next time and use pandas.

Here's how:

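from io import StringIO

import requests
import pandas as pd

source = requests.get("https://kworb.net/spotify/artists.html")

# Wrap the HTML in StringIO: recent pandas versions warn when read_html
# is given a literal HTML string instead of a file-like object.
df = pd.concat(pd.read_html(StringIO(source.text), flavor="bs4"))
df.to_csv("artists.csv", index=False)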

This outputs a .csv file with 10,000 artists.
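If you want to confirm that the file really contains every artist, counting its rows is a quick check. The sketch below is a minimal example using only the standard library. The helper name count_data_rows is hypothetical, and the small sample file stands in for artists.csv so the snippet runs without network access.

```python
import csv
import os
import tempfile


def count_data_rows(path, encoding="utf-8"):
    """Return the number of rows in a CSV file, not counting the header."""
    with open(path, newline="", encoding=encoding) as source:
        reader = csv.reader(source)
        next(reader, None)  # skip the header row if the file is not empty
        return sum(1 for _ in reader)


# Hypothetical stand-in for artists.csv: one header row plus three artists.
sample_path = os.path.join(tempfile.mkdtemp(), "sample_artists.csv")
with open(sample_path, "w", newline="", encoding="utf-8") as output:
    writer = csv.writer(output)
    writer.writerow(["Pos", "Artist"])
    writer.writerows([["1", "Artist A"], ["2", "Artist B"], ["3", "Artist C"]])

sample_count = count_data_rows(sample_path)
print(sample_count)
```

Pointing count_data_rows at the real output file gives the number of saved artists, assuming the header occupies a single line.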

CodePudding user response:

The print call is not the problem: it only displays each row, and your loop already appends every non-empty row to the rows list. Since all 10,000 artists appear in the console, rows is almost certainly complete, which means the data is being lost while the file is written.

A likely cause, though I can't confirm it without seeing the error in your screenshot, is the file encoding. When open() is called without an encoding argument, Python uses the platform default (often cp1252 on Windows). An artist name containing a character that encoding can't represent raises a UnicodeEncodeError partway through, leaving only the rows written so far. Passing encoding="utf-8" to open() avoids that.

Here is how you can modify your code:

import requests
from bs4 import BeautifulSoup
import csv

URL = "https://kworb.net/spotify/artists.html"
result = requests.get(URL)
src = result.content
soup = BeautifulSoup(src, 'html.parser')

table = soup.find('table', id="spotifyartistindex")
header_tags = table.find_all('th')
headers = [header.text.strip() for header in header_tags]

rows = []
data_rows = table.find_all('tr')

for row in data_rows:
    value = row.find_all('td')
    beautified_value = [dp.text.strip() for dp in value]

    # Append the data to the rows list, skipping rows without <td> cells
    # (such as the header row)
    if beautified_value:
        rows.append(beautified_value)

# Write the data to the CSV file with an explicit encoding
with open('artist_rankings.csv', 'w', newline="", encoding="utf-8") as output:
    writer = csv.writer(output)
    writer.writerow(headers)
    writer.writerows(rows)

In this modified code, the rows are collected the same way as before, and the only functional change is the explicit encoding. If the encoding was the cause, all of the data will be saved to the file, and not just the first 738 rows.
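One way a CSV can end up truncated, offered here as an assumption since the error in the screenshot isn't shown, is an encoding failure partway through the write. The sketch below reproduces that behaviour offline with the standard library only. The sample rows and the write_rows helper are hypothetical, and cp1252 is used as a stand-in for a platform default that can't represent every artist name.

```python
import csv
import os
import tempfile

# Hypothetical sample rows; the second name uses non-Latin characters.
SAMPLE_ROWS = [
    ["1", "Drake"],
    ["2", "방탄소년단"],
    ["3", "Taylor Swift"],
]


def write_rows(path, rows, encoding):
    """Write rows one at a time; return how many were written before an encoding error."""
    written = 0
    with open(path, "w", newline="", encoding=encoding) as output:
        writer = csv.writer(output)
        for row in rows:
            try:
                writer.writerow(row)
            except UnicodeEncodeError:
                # The encoding cannot represent this row, so stop here,
                # just as an uncaught exception would stop the script.
                break
            written += 1
    return written


tmp_dir = tempfile.mkdtemp()
narrow_written = write_rows(os.path.join(tmp_dir, "narrow.csv"), SAMPLE_ROWS, "cp1252")
utf8_written = write_rows(os.path.join(tmp_dir, "utf8.csv"), SAMPLE_ROWS, "utf-8")

print("rows written with cp1252:", narrow_written)  # 1
print("rows written with utf-8:", utf8_written)     # 3
```

With cp1252 the write stops at the first name it can't encode, while UTF-8 handles every row. If your traceback mentions UnicodeEncodeError, this is the same failure at row 739.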

Note that you may also want to add some error handling to your code in case the request to the URL fails, or if the HTML of the page is not in the expected format. This will help prevent your code from crashing when it encounters unexpected data. You can do this by adding a try-except block to your code, like this:

import requests
from bs4 import BeautifulSoup
import csv

URL = "https://kworb.net/spotify/artists.html"

try:
    result = requests.get(URL)
    # Raise an HTTPError for 4xx/5xx responses instead of parsing an error page
    result.raise_for_status()
    src = result.content
    soup = BeautifulSoup(src, 'html.parser')

    table = soup.find('table', id="spotifyartistindex")
    if table is None:
        raise Exception("Could not find table with id 'spotifyartistindex'")

    header_tags = table.find_all('th')
    headers = [header.text.strip() for header in header_tags]

    rows = []
    data_rows = table.find_all('tr')

    for row in data_rows:
        value = row.find_all('td')
        beautified_value = [dp.text.strip() for dp in value]

        # Append the data to the rows list, skipping rows without <td> cells
        if beautified_value:
            rows.append(beautified_value)

    # Write the data to the CSV file (explicit UTF-8 for non-Latin names)
    with open('artist_rankings.csv', 'w', newline="", encoding="utf-8") as output:
        writer = csv.writer(output)