I would like to use the COPY INTO statement to copy a table with it's column names from an external storage.

Would like to achieve it using a generic procedure which can use for different tables.

Here below you find a draft. The input parameters could be temp_file_path, schema, table name and list of column names of destination table.

Variable temp_file_path is location of files in Azure data lake(dl).

The variable on column list is a placeholder. Need to know how we can implement it.

Other suggestions are welcome.

CREATE PROC [copy_into_sql_from_dl_columns]

@temp_file_path [VARCHAR](4096)

, @dest_schema [VARCHAR](255)

, @dest_table [VARCHAR](255)

, @dest_columns [VARCHAR](255)

AS

IF @temp_file_path IS NULL OR @dest_schema IS NULL OR @dest_table IS NULL OR @dest_columns IS NULL

BEGIN

PRINT 'ERROR: You must specify temp_file_path, destination schema, table and column names.'

END

ELSE

BEGIN

DECLARE @dest_temp_table AS VARCHAR(4096)

SET @dest_temp_table = '[' @dest_schema '].[' @dest_table ']'

-- set target column names into a target temp variable @dest_temp_columns_list

DECLARE @copy_into_query AS VARCHAR(8000)

SET @copy_into_query = 'COPY INTO ' @dest_temp_table ' (' @dest_temp_columns ')' ' FROM ''' @temp_file_path ''' WITH (FILE_TYPE = ''parquet'', AUTO_CREATE_TABLE = ''OFF'' ) ';

EXEC (@copy_into_query)

END

GO

Environment is Azure cloud synapse

DB is SQL dedicated pool (Azure Synapse Analytics)

CodePudding user response:

Can you use SELECT INTO?

SELECT *

INTO newtable [IN externaldb]

FROM oldtable

WHERE condition;

CodePudding user response:

Create procedure COPY INTO statement with column names from external storage

I repro'd this and below is the approach.

- Two sample parquet files are taken in azure data lake storage. This is considered as source.

- Similarly, Target tables are created with the same structure as source files in the dedicated SQL pool.

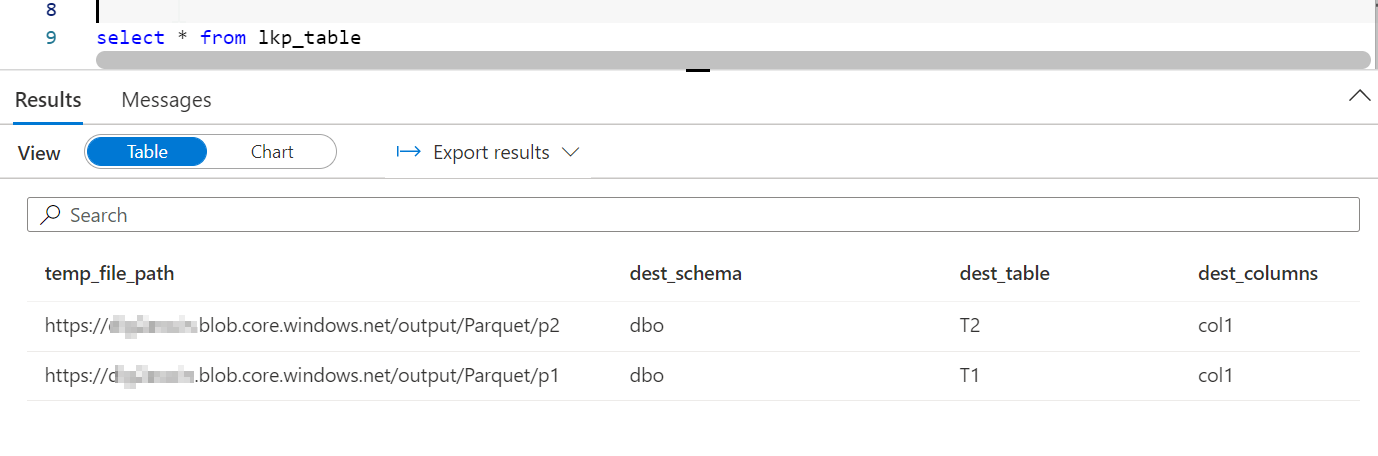

- A lookup table is created in dedicated SQL pool to store the details like File Path of source data lake, Schema and table name of target and column names of target table.

create table lkp_table

(temp_file_path VARCHAR(255),

dest_schema VARCHAR(100),

dest_table varchar(100),

dest_columns varchar(100) )

- Then Stored procedure script is written in dedicated SQL pool to copy data from external storage to dedicated SQL pool. (Few changes are made in the Q's stored procedure script).

Create PROC [copy_into_sql_from_dl_columns]

@temp_file_path [VARCHAR](4096)

, @dest_schema [VARCHAR](255)

, @dest_table [VARCHAR](255)

, @dest_columns [VARCHAR](255)

AS

IF @temp_file_path IS NULL OR @dest_schema IS NULL OR @dest_table IS NULL OR @dest_columns IS NULL

BEGIN

PRINT 'ERROR: You must specify temp_file_path, destination schema, table and column names.'

END

ELSE

BEGIN

DECLARE @dest_temp_table AS VARCHAR(4096)

SET @dest_temp_table = '[' @dest_schema '].[' @dest_table ']'

-- set target column names into a target temp variable @dest_temp_columns_list

DECLARE @copy_into_query AS VARCHAR(8000)

SET @copy_into_query = 'COPY INTO ' @dest_temp_table ' (' @dest_columns ')' ' FROM ''' @temp_file_path ''' WITH (FILE_TYPE = ''parquet'', AUTO_CREATE_TABLE = ''OFF'' ) ';

EXEC (@copy_into_query)

END

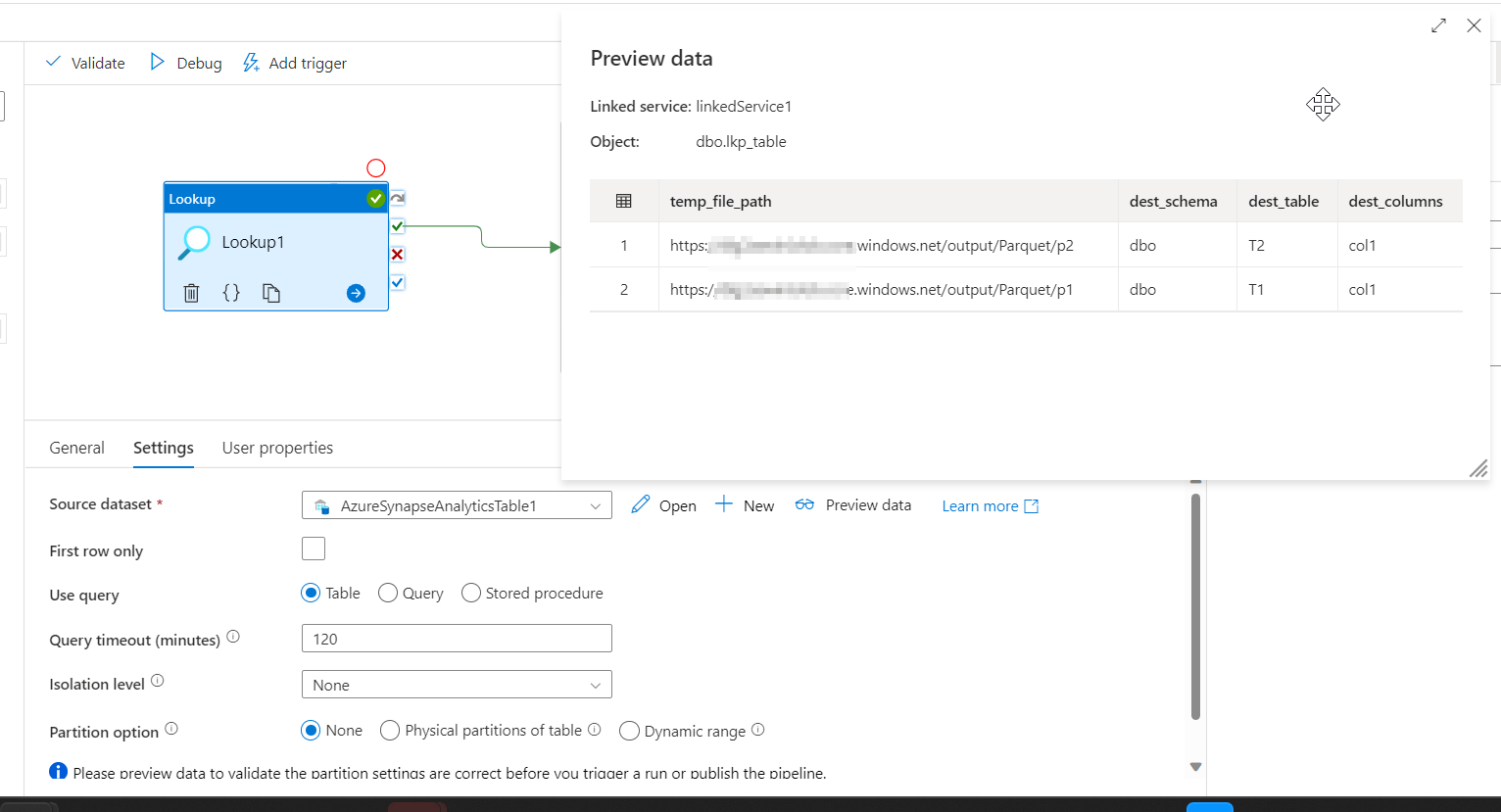

- Then, In Azure Synapse/data factory, Lookup activity is taken and dataset for the lookup activity is referred to lookup table that is created in dedicated SQL pool.

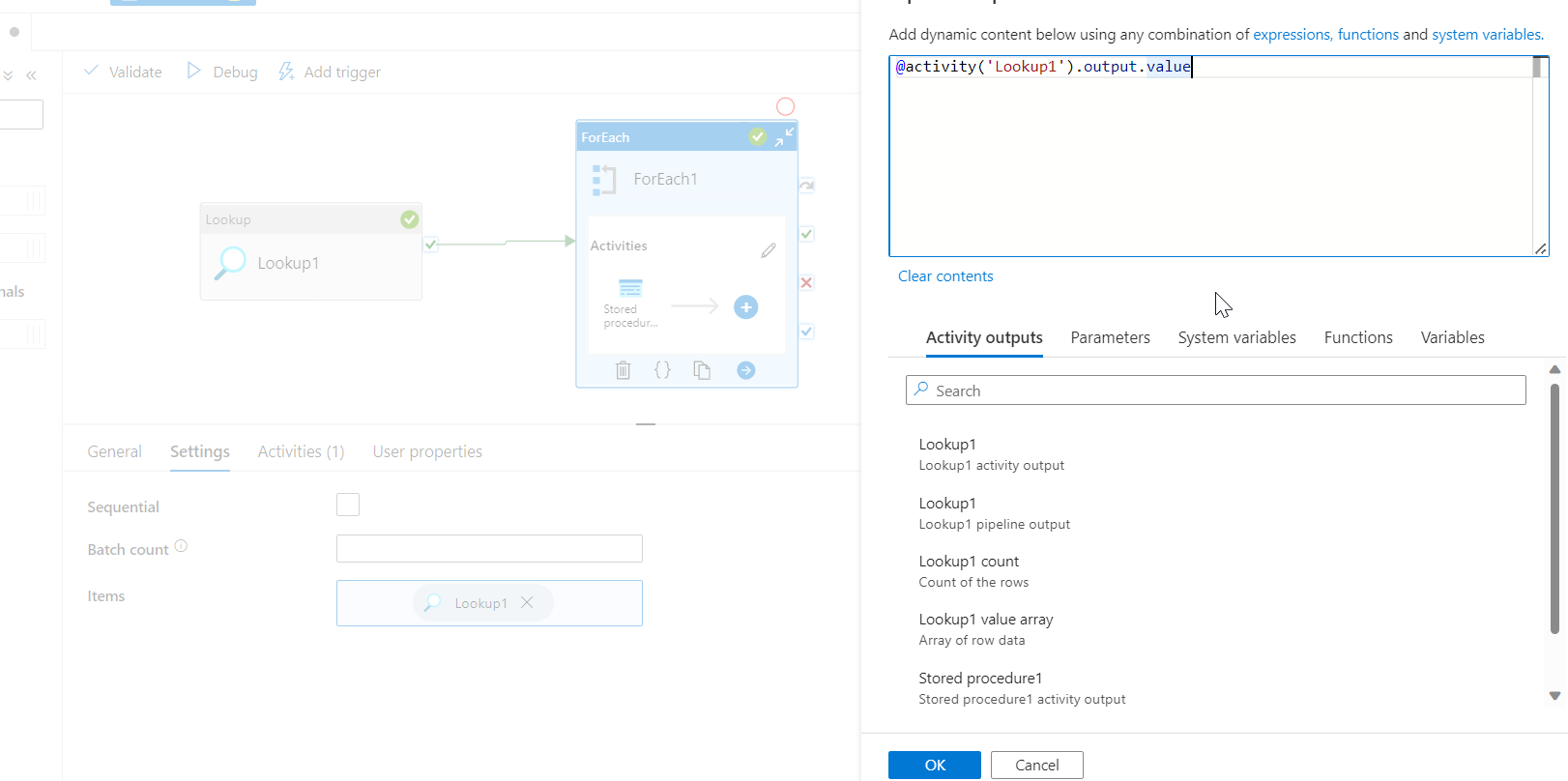

- Then For-each activity is created and in items of for-each activity,

@activity('Lookup1').output.valueis given.

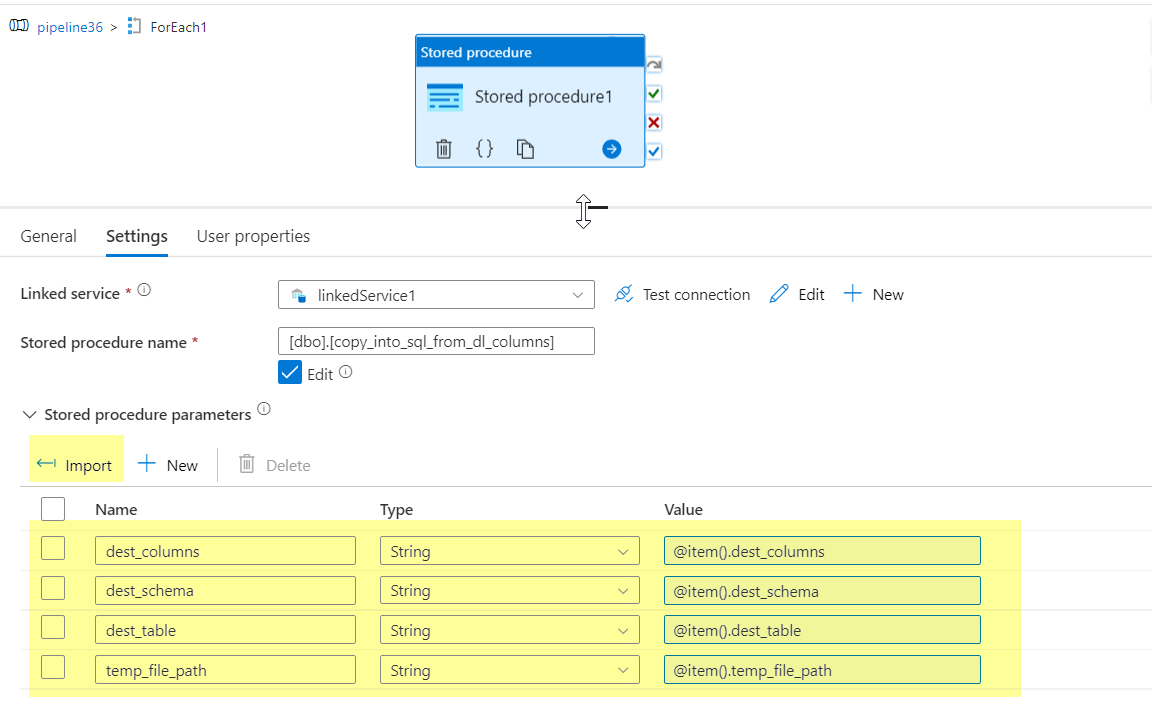

- Then inside foreach activity, Execute stored procedure activity is added

- Linked Service is selected and Stored procedure name is given.

- Import Parameter is selected and value is given in the parameters

dest_columns: @item().dest_columns

dest_schema: @item().dest_schema

dest_table: @item().dest_table

temp_file_path: @item().temp_file_fath

- Once pipeline is run, all files from ADLS are copied to Dedicated SQL pool.