SOS I'm trying to deploy the ELK stack on my Kubernetes cluster

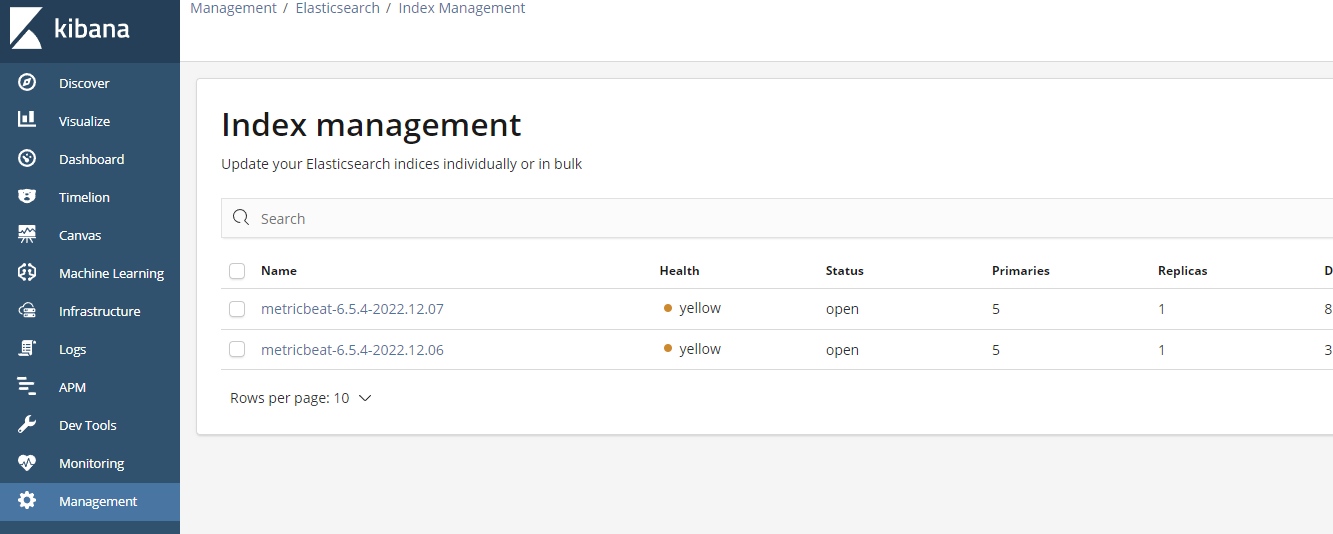

Elasticsearch, Metricbeat, Filebeat and Kibana are running on Kubernetes, but in Kibana there are no Filebeat index logs.

Kibana is accessible: URL

I don't know where the issue is. Please help me figure it out. Any ideas?

Pods:

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| counter | 1/1 | Running | 0 | 21h |
| es-mono-0 | 1/1 | Running | 0 | 19h |
| filebeat-4446k | 1/1 | Running | 0 | 11m |
| filebeat-fwb57 | 1/1 | Running | 0 | 11m |
| filebeat-mk5wl | 1/1 | Running | 0 | 11m |
| filebeat-pm8xd | 1/1 | Running | 0 | 11m |
| kibana-86d8ccc6bb-76bwq | 1/1 | Running | 0 | 24h |
| logstash-deployment-8ffbcc994-bcw5n | 1/1 | Running | 0 | 24h |
| metricbeat-4s5tx | 1/1 | Running | 0 | 21h |
| metricbeat-sgf8h | 1/1 | Running | 0 | 21h |
| metricbeat-tfv5d | 1/1 | Running | 0 | 21h |
| metricbeat-z8rnm | 1/1 | Running | 0 | 21h |

SVC

| NAME | TYPE | CLUSTER-IP | EXTERNAL-IP | PORT(S) | AGE |
|---|---|---|---|---|---|
| elasticsearch | LoadBalancer | 10.245.83.99 | 159.223.240.9 | 9200:31872/TCP,9300:30997/TCP | 19h |
| kibana | NodePort | 10.245.229.75 | `<none>` | 5601:32040/TCP | 24h |
| kibana-external | LoadBalancer | 10.245.184.232 | `<pending>` | 80:31646/TCP | 24h |
| logstash-service | ClusterIP | 10.245.113.154 | `<none>` | 5044/TCP | 24h |

Logstash logs (Raw)

Filebeat logs (Raw)

kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: elk
  labels:
    run: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      run: kibana
  template:
    metadata:
      labels:
        run: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:6.5.4
        env:
        - name: ELASTICSEARCH_URL
          value: http://elasticsearch.elk:9200/
        - name: XPACK_SECURITY_ENABLED
          value: "true"
        #- name: CLUSTER_NAME
        #  value: elasticsearch
        #resources:
        #  limits:
        #    cpu: 1000m
        #  requests:
        #    cpu: 500m
        ports:
        - containerPort: 5601
          name: http
          protocol: TCP
      #volumes:
      #  - name: logtrail-config
      #    configMap:
      #      name: logtrail-config

---

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: elk
  labels:
    #service: kibana
    run: kibana
spec:
  type: NodePort
  selector:
    run: kibana
  ports:
  - port: 5601
    targetPort: 5601

---

apiVersion: v1
kind: Service
metadata:
  name: kibana-external
spec:
  type: LoadBalancer
  selector:
    app: kibana
  ports:
  - name: http
    port: 80
    targetPort: 5601

filebeat.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: elk
  labels:
    k8s-app: filebeat

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: elk
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: elk
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    filebeat.config:
      prospectors:
        # Mounted `filebeat-prospectors` configmap:
        path: ${path.config}/prospectors.d/*.yml
        # Reload prospectors configs as they change:
        reload.enabled: false
      modules:
        path: ${path.config}/modules.d/*.yml
        # Reload module configs as they change:
        reload.enabled: false
    output.logstash:
      hosts: ['logstash-service:5044']
    setup.kibana.host: "http://kibana.elk:5601"
    setup.kibana.protocol: "http"

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-prospectors
  namespace: elk
  labels:
    k8s-app: filebeat
data:
  kubernetes.yml: |-
    - type: docker
      containers.ids:
      - "*"
      processors:
        - add_kubernetes_metadata:
            in_cluster: true

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: elk
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
      - name: filebeat
        image: docker.elastic.co/beats/filebeat:6.5.4
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        securityContext:
          runAsUser: 0
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: prospectors
          mountPath: /usr/share/filebeat/prospectors.d
          readOnly: true
        #- name: data
        #  mountPath: /usr/share/filebeat/data
          subPath: filebeat/
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: prospectors
        configMap:
          defaultMode: 0600
          name: filebeat-prospectors
      #- name: data
      #  persistentVolumeClaim:
      #    claimName: elk-pvc

---

Metricbeat.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: metricbeat-config
  namespace: elk
  labels:
    k8s-app: metricbeat
data:
  metricbeat.yml: |-
    metricbeat.config.modules:
      # Mounted `metricbeat-daemonset-modules` configmap:
      path: ${path.config}/modules.d/*.yml
      # Reload module configs as they change:
      reload.enabled: false
    processors:
      - add_cloud_metadata:
    cloud.id: ${ELASTIC_CLOUD_ID}
    cloud.auth: ${ELASTIC_CLOUD_AUTH}
    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
      username: ${ELASTICSEARCH_USERNAME}
      password: ${ELASTICSEARCH_PASSWORD}
    setup.kibana:
      host: "kibana.elk:5601"

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: metricbeat-daemonset-modules
  namespace: elk
  labels:
    k8s-app: metricbeat
data:
  system.yml: |-
    - module: system
      period: 10s
      metricsets:
        - cpu
        - load
        - memory
        - network
        - process
        - process_summary
        #- core
        #- diskio
        #- socket
      processes: ['.*']
      process.include_top_n:
        by_cpu: 5      # include top 5 processes by CPU
        by_memory: 5   # include top 5 processes by memory
    - module: system
      period: 1m
      metricsets:
        - filesystem
        - fsstat
      processors:
      - drop_event.when.regexp:
          system.filesystem.mount_point: '^/(sys|cgroup|proc|dev|etc|host|lib)($|/)'
  kubernetes.yml: |-
    - module: kubernetes
      metricsets:
        - node
        - system
        - pod
        - container
        - volume
      period: 10s
      hosts: ["localhost:10255"]

---

# Deploy a Metricbeat instance per node for node metrics retrieval
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: metricbeat
  namespace: elk
  labels:
    k8s-app: metricbeat
spec:
  selector:
    matchLabels:
      k8s-app: metricbeat
  template:
    metadata:
      labels:
        k8s-app: metricbeat
    spec:
      serviceAccountName: metricbeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: metricbeat
        image: docker.elastic.co/beats/metricbeat:6.5.4
        args: [
          "-c", "/etc/metricbeat.yml",
          "-e",
          "-system.hostfs=/hostfs",
        ]
        env:
        - name: ELASTICSEARCH_HOST
          value: elasticsearch
        - name: ELASTICSEARCH_PORT
          value: "9200"
        - name: ELASTICSEARCH_USERNAME
          value: elastic
        - name: ELASTICSEARCH_PASSWORD
          value: changeme
        - name: ELASTIC_CLOUD_ID
          value:
        - name: ELASTIC_CLOUD_AUTH
          value:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        securityContext:
          runAsUser: 0
        resources:
          limits:
            memory: 100Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: config
          mountPath: /etc/metricbeat.yml
          readOnly: true
          subPath: metricbeat.yml
        - name: modules
          mountPath: /usr/share/metricbeat/modules.d
          readOnly: true
        - name: dockersock
          mountPath: /var/run/docker.sock
        - name: proc
          mountPath: /hostfs/proc
          readOnly: true
        - name: cgroup
          mountPath: /hostfs/sys/fs/cgroup
          readOnly: true
        - name: data
          mountPath: /usr/share/metricbeat/data
          subPath: metricbeat/
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: cgroup
        hostPath:
          path: /sys/fs/cgroup
      - name: dockersock
        hostPath:
          path: /var/run/docker.sock
      - name: config
        configMap:
          defaultMode: 0600
          name: metricbeat-config
      - name: modules
        configMap:
          defaultMode: 0600
          name: metricbeat-daemonset-modules
      - name: data
        persistentVolumeClaim:
          claimName: elk-pvc

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metricbeat
subjects:
- kind: ServiceAccount
  name: metricbeat
  namespace: elk
roleRef:
  kind: ClusterRole
  name: metricbeat
  apiGroup: rbac.authorization.k8s.io

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: metricbeat
  labels:
    k8s-app: metricbeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - events
  - pods
  verbs:
  - get
  - watch
  - list

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: metricbeat
  namespace: elk
  labels:
    k8s-app: metricbeat

Logstash.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-configmap
  namespace: elk
data:
  logstash.yml: |
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
  logstash.conf: |
    input {
      beats {
        port => 5044
      }
    }
    filter {
      grok {
        match => { "message" => "%{COMBINEDAPACHELOG}" }
      }
      date {
        match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]
      }
      geoip {
        source => "clientip"
      }
    }
    output {
      elasticsearch {
        hosts => ["elasticsearch.elk:9200"]
      }
    }

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash-deployment
  namespace: elk
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
      - name: logstash
        image: docker.elastic.co/logstash/logstash:6.3.0
        ports:
        - containerPort: 5044
        volumeMounts:
        - name: config-volume
          mountPath: /usr/share/logstash/config
        - name: logstash-pipeline-volume
          mountPath: /usr/share/logstash/pipeline
      volumes:
      - name: config-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.yml
            path: logstash.yml
      - name: logstash-pipeline-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.conf
            path: logstash.conf

---

kind: Service
apiVersion: v1
metadata:
  name: logstash-service
  namespace: elk
spec:
  selector:
    app: logstash
  ports:
  - protocol: TCP
    port: 5044
    targetPort: 5044

Full source files (GitHub)

CodePudding user response:

Try using Fluentd to transport the logs instead.

fluentd.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: elk
  labels:
    app: fluentd

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  labels:
    app: fluentd
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - namespaces
  verbs:
  - get
  - list
  - watch

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: elk

---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: elk
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:v1.4.2-debian-elasticsearch-1.1
        env:
        - name: FLUENT_ELASTICSEARCH_HOST
          value: "elasticsearch.elk.svc.cluster.local"
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        - name: FLUENT_ELASTICSEARCH_SCHEME
          value: "http"
        - name: FLUENTD_SYSTEMD_CONF
          value: disable
        - name: FLUENT_CONTAINER_TAIL_PARSER_TYPE
          value: /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
        resources:
          limits:
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 100Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
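
One thing to check after switching: as far as I know, this Fluentd image writes to `logstash-*` indices by default, so the index pattern to create in Kibana would be `logstash-*`, not `filebeat-*`. If you want a different prefix, here is a minimal sketch of two extra entries for the `env:` list of the `fluentd` container. It assumes the image's bundled Elasticsearch output reads `FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT` and `FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX`. I have not verified that for this exact tag, and the prefix `k8s-logs` is a made-up example.

```yaml
# Assumption: extra entries for the env: list of the fluentd container.
# Check that your image tag supports these variables before relying on them.
- name: FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT
  value: "true"        # daily indices named <prefix>-YYYY.MM.DD
- name: FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX
  value: "k8s-logs"    # hypothetical prefix; the Kibana index pattern would then be k8s-logs-*
```

Whichever prefix ends up being used, the Kibana index pattern has to match it before anything shows up in Discover.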