Problem

I get the same output every time, regardless of the input.

Context

I have a .csv file in which each row holds the IDs of a previously formed team of 5 people, like this:

0, 1, 2, 3, 4

5, 6, 7, 3, 8

2, 5, 6, 7, 3

9, 1, 2, 6, 4

9, 0, 1, 2, 4

...

My goal with the following code is to be able to input 4 IDs and get a prediction of what the 5th member should be.

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.neighbors import KNeighborsClassifier

import pandas as pd

file = 'People.csv'

# Read dataset without a header row:

dataset = pd.read_csv(file, header=None)

# return the first 5 rows:

dataset.head()

# Convert input to a two-dimensional array:

X = dataset.iloc[:, :-1].values

y = dataset.iloc[:, 4].values

# Split dataset into random train and test subsets:

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20)

# Standardize - removes mean and scales to unit variance:

scaler = StandardScaler()

scaler.fit(X_train)

X_train = scaler.transform(X_train)

X_test = scaler.transform(X_test)

# Use the KNN classifier to fit data:

classifier = KNeighborsClassifier(n_neighbors=5)

classifier.fit(X_train, y_train)

# Uses the classifier to predict the value of the fifth column:

y_pred = classifier.predict([[5, 6, 7, 3]])

# Print the predicted value:

print(y_pred)

Answer

Mainstream statistical machine learning assumes that it's possible to predict an attribute of an object based on other observed attributes.

In the problem presented here, there are no such attributes. Each row represents a previously observed team, and each column holds the identifier of one team member, not a measured property. In other words, it is not clear how we would build a model from these columns directly.
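To illustrate why identifiers make poor numeric features, here is a minimal sketch. The three arrays are made up for illustration and are not taken from the CSV. It compares plain Euclidean distances, which is what a distance-based model such as KNN relies on.

```python
import numpy as np

# Made-up examples: the same four members in two different column orders,
# plus a different team that shares only members 5 and 6 with the first one.
team_a = np.array([5, 6, 7, 3])
team_a_reordered = np.array([3, 7, 6, 5])
other_team = np.array([4, 5, 6, 2])

# Euclidean distance treats the IDs as magnitudes and the columns as fixed slots.
dist_same_members = np.linalg.norm(team_a - team_a_reordered)
dist_other_team = np.linalg.norm(team_a - other_team)

print(dist_same_members, dist_other_team)
```

Under this metric the reordered copy of the same team ends up farther away than a team with different members, which suggests the distances carry little meaning for this data.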

There's an alternate way to frame this problem though: "Which people prefer to work together?" or "

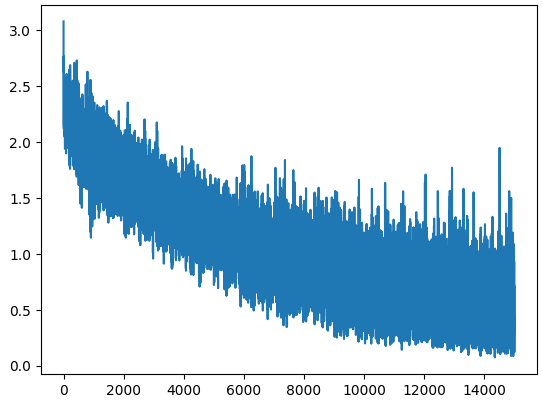

We now have a model that should be able to predict "who is the missing person?"

At prediction time we want to answer: who is missing from the set [0, 1, 2, 3]? But remember: we trained on examples where we permuted the inputs, so there's a chance that passing [3, 2, 1, 0] through the network gives a different answer.

One way to remedy this is to permute a test example 100 times, pass every permutation through the network, and average the results (these examples are small enough that you could exhaustively test every ordering, but that would not scale to larger sets).

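import matplotlib.pyplot as plt
import numpy as np
from matplotlib.ticker import MaxNLocator

# Assumes `model` (the trained network) and `rng` (a NumPy Generator,
# e.g. np.random.default_rng()) are already defined.
def make_predictions(team, ax=None, title=None):
    # team: np.array([[5, 6, 7, 3]])
    # Warning: mutates `team`
    if ax is None:
        ax = plt.gca()  # fall back to the current axes
    predictions = []
    for _ in range(100):
        # Randomly reorder the four members in place
        rng.shuffle(team, axis=1)
        predictions.append(model.predict_proba(team).flatten())
    # Average the class probabilities over all shuffles
    predictions = np.asarray(predictions).mean(axis=0)
    ax.bar(range(10), predictions)
    ax.set_title(title)
    ax.xaxis.set_major_locator(MaxNLocator(integer=True))
    return predictions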

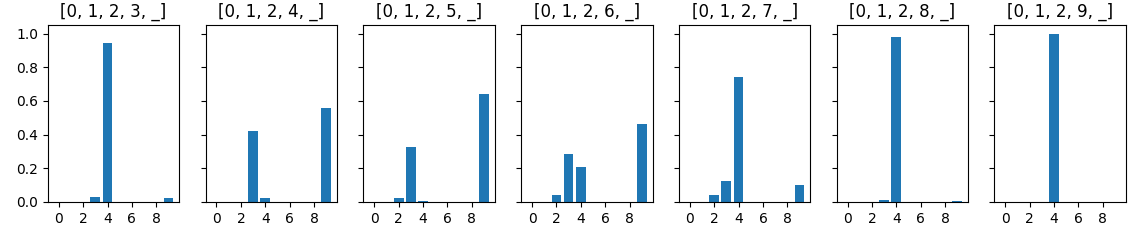
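For inputs this small, the exhaustive variant mentioned above is also feasible. The following is a minimal sketch, not the code used for the plots: `average_over_permutations` and `toy_predict_proba` are hypothetical names, and the toy function only stands in for a real model that takes a batch of shape (n, 4) and returns probabilities of shape (n, 10).

```python
from itertools import permutations

import numpy as np


def average_over_permutations(predict_proba, team):
    """Average class probabilities over every ordering of `team`."""
    orderings = np.array(list(permutations(team)))  # shape (n!, len(team))
    probs = np.asarray(predict_proba(orderings))    # shape (n!, n_classes)
    return probs.mean(axis=0)


def toy_predict_proba(batch):
    """Hypothetical stand-in model whose output depends on input order:
    it puts all probability on whichever ID sits in the first column."""
    batch = np.asarray(batch)
    probs = np.zeros((len(batch), 10))
    probs[np.arange(len(batch)), batch[:, 0]] = 1.0
    return probs


avg_a = average_over_permutations(toy_predict_proba, [5, 6, 7, 3])
avg_b = average_over_permutations(toy_predict_proba, [3, 7, 6, 5])
print(np.allclose(avg_a, avg_b))
```

Because every ordering is visited exactly once, the averaged result no longer depends on the order in which the team was passed in. The cost grows factorially with team size, so this only makes sense for small teams.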

We now have enough written to see what the model predicts across a variety of inputs. We observed [0, 1, 2, 3, 4] in the input data, but what does the model predict when the first three members are [0, 1, 2] and we vary the fourth?

Let's find out:

fig, axs = plt.subplots(1, 7, sharey=True)
for i, ax in zip([3, 4, 5, 6, 7, 8, 9], axs):
    _ = make_predictions(np.array([[0, 1, 2, i]]), ax, title=f'[0, 1, 2, {i}, _]')
plt.show()

This gives some insight into where the model is less certain. For [0, 1, 2, 4, _], it assigns roughly 40% to 3 and roughly 55% to 9. This reflects the training data, where we saw both [0, 1, 2, 3, 4] and [0, 1, 2, 4, 9].

Is this a good approach?

Probably not. It assumes a fixed pool of exactly 10 people from which we repeatedly form teams of 5.

The final example also shows that there is inherent uncertainty in this problem. A simpler approach, like the one used in the Apriori answer, takes far less work, is more interpretable, and can be tuned with heuristic background knowledge as necessary.
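To give a feel for how little code a counting-based approach needs, here is a rough sketch. It is not Apriori itself, only a plain co-occurrence count. `rank_candidates` is a hypothetical helper, and the data is just the five sample rows shown in the question, not the full CSV.

```python
from collections import Counter


def rank_candidates(teams, partial):
    """Score each ID outside `partial` by how many of the given members
    it has shared a past team with, summed over all past teams."""
    partial = set(partial)
    scores = Counter()
    for team in teams:
        members = set(team)
        overlap = len(members & partial)
        for candidate in members - partial:
            scores[candidate] += overlap
    return scores.most_common()


# The five sample teams from the question
teams = [
    [0, 1, 2, 3, 4],
    [5, 6, 7, 3, 8],
    [2, 5, 6, 7, 3],
    [9, 1, 2, 6, 4],
    [9, 0, 1, 2, 4],
]

ranking = rank_candidates(teams, [0, 1, 2, 4])
print(ranking[:2])  # [(9, 7), (3, 5)]
```

On these five rows the count ranks 9 first and 3 second for [0, 1, 2, 4, _], the same two candidates the network favored, and the scores can be read directly as "shared past teammates".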