Goal

Is there a way to (1) download every file named index.json in an Azure Storage container (in directories, subdirectories, sub-subdirectories, and so on) and (2) rename each index.json with the name of its source directory?

Proposed solution

I'm pursuing a solution that (1) uses az storage fs file download in the az CLI and (2) applies a wildcard or pattern that matches every index.json at every directory level of the container, but I haven't been able to get (2) to work. See the failed examples below.

Current inefficient solution

My current solution is inefficient and only returns index.json from the top-level directories of the container, not from nested ones.

I can add details around the use case if that will help. Thank you for any help or ideas on better approaches.

Example of Azure Blob structure

container/
    product-1/
        articles/
            page-1.html
            page-2.html
        fonts/
            fontawesome.ttf
        images/
            product-image-1.png
            product-image-2.png
        node-modules/
            ...
        styles/
            style.css
            style.js
        index.html
        index.json
    product-2/
        articles/
            page-3.html
            page-4.html
        fonts/
            fontawesome.ttf
        images/
            product-image-3.png
            product-image-4.png
        node-modules/
            ...
        product-2-a/
            articles/
                page-3.html
                page-4.html
            fonts/
                fontawesome.ttf
            images/
                product-image-3.png
                product-image-4.png
            node-modules/
                ...
            styles/
                style.css
                style.js
            index.html
            index.json
        styles/
            style.css
            style.js
        index.html
        index.json
    index.html

Desired result (local machine) - each .json file is a renamed index.json file:

localIndexes/
    product-1.json
    product-2.json
    product-2-a.json
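The rename rule boils down to: take every blob whose name ends in /index.json and name the local copy after the directory that directly contains it. A minimal Python sketch of just that mapping, working on plain blob-name strings with no Azure calls. The function name local_index_names is hypothetical, and skipping an index.json at the container root (which has no directory name) and rejecting duplicate leaf names are assumptions on my part:

```python
import posixpath


def local_index_names(blob_names, target="index.json"):
    """Map '<...>/<dir>/index.json' blob names to '<dir>.json' local names.

    Hypothetical helper: it only looks at name strings, so it can be fed
    from any listing (az CLI JSON output, an SDK call, and so on).
    """
    mapping = {}
    for name in blob_names:
        parent, base = posixpath.split(name)
        if base != target or not parent:
            continue  # not an index.json, or an index.json at the container root
        local = posixpath.basename(parent) + ".json"
        if local in mapping.values():
            # Two directories with the same leaf name would overwrite each other.
            raise ValueError(f"duplicate local name {local!r} for {name!r}")
        mapping[name] = local
    return mapping


if __name__ == "__main__":
    blobs = [
        "product-1/index.json",
        "product-1/styles/style.css",
        "product-2/index.json",
        "product-2/product-2-a/index.json",
        "index.html",
    ]
    for blob, local in local_index_names(blobs).items():
        print(f"{blob} -> localIndexes/{local}")
```

Because the leaf directory name alone becomes the file name, nested directories that happen to share a name (for example two different product-2-a folders) would collide. The sketch raises in that case rather than silently overwriting.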

Current undesirable strategy

- Create a JSON file that lists all directories in the container. Note that with --recursive false it does not list subdirectories, sub-subdirectories, and so on, so nested directories are missed, which is not what I want.

az storage fs directory list -f wwwroot --recursive false --account-name $storageAccountName --account-key $accountKey > dirs.json

Result: dirs.json (incomplete - just an example of objects included)

[
  {
    "contentLength": 0,
    "etag": "123",
    "group": "$abc",
    "isDirectory": true,
    "lastModified": "2022-01-13T23:20:19",
    "name": "product-1",
    "owner": "$abc",
    "permissions": "abc---"
  },
  {
    "contentLength": 0,
    "etag": "345",
    "group": "$abc",
    "isDirectory": true,
    "lastModified": "2022-01-13T23:20:19",
    "name": "product-2",
    "owner": "$abc",
    "permissions": "abc---"
  },
  {
    "contentLength": 0,
    "etag": "456",
    "group": "$abc",
    "isDirectory": true,
    "lastModified": "2022-01-13T23:20:19",
    "name": "styles",
    "owner": "$abc",
    "permissions": "abc---"
  }
]

- Use jq to remove the object for each unneeded .name (a.k.a. directory) from dirs.json. With this inefficient method, the loop in the next step breaks if it encounters a directory that doesn't include index.json:

for excludeDir in css \
    fonts \
    images \
    js \
    node_modules \
    styles ; do
    jq --arg excludeDir "$excludeDir" '[.[] | select(.name != $excludeDir)]' dirs.json > temp.tmp && mv temp.tmp dirs.json
done

Result: dirs.json (incomplete - just an example of objects included)

[
  {
    "contentLength": 0,
    "etag": "123",
    "group": "$abc",
    "isDirectory": true,
    "lastModified": "2022-01-13T23:20:19",
    "name": "product-1",
    "owner": "$abc",
    "permissions": "abc---"
  },
  {
    "contentLength": 0,
    "etag": "345",
    "group": "$abc",
    "isDirectory": true,
    "lastModified": "2022-01-13T23:20:19",
    "name": "product-2",
    "owner": "$abc",
    "permissions": "abc---"
  }
]

- Loop over each .name (a.k.a. directory) in dirs.json to (1) download index.json from that directory and (2) rename index.json with the name of the directory.

jq -r '.[].name' dirs.json |
while IFS= read -r name; do
    # Strip a trailing carriage return (e.g. jq output with CRLF line endings on Windows)
    blobName=$(echo "$name" | tr -d '\r')
    az storage blob download --container-name "$containerName" --file "localIndexes/$blobName.json" --name "$blobName/index.json" --account-key "$accountKey" --account-name "$storageAccountName"
done
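The jq loop in the second step rewrites dirs.json once per excluded name. The same exclusion can be done in a single pass. Here is a minimal Python sketch, assuming dirs.json is a JSON array of objects with a "name" field like the sample output (drop_excluded and filter_dirs_file are hypothetical names). Matching is exact, so the list has to use names exactly as stored. For example, the example structure shows node-modules while the loop excludes node_modules.

```python
import json

# Directory names excluded by the jq loop in the question.
EXCLUDED = {"css", "fonts", "images", "js", "node_modules", "styles"}


def drop_excluded(entries, excluded=EXCLUDED):
    """Keep only directory entries whose 'name' is not in `excluded` (exact match)."""
    return [e for e in entries if e.get("name") not in excluded]


def filter_dirs_file(path="dirs.json"):
    """Rewrite dirs.json in place, like the jq loop but in one pass."""
    with open(path, encoding="utf-8") as f:
        entries = json.load(f)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(drop_excluded(entries), f, indent=2)
```

This keeps the deny-list approach, so a directory that isn't on the list and has no index.json still gets through to the download loop.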

Incomplete result

Note that product-2-a.json is missing, as would be the index.json of any further nested subdirectory.

localIndexes/
    product-1.json
    product-2.json
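The loop can only build paths from the first-level names in dirs.json, which is why product-2-a.json is missing. The following Python sketch contrasts first-level directory names with the directories that actually contain an index.json. It uses a hypothetical, hand-written list of blob names modeled on the example structure, and the helper names are illustrative:

```python
def top_level_dirs(blob_names):
    """First path segment of every blob that lives inside a directory."""
    return sorted({n.split("/", 1)[0] for n in blob_names if "/" in n})


def dirs_with_index(blob_names, target="index.json"):
    """Full directory path of every blob named `target`, at any depth."""
    suffix = "/" + target
    return sorted(n[: -len(suffix)] for n in blob_names if n.endswith(suffix))


if __name__ == "__main__":
    blobs = [
        "index.html",
        "product-1/index.json",
        "product-1/styles/style.css",
        "product-2/index.json",
        "product-2/product-2-a/index.json",
    ]
    print("first-level dirs:", top_level_dirs(blobs))
    print("dirs with index.json:", dirs_with_index(blobs))
```

Working from blob names rather than from a directory listing also avoids the breakage on directories without an index.json, because only directories that actually hold one are produced.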

Failed attempts at using the az CLI to download index.json using wildcards/patterns

Various iterations of:

az storage fs file download -p */index.json -f wwwroot --account-name $storageAccountName --account-key $accountKey

az storage fs file download -p /**/index.json -f wwwroot --account-name $storageAccountName --account-key $accountKey

az storage fs file download -p /--pattern index.json -f wwwroot --account-name $storageAccountName --account-key $accountKey
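As far as I can tell, az storage fs file download only downloads the single file named by -p/--path, so a wildcard there is taken literally. An unquoted */index.json may also be expanded by the local shell before az sees it. For reference, az storage blob download-batch has a --pattern option for shell-style patterns. As far as I know, it recreates the blob directory structure under the destination, so renaming would still be a separate step. The Python sketch below shows how a shell-style pattern behaves under fnmatch-style matching, on the assumption that the CLI's pattern matching follows similar rules. In this style `*` also matches `/`, so `*/index.json` catches index.json at any depth below the root. By contrast, `*index.json` would also catch a name like myindex.json.

```python
from fnmatch import fnmatchcase

# Hypothetical blob names modeled on the example structure.
BLOBS = [
    "index.html",
    "product-1/index.json",
    "product-1/styles/style.css",
    "product-2/index.json",
    "product-2/product-2-a/index.json",
]

# With fnmatch-style matching, '*' also matches '/', so this one pattern
# selects index.json at every depth below the container root.
PATTERN = "*/index.json"


def matching(names, pattern=PATTERN):
    """Return the names that match a shell-style pattern (case-sensitive)."""
    return [n for n in names if fnmatchcase(n, pattern)]


if __name__ == "__main__":
    print(matching(BLOBS))
```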

Answer

Azure Storage has no server-side filter that matches a file name at any depth (blob listing can only filter by name prefix). You need to fetch the list of all the files and filter it on the client side according to your requirements.
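Following that approach, here is a minimal Python sketch that lists every blob, keeps only the blobs named index.json inside a directory, and saves each one under its directory's name. It assumes a client object shaped like ContainerClient from the azure-storage-blob package: list_blobs() yields items with a .name attribute, and download_blob(name).readall() returns the blob's bytes. download_indexes is a hypothetical name.

```python
import os
import posixpath


def download_indexes(container, dest_dir="localIndexes", target="index.json"):
    """List every blob, keep '<dir>/index.json' ones, save each as '<dir>.json'.

    `container` is assumed to behave like azure.storage.blob.ContainerClient.
    Returns the list of local paths written.
    """
    os.makedirs(dest_dir, exist_ok=True)
    saved = []
    for blob in container.list_blobs():  # no suffix filter on the server, so list all
        parent, base = posixpath.split(blob.name)
        if base != target or not parent:
            continue  # client-side filter: skip other files and a root index.json
        local_path = os.path.join(dest_dir, posixpath.basename(parent) + ".json")
        data = container.download_blob(blob.name).readall()
        with open(local_path, "wb") as f:
            f.write(data)
        saved.append(local_path)
    return saved
```

With the real SDK (`pip install azure-storage-blob`), `container` could be `ContainerClient.from_connection_string(conn_str, container_name="wwwroot")`, where conn_str is your storage account's connection string. Directories that share a leaf name would overwrite each other's file, so include more of the path in the local name if that can happen.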

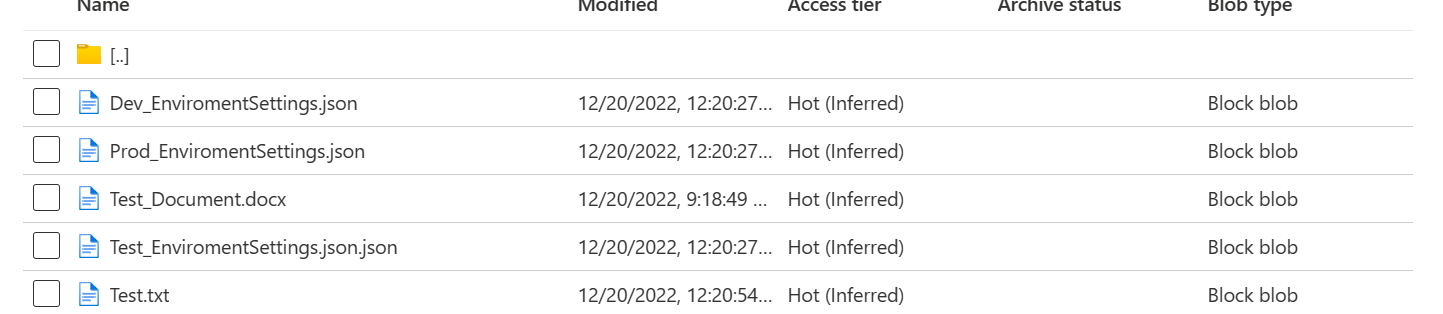

Different file types uploaded in Azure

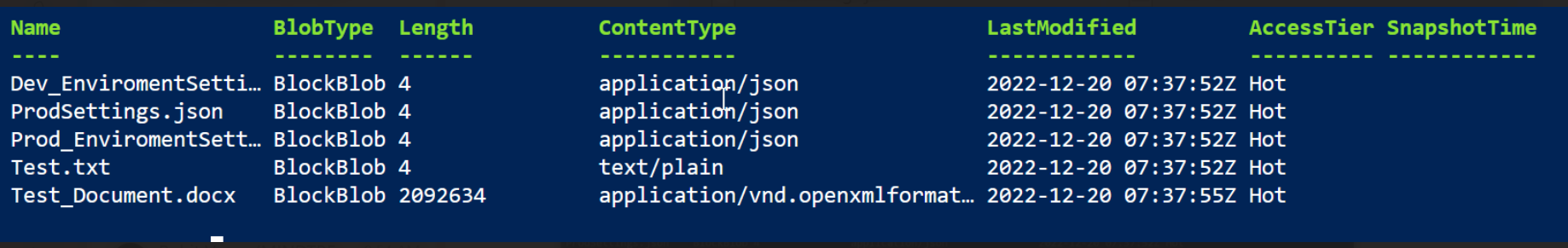

Fetching the files from Azure using C# and a PowerShell script

Below is the PowerShell script to fetch the files:

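# Uses the Az.Storage module throughout (New-AzureStorageContext and
# Get-AzureStorageContainer are the older Azure.Storage module's names)
Install-Module Az.Storage
Connect-AzAccount
$MaxReturn = 20000
$Container_Name = "container_Name"
$Token = $Null
$Storage_Context = New-AzStorageContext -StorageAccountName 'storageaccount' -StorageAccountKey 'Key'
$Container = Get-AzStorageContainer -Name $Container_Name -Context $Storage_Context
# Get-AzStorageBlob returns at most $MaxReturn blobs per call, so page with the continuation token
$Blobs = @()
do {
    $Page = Get-AzStorageBlob -Container $Container_Name -MaxCount $MaxReturn -ContinuationToken $Token -Context $Storage_Context
    if ($Page.Count -le 0) { break }
    $Blobs += $Page
    $Token = $Page[$Page.Count - 1].ContinuationToken
} while ($Token -ne $Null)
Echo $Blobs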
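Get-AzStorageBlob returns at most -MaxCount blobs per call. Listing a container that holds more than $MaxReturn blobs therefore means repeating the call, passing the continuation token from the previous page, until no token comes back. The Python sketch below shows the same loop in language-neutral form. fetch_page is a hypothetical stand-in for one listing call. The Azure Python SDK's list_blobs already pages internally, so with it you would just iterate over the result.

```python
def list_all(fetch_page):
    """Collect every item from a paged listing that uses continuation tokens.

    `fetch_page(token)` is a hypothetical callable returning (items, next_token);
    next_token is None when there are no more pages. The first call passes None.
    """
    items, token = [], None
    while True:
        page, token = fetch_page(token)
        items.extend(page)
        if token is None:  # no continuation token: this was the last page
            return items


if __name__ == "__main__":
    # Simulated three-page listing keyed by the token that requests each page.
    pages = {None: (["a", "b"], "t1"), "t1": (["c"], "t2"), "t2": ([], None)}
    print(list_all(lambda token: pages[token]))
```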

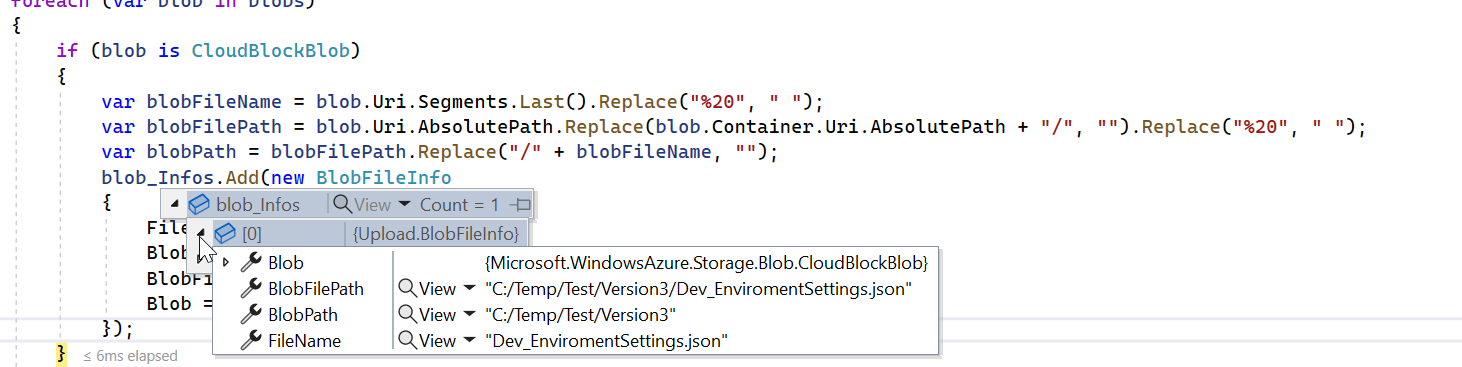

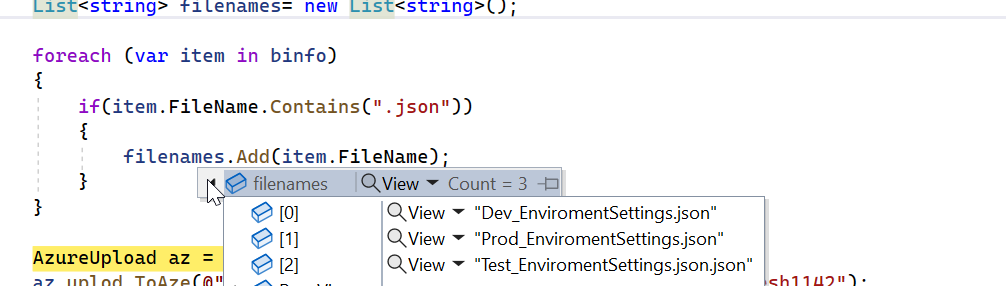

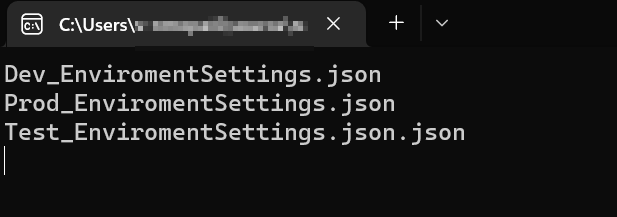

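Below is the C# code that collects file information for each blob (recursing into virtual directories), which can then be filtered by file name on the client side: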

foreach (var blob in blobs)

{

if (blob is CloudBlockBlob)

{

var blob_FileName = blob.Uri.Segments.Last().Replace(" ", " ");

var blob_FilePath = blob.Uri.AbsolutePath.Replace(blob.Container.Uri.AbsolutePath "/", "").Replace(" ", " ");

var blob_Path = blob_FilePath.Replace("/" blob_FileName, "");

blob_Infos.Add(new BlobFileInfo

{

File = blob_FileName,

Path = blob_Path,

Blob_FilePath = blob_FilePath,

Blob = blob

});

}

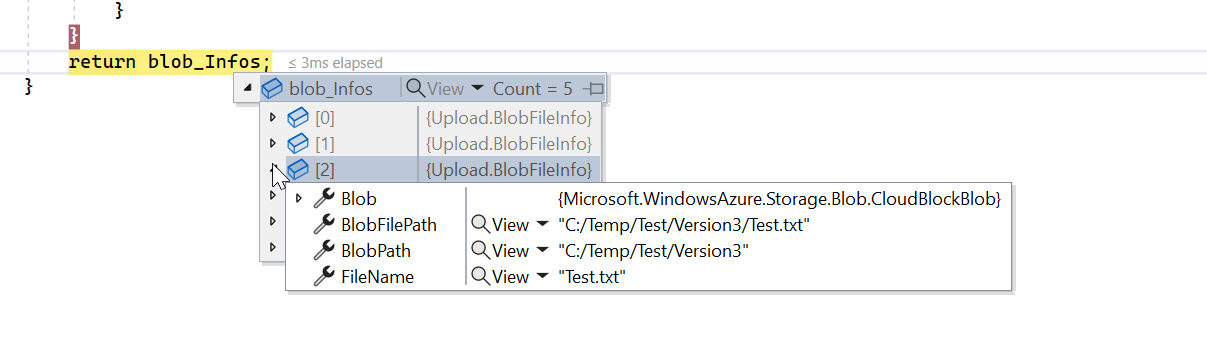

if (blob is CloudBlobDirectory)

{

var blob_Dir = blob.Uri.OriginalString.Replace(blob.Container.Uri.OriginalString "/", "");

blob_Dir = blob_Dir.Remove(blob_Dir.Length - 1);

var subBlobs = ListFolderBlobs(containerName, blob_Dir);

blob_Infos.AddRange(subBlobs);

}

}

return blob_Infos;
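The C# code walks the container one level at a time and recurses into each virtual directory. Below is a Python sketch of the same recursion. It uses a hypothetical list_level(prefix) callable that returns the blob names and sub-prefixes for one '/'-delimited level, simulated here from an in-memory list. With the azure-storage-blob package, ContainerClient.walk_blobs(name_starts_with=prefix, delimiter='/') yields comparable per-level results. A flat listing already returns every blob at every depth, so this recursion is only needed when you list hierarchically.

```python
def walk(list_level, prefix=""):
    """Recursively yield every blob name under `prefix`.

    `list_level(prefix)` is a hypothetical callable returning
    (blob_names, sub_prefixes) for one '/'-delimited level; each
    sub-prefix ends with '/'.
    """
    files, subdirs = list_level(prefix)
    yield from files
    for sub in subdirs:
        # Recurse into each virtual directory, like the CloudBlobDirectory branch.
        yield from walk(list_level, sub)


def make_list_level(all_names):
    """Simulate a '/'-delimited listing over an in-memory list of blob names."""
    def list_level(prefix):
        files, subdirs = [], set()
        for name in all_names:
            if not name.startswith(prefix):
                continue
            head, sep, _ = name[len(prefix):].partition("/")
            if sep:
                subdirs.add(prefix + head + "/")  # deeper blob: report its directory
            else:
                files.append(name)  # blob directly at this level
        return files, sorted(subdirs)
    return list_level


if __name__ == "__main__":
    names = [
        "index.html",
        "product-1/index.json",
        "product-1/styles/style.css",
        "product-2/index.json",
        "product-2/product-2-a/index.json",
    ]
    indexes = [n for n in walk(make_list_level(names)) if n.endswith("/index.json")]
    print(indexes)
```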