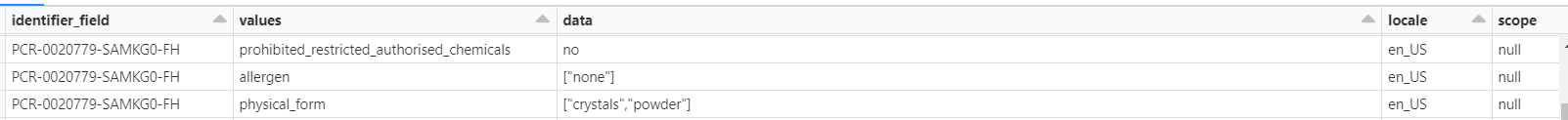

I have df where I have the column called data. In the data column we can expect the single values per identifier_filed column or list values. This is shown as [ ]brackets under the data column. For example Allegren under the values column can have different data type, but this specific identifie_field has only one value but other identifie_field can more than one .

Moreoverphysical_form value can have multiple data type values also. I would like to explode on data column and presnt each value as a seperate row.

schema of the df:

root

|-- identifier_field: string (nullable = true)

|-- values: string (nullable = false)

|-- data: string (nullable = true)

|-- locale: string (nullable = true)

|-- scope: string (nullable = true)

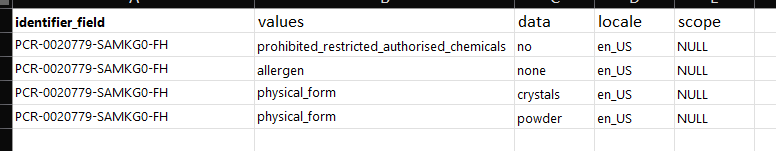

Desired OUTPUT:

CodePudding user response:

Alternative solution without udf.

df = (df

.withColumn('data', F.when(F.col('data').substr(0, 1) != '[',

F.concat(F.lit('["'), F.col('data'), F.lit('"]')))

.otherwise(F.col('data')))

.withColumn('data', F.from_json('data', 'array<string>'))

.select(F.explode_outer('data'))

)

First when statement might require a change based on what other data you have in the data column. The reason is the first row is not a array looking string, so you need to align all string to be the array like string. Then, you can use from_json to convert it to ArrayType which then you can do explode on.

CodePudding user response:

UPDATE: Without UDF

df.withColumn("data", split(col("data"), ","))

.withColumn("data", explode_outer("data"))

.withColumn("data", regexp_replace(col("data"), '\"|\[|\]','')).show()

-------------------- -------------------- --------

| identifier_field| values| data|

-------------------- -------------------- --------

|PCR-0020779-SAMKG...|prohibited_restri...| no|

|PCR-0020779-SAMKG...| physical_form|crystals|

|PCR-0020779-SAMKG...| physical_form| powder|

|PCR-0020779-SAMKG...| region_country| Emea_EU|

-------------------- -------------------- --------

You can use UDF to convert string value to array in column data and then explode.

data = [("PCR-0020779-SAMKGO-FH", "prohibited_restricted_authorised_chemicals", "no"),

("PCR-0020779-SAMKGO-FH", "physical_form", '["crystals", "powder"]'),

("PCR-0020779-SAMKGO-FH", "region_country", ' ["Emea_EU"]')]

columns = ["identifier_field", "values", "data"]

spark.createDataFrame(data, columns)

--------------------- ------------------------------------------ ----------------------

|identifier_field |values |data |

--------------------- ------------------------------------------ ----------------------

|PCR-0020779-SAMKGO-FH|prohibited_restricted_authorised_chemicals|no |

|PCR-0020779-SAMKGO-FH|physical_form |["crystals", "powder"]|

|PCR-0020779-SAMKGO-FH|region_country | ["Emea_EU"] |

--------------------- ------------------------------------------ ----------------------

def string_to_array(c):

out = eval(c) if c.strip().startswith('[') else [c]

return out

string_to_array_udf = udf(string_to_array, ArrayType(StringType()))

out_df = df.withColumn("data", string_to_array_udf(col("data"))).withColumn("data", explode_outer("data"))

-------------------- -------------------- --------

| identifier_field| values| data|

-------------------- -------------------- --------

|PCR-0020779-SAMKG...|prohibited_restri...| no|

|PCR-0020779-SAMKG...| physical_form|crystals|

|PCR-0020779-SAMKG...| physical_form| powder|

|PCR-0020779-SAMKG...| region_country| Emea_EU|

-------------------- -------------------- --------