I am trying to plot the gradient descent cost (`cost_list`) against the epoch, but I am getting lost with basic Python function structure. Here is the code I am working with:

```python
import numpy as np


def gradientDescent(x, y, theta, alpha, m, numIterations):
    xTrans = x.T
    cost_list = []
    for i in range(0, numIterations):
        hypothesis = np.dot(x, theta)
        loss = hypothesis - y
        cost = np.sum(loss ** 2) / (2 * m)
        cost_list.append(cost)  # record the cost for this iteration (epoch)
        print("Iteration %d | Cost: %f" % (i, cost))
        # avg gradient per example
        gradient = np.dot(xTrans, loss) / m
        # update
        theta = theta - alpha * gradient
        #a = plt.plot(i,theta)
    return theta, cost_list
```

What I am trying to do is return `cost_list`, which collects the cost at each step, and then plot it with the lines of code below.

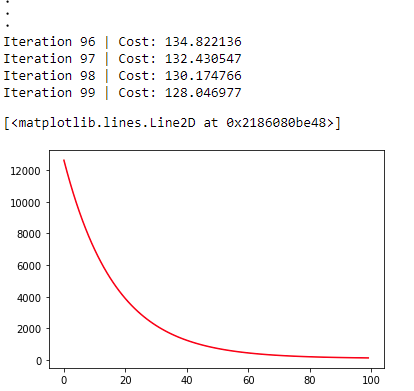

```python
theta, cost_list = gradientDescent(x, y, bias, 0.000001, len(my_dataframe), 100)
plt.plot(list(range(numIterations)), cost_list, '-r')
```

But this gives me a `NameError` saying `numIterations` is not defined. What should I change in the code?

Answer:

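`numIterations` is a parameter of `gradientDescent`, so the name only exists inside the function. Your `plt.plot` line runs outside the function, where `numIterations` was never defined, hence the `NameError`. Define the value at the top level and pass it to the function. I tried your code with sample data: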

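```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# gradientDescent is the function from the question
df = pd.DataFrame(np.random.randint(1, 50, size=(50, 2)), columns=list('AB'))
x = df.A
y = df.B
bias = np.random.randn(50, 1)

numIterations = 100  # defined outside the function, so the plot call can use it
theta, cost_list = gradientDescent(x, y, bias, 0.000001, len(df), numIterations)

plt.plot(list(range(numIterations)), cost_list, '-r')
plt.show()
```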
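A minimal, self-contained sketch of the same idea, assuming an ordinary linear-regression setup. Here `theta` has one entry per column of `x` (rather than one per row, as `bias` does in the sample above), and a column of ones supplies the intercept. The names `gradient_descent`, `num_iterations` and `features`, the synthetic data and the learning rate are illustrative assumptions, not taken from the question. The iteration count is defined at the top level, and the x-axis values are derived from `len(cost_list)`, so the plot no longer depends on any name that is local to the function.

```python
import numpy as np


def gradient_descent(x, y, theta, alpha, num_iterations):
    """Batch gradient descent for linear regression (illustrative sketch).

    x: (m, n) design matrix, y: (m,) targets, theta: (n,) parameters.
    Returns the final theta and the cost recorded at every iteration.
    """
    m = len(y)
    cost_list = []
    for _ in range(num_iterations):
        loss = x @ theta - y                             # residuals, shape (m,)
        cost_list.append(np.sum(loss ** 2) / (2 * m))    # cost before this update
        gradient = x.T @ loss / m                        # average gradient, shape (n,)
        theta = theta - alpha * gradient
    return theta, cost_list


# Hypothetical sample data: y = 3 + 2 * feature plus Gaussian noise.
rng = np.random.default_rng(0)
features = rng.uniform(0, 10, size=50)
y = 3 + 2 * features + rng.normal(0, 1, size=50)

# Prepend a column of ones so theta[0] acts as the intercept (bias) term.
x = np.column_stack([np.ones_like(features), features])

# Defined at module level, so it is visible both in the call and afterwards.
num_iterations = 100
theta, cost_list = gradient_descent(x, y, np.zeros(2), 0.01, num_iterations)

# The x-axis can be derived from the cost history itself.
epochs = np.arange(len(cost_list))
```

With `matplotlib.pyplot` imported as `plt`, `plt.plot(epochs, cost_list, '-r')` draws the cost curve. `plt.plot(cost_list, '-r')` also works, because when only one sequence is given matplotlib uses the indices `0..N-1` as the x values.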