Rcently I'm working on a "searching system" and something about memory/cache performance confuse me. assume my machine info : x86 arch(L1-3 cache, 64 bytes cache line), linux OS

CPU reads 64 bytes(cache line) each time, so does CPU read data from memory address(to cache) always 64 multiple? For example 0x00(to 0x3F), 0x40(to 0x7f). If I need data(int32_t) located in 0x20 then system still need to load 0x00--0x3F.

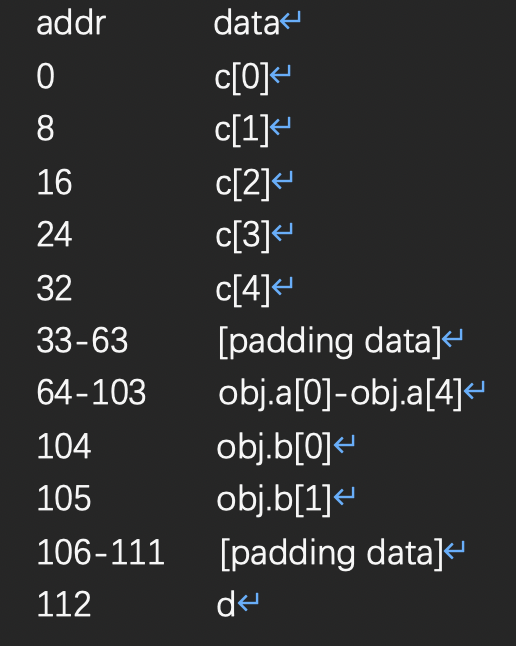

how about this case:

struct Obj{int64_t a[5];char b[2];}; then define

int64_t c[5]; Obj obj; int64_t d;

Will virtual memory (or also physical?) be organized like this?

CodePudding user response:

I think the part you might be missing is the alignment requirement that the compiler imposes for various types.

Integer types are generally aligned to a multiple of their own size (e.g. a 64-bit integer will be aligned to 8 bytes); so-called "natural alignment". This is not a strict architectural requirement of x86; unaligned loads and stores still work, but since they are less efficient, the compiler prefers to avoid them.

An aggregate, like a struct, is aligned according to the highest alignment requirement of its members, and padding will be inserted between members if needed to ensure that each one is properly aligned. Padding will also be added at the end so that the overall size of the struct is a multiple of its required alignment.

So in your example, struct Obj has alignment 8, and its size will be rounded up to 48 (with 6 bytes of padding at the end). So there is no need for 24 bytes of padding to be inserted after c[4] (I think you meant to write the padding at addresses 40-63); your obj can be placed at address 40. d can then be placed at address 88.

Note that none of this has anything to do with the cache line size. Objects are not by default aligned to cache lines, though "natural alignment" will ensure that no integer load or store ever has to cross a cache line.