I am running Airflow with Docker.

I want to query some data in my PostgreSQL database, which runs on my local host.

This is the connection code in my DAG:

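    import pandas as pd
    # psycopg2 is the driver named in the traceback; aliased to match db.connect below
    import psycopg2 as db

    def queryPostgresql():
        conn_string = "dbname='datawarehouse' host='localhost' user='postgres' password='admin'"
        conn = db.connect(conn_string)
        df = pd.read_sql("select name,city from test", conn)
        df.to_csv('data.csv')
        print("-------Data Saved------")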

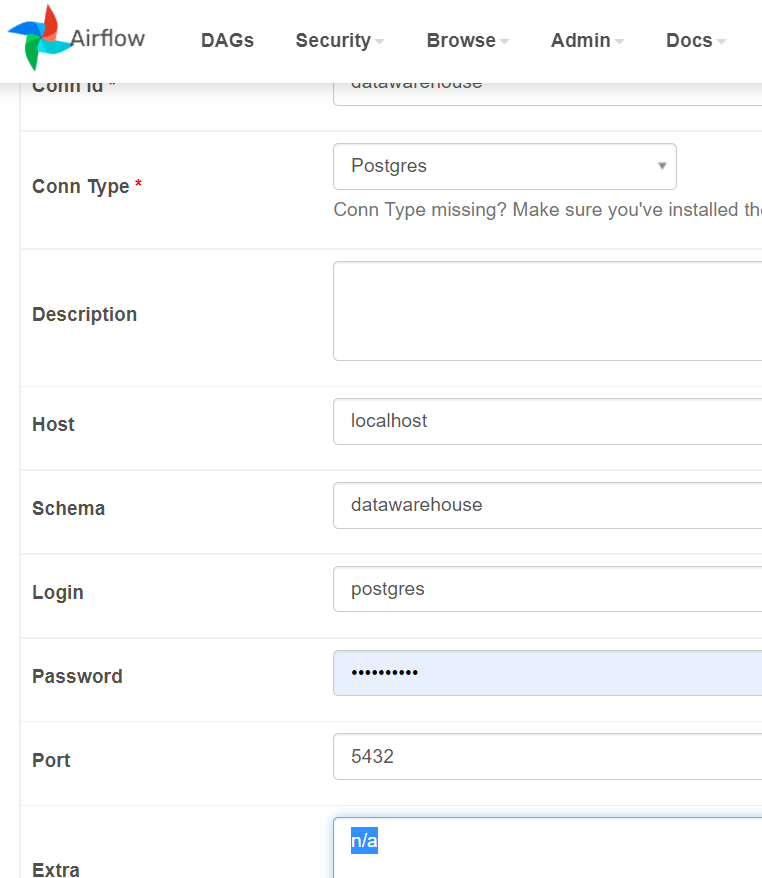

I have added a connection in Airflow, but the task fails with this error:

    psycopg2.OperationalError: could not connect to server: Connection refused
        Is the server running on host "localhost" (127.0.0.1) and accepting
        TCP/IP connections on port 5432?

Is it possible to query the PostgreSQL instance on my host from Airflow running in Docker? Or should I install PostgreSQL in Docker instead?

CodePudding user response:

When you use localhost inside Airflow, it refers to the Airflow container itself, so the connection goes to a Postgres server on that container, and none is running there. By default, localhost from a container does not route to the Docker host machine.
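To see this for yourself, a quick reachability check run from inside the Airflow container can help. This is only a rough sketch: `can_connect` is a hypothetical helper, not part of Airflow or psycopg2, and it only tests whether a TCP connection to the port can be opened, not whether Postgres accepts your credentials.

```python
import socket


def can_connect(host, port=5432, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the name and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and names that do not resolve.
        return False


if __name__ == "__main__":
    # Run inside the container to compare the candidate host names.
    for host in ("localhost", "host.docker.internal"):
        print(host, can_connect(host))
```

If `localhost` reports `False` here while Postgres is running on your machine, the container simply cannot see the host through that name.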

A couple of options:

- Connect to the Docker host with `host.docker.internal` instead of `localhost`
- Run Airflow and Postgres in a Docker Compose network and connect via container name (`psql` etc.)
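As a rough sketch of the first option, the function from the question could take its host from a setting instead of hard-coding `localhost`. The names `build_conn_string` and the `PG_HOST` environment variable are made up for illustration; they are not Airflow settings. Note that `host.docker.internal` resolves out of the box on Docker Desktop, while on Linux you would typically need to map it yourself (for example with `extra_hosts: "host.docker.internal:host-gateway"` in Compose), and Postgres may also need to listen on an address the container can reach.

```python
import os


def build_conn_string(host=None):
    """Build the connection string from the question with a configurable host."""
    # PG_HOST is a hypothetical environment variable used only in this sketch.
    host = host or os.environ.get("PG_HOST", "host.docker.internal")
    return f"dbname='datawarehouse' host='{host}' user='postgres' password='admin'"


def query_postgresql():
    # Imported here so build_conn_string can be used without the drivers installed.
    import pandas as pd
    import psycopg2 as db

    conn = db.connect(build_conn_string())
    try:
        df = pd.read_sql("select name, city from test", conn)
    finally:
        conn.close()
    df.to_csv("data.csv")
    print("-------Data Saved------")
```

For the second option, the same code would work with `PG_HOST` set to the Postgres service name in your Compose file.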

There are a few other methods involving hosts files etc., but the two above are likely your easiest options.