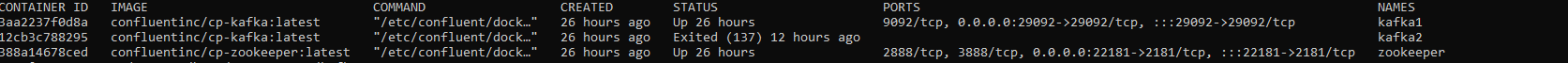

I have one ZooKeeper node and two Kafka brokers running in a Docker environment. All three start successfully, and producers and consumers can connect and send/receive data, but after a while (maybe a day later) one of the brokers stopped. Below are the last log lines from the stopped Kafka broker:

    [2021-10-14 16:15:23,553] INFO [GroupCoordinator 2]: Preparing to rebalance group console-consumer-95901 in state PreparingRebalance with old generation 1 (__consumer_offsets-35) (reason: removing member consumer-console-consumer-95901-1-66524e7c-561d-49f8-882e-93e5ee9732fa on heartbeat expiration) (kafka.coordinator.group.GroupCoordinator)
    [2021-10-14 16:15:23,553] INFO [GroupCoordinator 2]: Group console-consumer-95901 with generation 2 is now empty (__consumer_offsets-35) (kafka.coordinator.group.GroupCoordinator)
    [2021-10-14 16:23:09,577] INFO [GroupMetadataManager brokerId=2] Group console-consumer-95901 transitioned to Dead in generation 2 (kafka.coordinator.group.GroupMetadataManager)
    [2021-10-15 02:04:23,654] WARN Client session timed out, have not heard from server in 15654ms for sessionid 0x10005a177990003 (org.apache.zookeeper.ClientCnxn)
    [2021-10-15 02:05:35,005] INFO Client session timed out, have not heard from server in 15654ms for sessionid 0x10005a177990003, closing socket connection and attempting reconnect (org.apache.zookeeper.ClientCnxn)

And these are the last log lines from ZooKeeper:

    [2021-10-15 02:04:54,812] INFO Expiring session 0x10005a177990003, timeout of 18000ms exceeded (org.apache.zookeeper.server.ZooKeeperServer)
    [2021-10-15 02:05:28,649] INFO Expiring session 0x10005a177990002, timeout of 18000ms exceeded (org.apache.zookeeper.server.ZooKeeperServer)
    [2021-10-15 02:07:27,106] WARN CancelledKeyException causing close of session 0x10005a177990002 (org.apache.zookeeper.server.NIOServerCnxn)
    [2021-10-15 02:14:44,252] INFO Invalid session 0x10005a177990002 for client /172.18.0.3:36926, probably expired (org.apache.zookeeper.server.ZooKeeperServer)

I don't fully understand what happened, but it looks like the broker was no longer able to communicate with ZooKeeper for some reason.

Below is my docker-compose file:

    version: '3.0'

    services:
      zookeeper-1:
        image: confluentinc/cp-zookeeper:latest
        container_name: zookeeper
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
        ports:
          - 22181:2181

      kafka1:
        image: confluentinc/cp-kafka:latest
        container_name: kafka1
        depends_on:
          - zookeeper-1
        ports:
          - 29092:29092
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: zookeeper-1:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka1:9092,PLAINTEXT_HOST://my-hostname-here:29092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          KAFKA_HEAP_OPTS: -Xmx512M -Xms512M

      kafka2:
        image: confluentinc/cp-kafka:latest
        container_name: kafka2
        depends_on:
          - zookeeper-1
        ports:
          - 29093:29093
        environment:
          KAFKA_BROKER_ID: 2
          KAFKA_ZOOKEEPER_CONNECT: zookeeper-1:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka2:9092,PLAINTEXT_HOST://my-hostname-here:29093
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
          KAFKA_HEAP_OPTS: -Xmx512M -Xms512M

Below is the status of the containers:

CodePudding user response:

In the docker ps output, you can see that the stopped container has exit code 137.
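Exit codes above 128 conventionally mean "128 plus the number of the signal that terminated the process". A minimal sketch of that arithmetic, assuming a Linux host (the helper name `describe_exit_code` is made up for illustration):

```python
import signal


def describe_exit_code(code: int) -> str:
    """Explain an exit code using the 128 + signal-number convention."""
    if code > 128:
        signum = code - 128
        try:
            # Map the signal number to its symbolic name, e.g. 9 -> SIGKILL.
            name = signal.Signals(signum).name
        except ValueError:
            # Unknown signal number on this platform.
            name = f"signal {signum}"
        return f"terminated by {name}"
    return f"exited with status {code}"


print(describe_exit_code(137))  # on Linux: terminated by SIGKILL
```

So 137 is 128 + 9: the process received SIGKILL rather than shutting down on its own.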

This is typically an OOMKilled code: the process was killed because it ran out of memory, which means the container needs more memory.
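To confirm that the kill really was an out-of-memory kill, you can look at the State section of the docker inspect output, which contains an OOMKilled flag alongside the exit code. A small sketch that reads that flag from the JSON; the sample string below is a hypothetical, trimmed-down stand-in for the real output, and `was_oom_killed` is an illustrative name:

```python
import json


def was_oom_killed(inspect_output: str) -> bool:
    """Return True if `docker inspect` JSON reports State.OOMKilled for the first container."""
    containers = json.loads(inspect_output)  # docker inspect prints a JSON array
    return bool(containers[0]["State"]["OOMKilled"])


# Hypothetical, heavily trimmed sample of `docker inspect kafka2` output.
sample = '[{"Name": "/kafka2", "State": {"Status": "exited", "OOMKilled": true, "ExitCode": 137}}]'

print(was_oom_killed(sample))  # True
```

In practice the string would come from running docker inspect against the stopped container. If the flag is false, the SIGKILL may have another cause and is worth investigating separately.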

I suggest you remove KAFKA_HEAP_OPTS from both brokers so that the JVM is limited only by the container's full available memory.