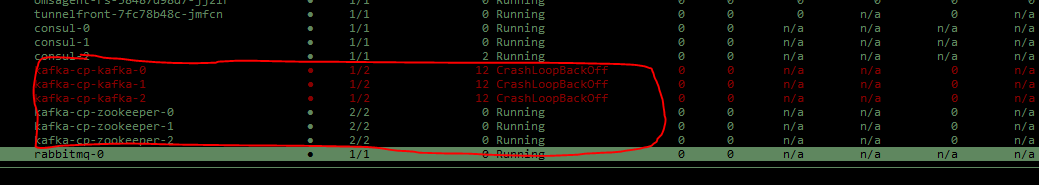

I have a set of Kubernetes pods (Kafka). They were created by Terraform, but somehow they "fell" out of the state (Terraform no longer recognizes them), and they are misconfigured (I don't need them anymore anyway).

Now I want to remove the pods from the cluster completely. The main problem is that even after I kill/delete them, they keep being recreated/restarting.

I tried:

kubectl get deployments --all-namespaces

and then deleted the namespace the pods were in with

kubectl delete -n <NS> deployment <DEPLOY>

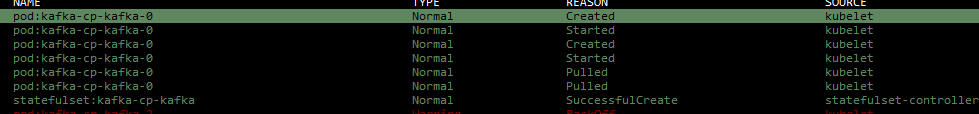

This namespace got removed correctly. Still, if I now try to remove/kill the pods (forced and with cascade) they still re-appear. In the events, I can see they are re-created by kubelet but I don't know why nor how I can stop this behavior.
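A quick way to find out what keeps recreating a pod is to read its `metadata.ownerReferences`. The sketch below is a hypothetical helper (`pod_owner` is not a kubectl command) that prints each owner as `Kind/Name` using only `kubectl get` with a JSONPath template. An owner such as `StatefulSet/...` or `ReplicaSet/...` points at the controller to delete; an owner of kind `Node` usually indicates a static (mirror) pod that the kubelet recreates from a manifest file on that node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the owner(s) of a pod as Kind/Name, one per line.
# Prints nothing if the pod has no ownerReferences (a "bare" pod).
# Usage: pod_owner <namespace> <pod-name>
pod_owner() {
  local ns="$1" pod="$2"
  kubectl get pod "$pod" -n "$ns" \
    -o jsonpath='{range .metadata.ownerReferences[*]}{.kind}/{.name}{"\n"}{end}'
}
```

For example, `pod_owner <NS> <POD>` run against one of the recreated pods should name the object responsible for bringing it back.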

I also checked

kubectl get jobs --all-namespaces

But no resources were found. I also checked

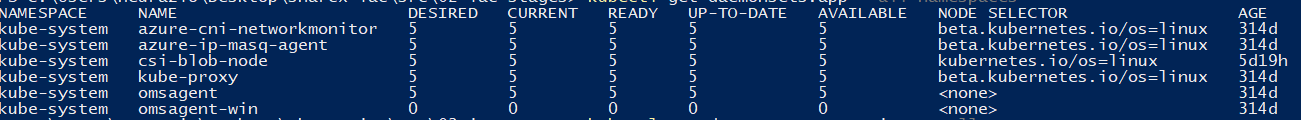

kubectl get daemonsets.apps --all-namespaces

kubectl get daemonsets.extensions --all-namespaces

But I don't think either of those is relevant to the Kafka deployment.
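Instead of checking each controller type one by one, the built-in kinds that create pods can be listed in a single `kubectl get` call and filtered by name. This is a minimal sketch: `find_pod_controllers` is a hypothetical helper name, and it only covers built-in controllers, not custom resources reconciled by an operator (which some Kafka installations use).

```shell
#!/usr/bin/env bash
# Hypothetical helper: list every built-in controller that can (re)create pods,
# across all namespaces, keeping only lines that match a pattern (case-insensitive).
# Usage: find_pod_controllers <pattern>
find_pod_controllers() {
  local pattern="$1"
  kubectl get deployments,replicasets,statefulsets,daemonsets,jobs,cronjobs,replicationcontrollers \
    --all-namespaces --no-headers | grep -i -- "$pattern"
}
```

Running `find_pod_controllers kafka` would then show, in one list, any controller whose name (or namespace) contains "kafka".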

What else can I try to remove those pods? Any help is welcome.

Answer:

Ok, I was able to find the root cause.

With:

kubectl get all --all-namespaces

I looked up everything related to the name of the pods. In this case, I found related services. After I deleted those services, the pods were no longer recreated.

I still don't think this is a good solution ("just delete everything that has the same name"...), and I would be happy if someone could suggest a better way to resolve this.
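A more targeted approach than deleting everything with a matching name is to follow the owner chain from the pod up to its top-level object (for example Pod → ReplicaSet → Deployment, or Pod → StatefulSet) and delete only that object. The helper below is a hypothetical sketch: it follows only the first owner reference at each level and assumes kubectl accepts the owner's `Kind` as a resource type, which it normally does.

```shell
#!/usr/bin/env bash
# Hypothetical helper: follow metadata.ownerReferences upward until an object
# has no owner, then print that top-level object as Kind/Name.
# Usage: top_owner <namespace> <kind> <name>   e.g. top_owner kafka pod kafka-0
top_owner() {
  local ns="$1" kind="$2" name="$3" owner
  # Loop while kubectl succeeds and returns a non-empty owner ("/" means none).
  while owner="$(kubectl get "$kind" "$name" -n "$ns" \
          -o jsonpath='{.metadata.ownerReferences[0].kind}/{.metadata.ownerReferences[0].name}')" \
        && [ -n "$owner" ] && [ "$owner" != "/" ]; do
    kind="${owner%%/*}"   # part before the first slash
    name="${owner#*/}"    # part after the first slash
  done
  printf '%s/%s\n' "$kind" "$name"
}
```

For example, if `top_owner <NS> pod <POD>` prints `StatefulSet/kafka`, that value can be passed to `kubectl delete -n <NS>` as a TYPE/NAME argument, leaving unrelated objects such as Services untouched.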

Answer:

To me they look like a StatefulSet. Did you also try the following command?

kubectl get statefulset --all-namespaces
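If the pods do belong to a StatefulSet, deleting the StatefulSet removes its pods through cascading deletion, while PersistentVolumeClaims created from its `volumeClaimTemplates` are retained by default and must be deleted separately if they are no longer needed. A sketch, assuming the StatefulSet names contain a known pattern such as `kafka`; both function names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<namespace> <name>" for every StatefulSet whose
# name (not namespace) matches a pattern, case-insensitively.
find_statefulsets() {
  kubectl get statefulsets --all-namespaces --no-headers \
    -o custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name \
    | awk -v p="$1" 'tolower($2) ~ tolower(p)'
}

# Hypothetical helper: delete every matching StatefulSet. Its pods go away via
# cascading deletion; PVCs from volumeClaimTemplates are kept by default.
delete_statefulsets() {
  find_statefulsets "$1" | while read -r ns name; do
    kubectl delete statefulset "$name" -n "$ns"
  done
}
```

Running `find_statefulsets kafka` first shows what would be removed before `delete_statefulsets kafka` actually deletes anything.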