I'm running microservices on GKE and using skaffold for management.

Everything works fine for a week and suddenly all services are killed (not sure why).

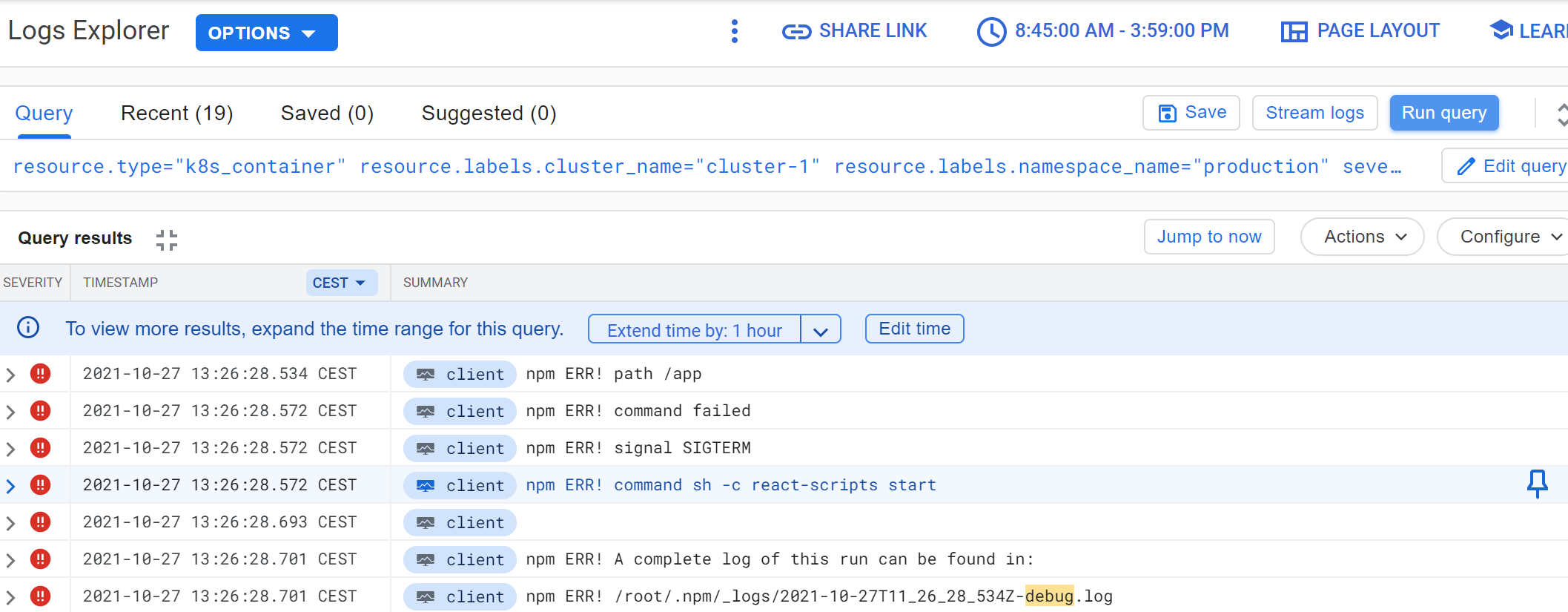

Logging shows this same info for all services:

There is no indication that something is going wrong in the logs before all services fail. It looks like the pods are all killed at the same time by GKE for whatever reason.

What confuses me is why the services do not restart.

kubectl describe pod auth shows an "ImagePullBackOff" error.

When I simulate this situation on the test system (same setup) by deleting a pod manually, all services restart just fine.

To deploy the microservices, I use skaffold.

---deployment.yaml for one of the microservices---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: auth-depl
  namespace: development
spec:
  replicas: 1
  selector:
    matchLabels:
      app: auth
  template:
    metadata:
      labels:
        app: auth
    spec:
      volumes:
        - name: google-cloud-key
          secret:
            secretName: pubsub-key
      containers:
        - name: auth
          image: us.gcr.io/XXXX/auth
          volumeMounts:
            - name: google-cloud-key
              mountPath: /var/secrets/google
          env:
            - name: NATS_CLIENT_ID
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: NATS_URL
              value: 'http://nats-srv:4222'
            - name: NATS_CLUSTER_ID
              value: XXXX
            - name: JWT_KEY
              valueFrom:
                secretKeyRef:
                  name: jwt-secret
                  key: JWT_KEY
            - name: GOOGLE_APPLICATION_CREDENTIALS
              value: /var/secrets/google/key.json

Any idea why the microservices don't restart? Again, they run fine after deploying them with skaffold and also after simulating pod shutdown on the test system ... what changed here?

---- Update 2021.10.30 -------------

After some further digging in the Cloud Logs Explorer, I figured out that the pod is trying to pull the previously built image but fails. If I pull the image manually in the cloud console, using the image name plus tag as listed in the logs, it works just fine (so the image is there).

The log gives the following reason for the error:

Failed to pull image "us.gcr.io/scout-productive/client:v0.002-72-gaa98dde@sha256:383af5c5989a3e8a5608495e589c67f444d99e7b705cfff29e57f49b900cba33": rpc error: code = NotFound desc = failed to pull and unpack image "us.gcr.io/scout-productive/client@sha256:383af5c5989a3e8a5608495e589c67f444d99e7b705cfff29e57f49b900cba33": failed to copy: httpReaderSeeker: failed open: could not fetch content descriptor sha256:4e9f2cdf438714c2c4533e28c6c41a89cc6c1b46cf77e54c488db30ca4f5b6f3 (application/vnd.docker.image.rootfs.diff.tar.gzip) from remote: not found"

Where does the "sha256:4e9f2cdf438714c2c4533e28c6c41a89cc6c1b46cf77e54c488db30ca4f5b6f3" that the error message says cannot be found come from?

Any help/pointers are much appreciated!

Thanks

CodePudding user response:

ImagePullBackOff means that Kubernetes could not download the image from the registry. Either the image with that name/tag doesn't exist, or the credentials for the registry are wrong or expired.

From what I see in your deployment.yaml, no registry credentials are provided at all. You can supply them with imagePullSecrets. I have never used Skaffold, but my assumption is that you log in to the private registry through Skaffold and use it to deploy the images, so when Kubernetes tries to re-download the image from the registry by itself, it fails for lack of authorization.

I can propose two solutions:

1. ImagePullSecret

You can create a Secret resource which contains the credentials for the private registry, then reference that Secret in the deployment.yaml. That way Kubernetes can authenticate against your private registry and pull the images.

https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
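
As a rough sketch of what that could look like in the deployment from the question: the Secret name gcr-pull-secret is hypothetical, and it is assumed that a Secret of type kubernetes.io/dockerconfigjson with that name already exists in the same namespace. Only the relevant fields are shown.

```yaml
# Fragment of deployment.yaml; unrelated fields omitted.
spec:
  template:
    spec:
      imagePullSecrets:
        - name: gcr-pull-secret   # hypothetical Secret holding the registry credentials
      containers:
        - name: auth
          image: us.gcr.io/XXXX/auth
```

The field lives on the pod spec, next to containers, not on the individual container.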

2. ImagePullPolicy

You can prevent re-downloading your images by setting imagePullPolicy: IfNotPresent in the deployment.yaml. Kubernetes will then reuse the image which is already available locally on the node. This method requires defining image tags properly. For example, using it with the :latest tag can mean the "newest latest" image is never pulled, because from the cluster's perspective it already has an image with that tag, so it won't download a new one.

https://kubernetes.io/docs/concepts/containers/images/#image-pull-policy
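
A minimal sketch of where that setting goes, reusing the container from the question's deployment (only the relevant fields are shown):

```yaml
# Fragment of the pod template's container list in deployment.yaml.
containers:
  - name: auth
    image: us.gcr.io/XXXX/auth
    imagePullPolicy: IfNotPresent   # pull only when the node has no local copy
```

Keep in mind that this only helps while the image is still cached on the node. A pod that gets scheduled onto a fresh node still has to pull from the registry.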

To work out why the pods are being killed, can you share the Kubernetes events from around the time it happens? Running kubectl -n NAMESPACE get events may give more information.

CodePudding user response:

When Skaffold causes an image to be built, the image is pushed to a repository (us.gcr.io/scout-productive/client) using a tag generated with your specified tagging policy (v0.002-72-gaa98dde; this tag looks like the result of using Skaffold's default gitCommit tagging policy). The remote registry returns the image digest of the received image, a SHA256 value computed from the image metadata and image file contents (sha256:383af5c5989a3e8a5608495e589c67f444d99e7b705cfff29e57f49b900cba33). The image digest is unique like a fingerprint, whereas a tag is just a name → image digest mapping, which may be updated to point to a different image digest.

When deploying your application, Skaffold rewrites your manifests on-the-fly to reference the specific built container images by using both the generated tag and the image digest. Container runtimes ignore the image tag when an image digest is specified since the digest identifies a unique image.
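
To illustrate, here is roughly what the rewritten image field looks like. The deployed reference is copied from the error message in the question. The original, unrewritten form in the comment and the container name client are assumptions based on the pattern of the auth deployment.

```yaml
# In the source manifest (assumed):
#   image: us.gcr.io/scout-productive/client
# As deployed after Skaffold's rewrite (tag and digest together):
containers:
  - name: client   # assumed container name
    image: us.gcr.io/scout-productive/client:v0.002-72-gaa98dde@sha256:383af5c5989a3e8a5608495e589c67f444d99e7b705cfff29e57f49b900cba33
```

This matches the second half of the error message, where the runtime has dropped the tag and pulls us.gcr.io/scout-productive/client@sha256:383af5c5... by digest only.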

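So the fact that this specific image cannot be pulled (the registry reports one of its layers, sha256:4e9f2cdf438714c2c4533e28c6c41a89cc6c1b46cf77e54c488db30ca4f5b6f3, as not found) suggests that the image has been deleted from your repository. Are you, or someone on your team, deleting images?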

Some people run image garbage collectors to delete old images. Skaffold users often do this to delete the interim images generated by skaffold dev between dev loops. But some of these collectors are indiscriminate, for example keeping only images tagged with latest. Since Skaffold tags images using a configured tagging policy, such collectors can delete your Skaffold-built images. To avoid problems, either tune your collector (e.g., to only delete untagged images), or have Skaffold build your dev and production images to different repositories. For example, GCP's Artifact Registry allows having multiple independent repositories in the same project, and your GKE cluster can access all such repositories.
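
One possible way to split repositories is Skaffold's default-repo setting, which can be set globally and overridden per kube-context. The sketch below assumes the layout of Skaffold's global config file as I understand it. The project, repository, and context names are all hypothetical placeholders, so check them against your own setup and the Skaffold docs before relying on this.

```yaml
# ~/.skaffold/config (Skaffold's per-user global configuration)
global:
  default-repo: us-docker.pkg.dev/my-project/dev-images      # hypothetical dev repository
kubeContexts:
  - kube-context: gke_my-project_us-central1_prod            # hypothetical production context
    default-repo: us-docker.pkg.dev/my-project/prod-images   # hypothetical production repository
```

With something like this in place, a garbage collector can be pointed at the dev repository only, leaving the images your production cluster depends on untouched.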