I'm working with prometheus to scrape k8s service metrics.

I created a service monitor for my service as below :

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

name: scmpoll-service-monitor-{{ .Release.Name }}

namespace: {{ .Release.Namespace }}

labels:

app: scmpoll-{{ template "jenkins-exporter.name" . }}

chart: {{ template "jenkins-exporter.chart" . }}

release: {{ .Release.Name }}

heritage: {{ .Release.Service }}

spec:

namespaceSelector:

matchNames:

- {{ .Release.Namespace }}

selector:

matchLabels:

app: scmpoll-{{ template "jenkins-exporter.name" . }}

chart: {{ template "jenkins-exporter.chart" . }}

release: {{ .Release.Name }}

endpoints:

- interval: 1440m

targetPort: 9759

path: /metrics

port: http

I set interval: 1440m because I want prometheus to scrape data once a day.

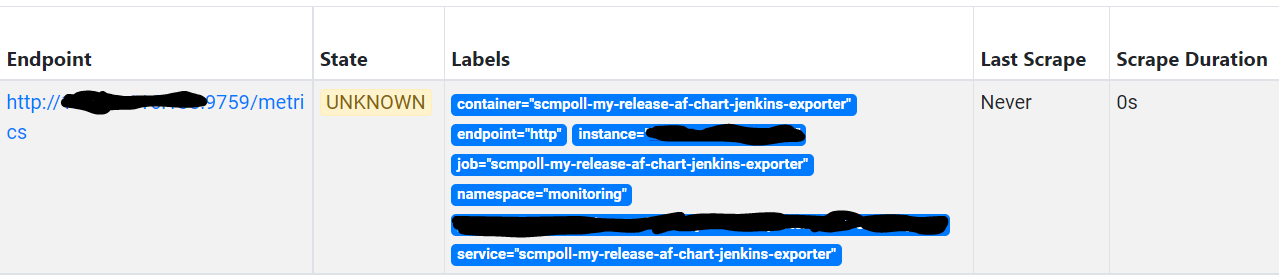

After deploying the chart, the service monitor was added to prometheus targets but with status unknown and scrape duration 0s. The screenshot below:

Also I have the service monitor added to prometheus config:

- job_name: monitoring/scmpoll-service-monitor-my-release/0

honor_timestamps: true

scrape_interval: 1d

scrape_timeout: 10s

metrics_path: /metrics

scheme: http

I can see that scrape-intervel is set to 1d (24h) but the state is unknown in the screenshot above. Do I have to wait for the next 24h and check or does this mean the configuration is wrong?

Test : i made test with scrape 20minutes and it was the same thing : status unknow with no metrics until the 20 minutes passed and status became UP and metrics were scrapped.

I'm not working on prometheus-operator chart, it's an independent chart.

CodePudding user response:

It's not recommended to use Prometheus for such long scrape_interval. 2 minutes is suggested by many. Read this for details- Staleness.

If you want to scrape data with interval exceeding 2 minutes, you can use VictoriaMetrics. It supports time series with arbitrary long scrape intervals.

CodePudding user response:

As mentioned, in general it's not advisable to use scrape intervals of more than 2 minutes in Prometheus (e.g. see here). This is due to the default staleness period of 5 minutes, which means that a scrape interval of 2 minutes allows for one failed scrape without the metrics being treated as stale.

There's nothing wrong with scraping a target more often. So, you can leave the scrape interval at e.g. 60 seconds, even if the metrics don't change often.

If you can't scrape the target so often for some reason, you can use a Pushgateway. The target pushes its metrics to the Pushgateway at its own pace (e.g. once per day) and Prometheus scrapes the metrics from the Pushgateway in its own interval (e.g. every 60 seconds).