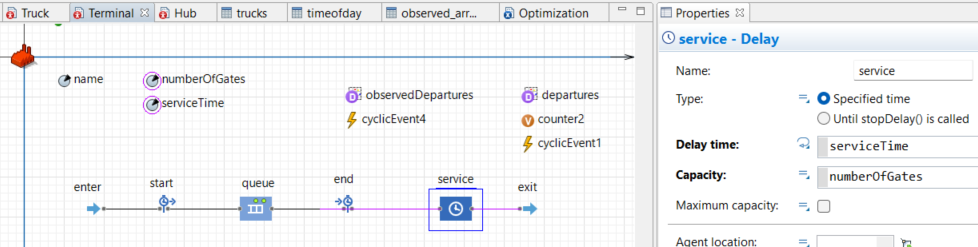

In my AnyLogic model I have a population of agents (4 terminals) where trucks arrive, are served, and depart. The terminals have two parameters (numberOfGates and servicetime) which influence the number of trucks departing from each terminal per hour. I want to tune these two parameters so that the simulated departures per hour are as close as possible to reality (I know the actual departures per hour). Each terminal agent already has two datasets: departures, with the number of departures per hour that I simulate, and observedDepartures, with the observed values from the data.

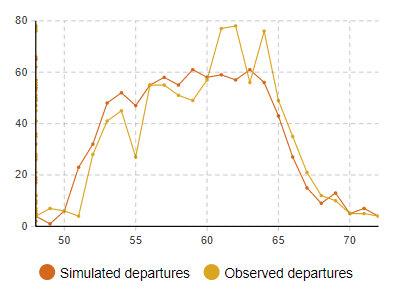

I already compare these two datasets in plots for every terminal:

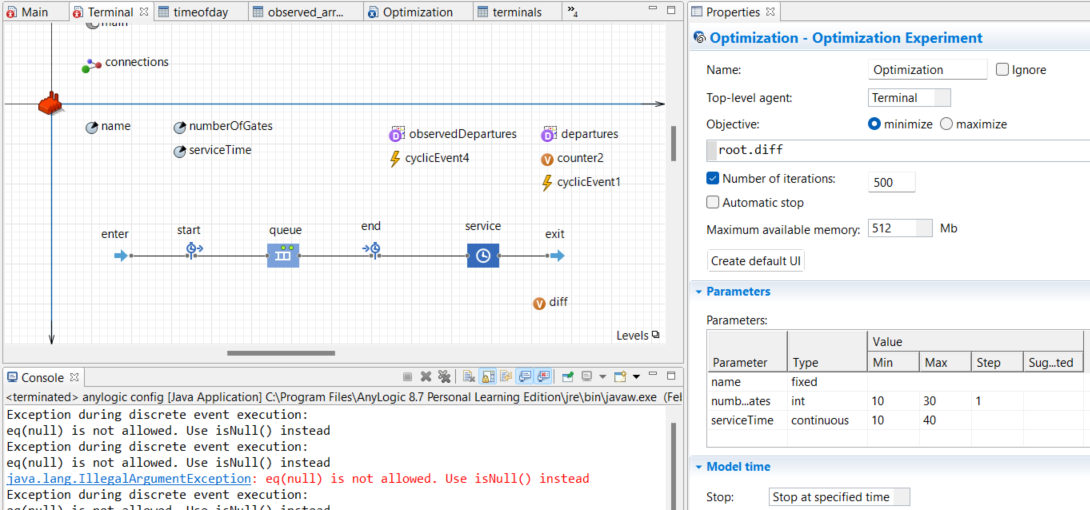

Now I want to create an optimization experiment that tunes the numberOfGates and servicetime of the terminals so that the departures dataset is as close as possible to the observedDepartures dataset. Does anyone know the easiest way to create an objective function for this optimization experiment?

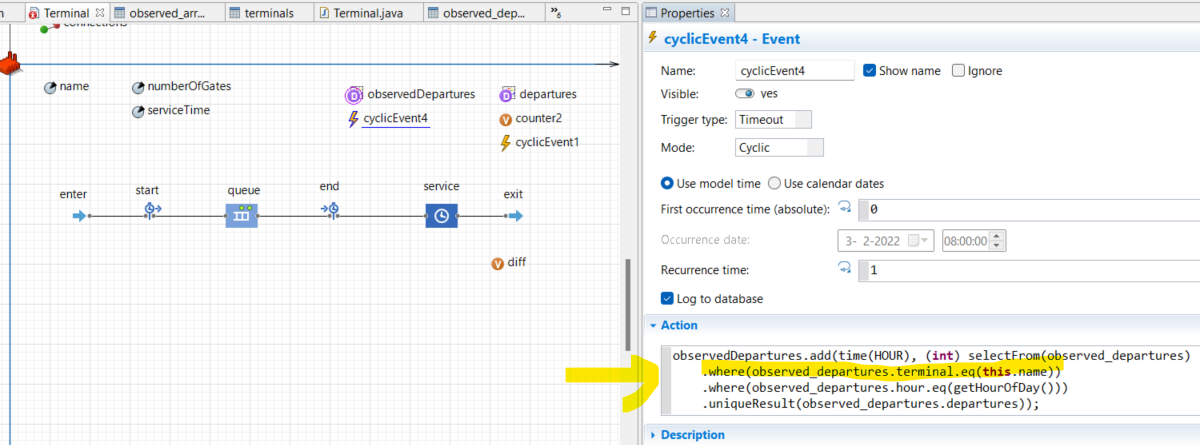

When I add a variable diff that is updated every hour with abs(departures - observedDepartures) and put root.diff in the optimization experiment, I get the error "eq(null) is not allowed. Use isNull() instead" on a line that reads observedDepartures from the database (see last picture). The model works when I run the simulation normally; the error only appears when I run the optimization experiment, and I don't know why.

CodePudding user response:

You can use the sum of the absolute differences for each replication. That is, create a variable that logs the absolute difference |departures - observedDepartures| for each hour, and call it diff. Then, in the optimization experiment, minimize the sum of that variable. This is close to a typical regression model's objective, which uses a slightly more complex function: it minimizes the sum of the squared differences.
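As a rough illustration of the two objectives mentioned above, here is a minimal plain-Java sketch. It assumes the hourly values have already been copied into arrays of equal length; the class name `FitError` and both method names are hypothetical and are not part of AnyLogic.

```java
// Hypothetical helper, not an AnyLogic API: compares two hourly series
// that are assumed to cover the same hours in the same order.
final class FitError {

    // Sum of absolute hourly differences: sum over hours of |simulated - observed|.
    static double sumAbs(double[] simulated, double[] observed) {
        requireSameLength(simulated, observed);
        double total = 0.0;
        for (int i = 0; i < simulated.length; i++) {
            total += Math.abs(simulated[i] - observed[i]);
        }
        return total;
    }

    // Sum of squared hourly differences, as in a least-squares regression objective.
    static double sumSquared(double[] simulated, double[] observed) {
        requireSameLength(simulated, observed);
        double total = 0.0;
        for (int i = 0; i < simulated.length; i++) {
            double gap = simulated[i] - observed[i];
            total += gap * gap;
        }
        return total;
    }

    private static void requireSameLength(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Series must have the same number of hours");
        }
    }

    public static void main(String[] args) {
        double[] simulated = {10, 12, 9, 15};
        double[] observed = {11, 12, 12, 13};
        System.out.println(sumAbs(simulated, observed));     // 1 + 0 + 3 + 2
        System.out.println(sumSquared(simulated, observed)); // 1 + 0 + 9 + 4
    }
}
```

The squared version penalizes a few large hourly misses more heavily than many small ones, which may or may not be what you want for the terminals.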

CodePudding user response:

A Calibration experiment already does what you are trying to do, in a more mathematically correct way: it uses the built-in difference function to calculate the 'area between two curves', which is what the optimisation tries to minimise. You don't need to calculate the differences yourself. There are two variants of the function: one compares two Data Sets (your case), the other compares a Data Set with a Table Function (useful if your empirical data is not at the same time points as your simulated data).

In your case the objective function needs to be the sum of the differences between the empirical and simulated datasets over the 4 terminals (or a weighted sum, if the fit for some terminals is considered more important than for others).

So your objective is something like

difference(root.terminals(0).departures, root.terminals(0).observedDepartures) +

difference(root.terminals(1).departures, root.terminals(1).observedDepartures) +

difference(root.terminals(2).departures, root.terminals(2).observedDepartures) +

difference(root.terminals(3).departures, root.terminals(3).observedDepartures)

(It would be better to calculate this for an arbitrary population of terminals in a function but this is the 'raw shape' of the code.)
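A sketch of what such a function might look like for any number of terminals, written as plain Java so it runs outside AnyLogic. Everything here is an assumption for illustration: the `Terminal` class is a stand-in for the terminal agent type, the per-terminal `weight` is hypothetical, and the local `difference` method is a simple placeholder (mean absolute gap), not AnyLogic's implementation. Inside AnyLogic you would loop over the terminals population and call the built-in difference on each terminal's two data sets instead.

```java
import java.util.List;

final class CalibrationObjectiveSketch {

    // Hypothetical stand-in for a terminal agent holding two hourly series.
    static final class Terminal {
        final double[] departures;         // simulated departures per hour
        final double[] observedDepartures; // observed departures per hour
        final double weight;               // assumed importance of this terminal's fit

        Terminal(double[] departures, double[] observedDepartures, double weight) {
            this.departures = departures;
            this.observedDepartures = observedDepartures;
            this.weight = weight;
        }
    }

    // Placeholder for the built-in difference function: mean absolute gap
    // between two series sampled at the same hours. NOT AnyLogic's algorithm.
    static double difference(double[] a, double[] b) {
        if (a.length != b.length || a.length == 0) {
            throw new IllegalArgumentException("Series must be non-empty and equally long");
        }
        double total = 0.0;
        for (int i = 0; i < a.length; i++) {
            total += Math.abs(a[i] - b[i]);
        }
        return total / a.length;
    }

    // Weighted sum of the per-terminal differences; use weight 1.0 for a plain sum.
    static double objective(List<Terminal> terminals) {
        double total = 0.0;
        for (Terminal t : terminals) {
            total += t.weight * difference(t.departures, t.observedDepartures);
        }
        return total;
    }
}
```

With a function like this on the top-level agent, the experiment's objective would reduce to a single call on root, and adding a fifth terminal would not require touching the objective.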

A Calibration experiment is actually just a wizard which creates an Optimization experiment set up in a particular way (with a UI and all settings/code already created for you), so you can just use that objective in your existing Optimization experiment (but it won't have a built-in useful UI like a Calibration experiment). This also means you can still set this up in the Personal Learning Edition too (which doesn't have the Calibration experiment).