This is my first question on this forum.

I am conducting experiments in which I measure the current-voltage curve of a device under different experimental conditions.

The different experimental conditions are encoded in a parameter K.

I measure the current I using back-and-forth voltage sweeps, with V varying from 0 V to 2 V, then from 2 V to -2 V, and then back to 0 V.

Measurements are repeated several times for each value of K to get an average of the current at each voltage point (backward and forward values). Each measurement is assigned a parameter named iter (varying from 0 to 3 for instance).

I have collected all the data into a pandas dataframe df. Below is code that produces a typical df (the real one is way too large):

import numpy as np
import pandas as pd

K_col = []
iter_col = []
V_col = []
I_col = []

niter = 3
V_val = [0, 1, 2, 1, 0, -1, -2, -1, 0]  # one back-and-forth sweep
K_val = [1, 2]

for K in K_val:
    for it in range(niter):
        for V in V_val:
            K_col.append(K)
            iter_col.append(it + 1)  # iterations are numbered from 1
            V_col.append(V)
            I_col.append((2*K + np.random.random())*V)

d = {'K': K_col, 'iter': iter_col, 'V': V_col, 'I': I_col}
df = pd.DataFrame(d)

I would like to compute the average value of I at each voltage and compare the impact of the experimental condition K.

For example let's look at 2 measurements conducted for K=1:

df[(df.K==1)&(df.iter.isin([1,2]))]

output:

    K  iter   V         I
0   1     1   0  0.000000
1   1     1   1  2.513330
2   1     1   2  4.778719
3   1     1   1  2.430393
4   1     1   0  0.000000
5   1     1  -1 -2.705487
6   1     1  -2 -4.235055
7   1     1  -1 -2.278295
8   1     1   0  0.000000
9   1     2   0  0.000000
10  1     2   1  2.535058
11  1     2   2  4.529292
12  1     2   1  2.426209
13  1     2   0  0.000000
14  1     2  -1 -2.878359
15  1     2  -2 -4.061515
16  1     2  -1 -2.294630
17  1     2   0  0.000000

We can see that for experiment 1 (iter=1), V passes through 0 several times (indexes 0, 4 and 8). I do not want to lose these distinct data points.

The first data point for I_avg should be (I[0]+I[9])/2, which corresponds to the first measurement at 0 V. The second data point should be (I[1]+I[10])/2, which corresponds to the avg_I measured at 1 V with V increasing, and so on up to (I[8]+I[17])/2, which would be my last data point at 0 V.

My first thought was to use the groupby() method with K and V as keys, but this would not work: V sweeps back and forth, so each measurement contains duplicate values of V, and the groupby would merge them into a single group per unique value of V.
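The collapse described here can be reproduced with a short sketch. It rebuilds a frame with the same layout as the one in the question; the fixed random seed is an assumption added only so the run is repeatable.

```python
import numpy as np
import pandas as pd

# Same layout as the question's df; the seed is an assumption for repeatability.
rng = np.random.default_rng(0)
V_val = [0, 1, 2, 1, 0, -1, -2, -1, 0]
rows = [
    {"K": K, "iter": it + 1, "V": V, "I": (2 * K + rng.random()) * V}
    for K in [1, 2]
    for it in range(3)
    for V in V_val
]
df = pd.DataFrame(rows)

# Grouping on K and V merges the forward and backward passes:
# each K keeps one row per unique V instead of one row per sweep point.
collapsed = df.groupby(["K", "V"], as_index=False)["I"].mean()
print(collapsed.groupby("K").size())
```

Each K comes back with 5 rows (the unique values of V) rather than the 9 points of the sweep, so the three passes through 0 V can no longer be told apart.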

The final dataframe I would like to have should look like this:

    K   V     avg_I
0   1   0  0.000000
1   1   1  2.513330
2   1   2  4.778719
3   1   1  2.430393
4   1   0  0.000000
5   1  -1 -2.705487
6   1  -2 -4.235055
7   1  -1 -2.278295
8   1   0  0.000000
9   2   0  0.000000
10  2   1  2.513330
11  2   2  4.778719
12  2   1  2.430393
13  2   0  0.000000
14  2  -1 -2.705487
15  2  -2 -4.235055
16  2  -1 -2.278295
17  2   0  0.000000

Would anyone have an idea of how to do this?

CodePudding user response:

To compute the mean while also taking into account the position of each observation within its sweep, you could add an extra column containing this information, like this:

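len_iter = 9  # number of points in one back-and-forth sweep
num_iter = len(df['iter'].unique())
num_K = len(df['K'].unique())

# np.tile repeats 0..8 once per sweep; this assumes the rows are stored sweep by sweep
df['index'] = np.tile(np.arange(len_iter), num_iter*num_K)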

Then group by K, V and this index and take the mean of I to get the desired result:

df.groupby(['K', 'V', 'index'])['I'].mean().reset_index().drop(['index'], axis=1)

    K   V         I
0   1  -2 -5.070126
1   1  -1 -2.598104
2   1  -1 -2.576927
3   1   0  0.000000
4   1   0  0.000000
5   1   0  0.000000
6   1   1  2.232128
7   1   1  2.359398
8   1   2  4.824657
9   2  -2 -9.031487
10  2  -1 -4.125880
11  2  -1 -4.350776
12  2   0  0.000000
13  2   0  0.000000
14  2   0  0.000000
15  2   1  4.535478
16  2   1  4.492122
17  2   2  8.569701
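The result above comes back sorted by V rather than in sweep order, and the sweep length is hard-coded. A possible variant, sketched here under the assumption that the rows of each (K, iter) measurement are stored in acquisition order, derives the position with cumcount() and groups on it directly. The column name pos and the fixed seed are illustrative choices, not part of the original answer.

```python
import numpy as np
import pandas as pd

# Same layout as the question's df; the seed is an assumption for repeatability.
rng = np.random.default_rng(0)
V_val = [0, 1, 2, 1, 0, -1, -2, -1, 0]
rows = [
    {"K": K, "iter": it + 1, "V": V, "I": (2 * K + rng.random()) * V}
    for K in [1, 2]
    for it in range(3)
    for V in V_val
]
df = pd.DataFrame(rows)

# Position of each point inside its own sweep (0..8), derived from the data
# instead of a hard-coded length. "pos" is a hypothetical column name.
df["pos"] = df.groupby(["K", "iter"]).cumcount()

# Average I over the repetitions at each sweep position. V is the same for
# every repetition at a given position, so "first" simply carries it along.
result = (
    df.groupby(["K", "pos"], as_index=False)
      .agg(V=("V", "first"), avg_I=("I", "mean"))
      .drop(columns="pos")
)
print(result)
```

Because the groups are sorted by K and then by position, each K keeps its nine rows in the order 0, 1, 2, 1, 0, -1, -2, -1, 0, which matches the layout requested in the question.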

CodePudding user response:

If I understand this correctly, you want a new data point that represents the average I for each value of V. We can achieve this by computing the average value of I for each V and then mapping it onto the full dataframe.

avg_I = df.groupby(['V'], as_index=False).mean()[['V', 'I']]
df['avg_I'] = df.apply(lambda x: float(avg_I['I'][avg_I['V'] == x['V']]), axis=1)
df.head()

output:

   K  iter  V     I  avg_I
0  1     1  0  0.00   0.00
1  1     1  1  2.34   3.55
2  1     1  2  4.54   6.89
3  1     1  1  2.02   3.55
4  1     1  0  0.00   0.00
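The same per-V average can probably be obtained without the row-wise apply by using groupby(...).transform, which broadcasts each group's mean back onto its rows. This is a sketch on a rebuilt frame with the question's layout (the seed is an assumption); it also avoids calling float() on a one-element Series, which recent pandas versions may warn about.

```python
import numpy as np
import pandas as pd

# Same layout as the question's df; the seed is an assumption for repeatability.
rng = np.random.default_rng(0)
V_val = [0, 1, 2, 1, 0, -1, -2, -1, 0]
rows = [
    {"K": K, "iter": it + 1, "V": V, "I": (2 * K + rng.random()) * V}
    for K in [1, 2]
    for it in range(3)
    for V in V_val
]
df = pd.DataFrame(rows)

# Mean of I over every row sharing the same V (all K, all iterations,
# both sweep directions), written back onto each row.
df["avg_I"] = df.groupby("V")["I"].transform("mean")
print(df.head())
```

Note that grouping on V alone pools both values of K and both sweep directions. If the averages should be kept separate per condition, 'K' can be added to the grouping keys.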

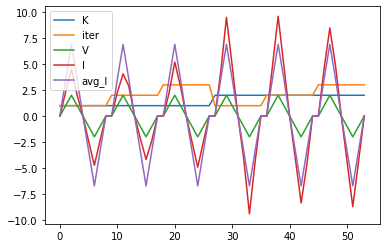

df.plot()