How to export large data from bigquery to apps scripts? I ve tried method BigQuery.TableData.list() but with large data it just doesnt work. Also i ve tried to do it with simple Query

SELECT * FROM table

But it returns only 70000 of 1.2M. How to export this data in json file with job?Or what is other solutions

CodePudding user response:

You may use BigQuery.Jobs.query() function to get your data and export it to JSON.

However, this function only retrieves ALL rows from one page of the query result.

For you to retrieve all other data from all pages of the query result, you will need to add the below while loop using pageToken for jobs.getQueryResults() parameter to loop and concatenate the retrieve rows per page as shown on below code. The jobs.getQueryResults() can only return up to 20MB that is why we will be needing to loop and concatenate through pages. You may refer to this

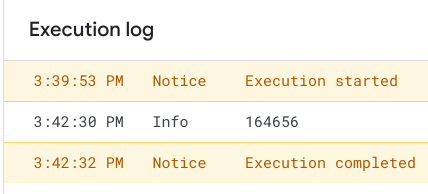

Below is the execution result of the code that is using the while loop. I was able to retrieve all 164656 out of 164656 total rows from the shakespeare table.

UPDATE: Please see below updated code:

function myFunction() {

var projectId = '<project_ID>';

var request = {

query: 'SELECT * '

'FROM `bigquery-public-data.census_bureau_usa.population_by_zip_2010`;',

useLegacySql: false

};

var max = 10000;

var queryResults = BigQuery.Jobs.query(request, projectId, {

maxResults: max

});

var jobId = queryResults.jobReference.jobId;

queryResults = BigQuery.Jobs.getQueryResults(projectId, jobId, {

maxResults: max

});

var rows = queryResults.rows;

let i = 0;

while (i < 5) {

queryResults = BigQuery.Jobs.getQueryResults(projectId, jobId, {

pageToken: queryResults.pageToken,

maxResults: max

});

i ;

rows = rows.concat(queryResults.rows);

}

console.log(rows.length);

}

I used the bigquery-public-data.census_bureau_usa.population_by_zip_2010 since it has 1.6M records to replicate the big data similar to your scenario. I also added an iteration in order to confirm that we are able to retrieve specific number of rows from a big dataset.

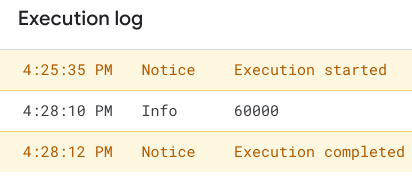

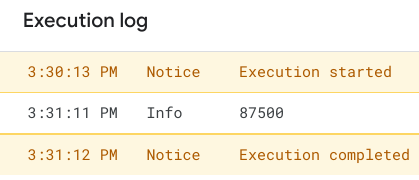

See below result of successful execution that was able to retrieve 60000 rows out of 1.6M records based on our loop condition.

However, when we remove the iteration and use the while (queryResults.pageToken) as done on my first answer, we will be encountering out of memory or runtime exit (when we use BigQuery.TableData.list() method) since it will get all rows from a big dataset in which App Script might not handle due to certain limits. You may see this App Script Quota Documentation for your reference.

I suggest to use a Virtual Machine where you can host your code and be able to handle big data retrieval.