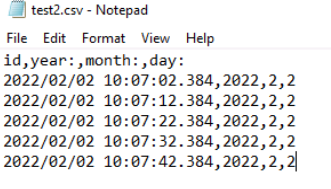

I receive a .csv export every 10 minutes that I'd like to import into a postgreSQL server. Working with a test csv, I got everything to work, but didn't take notice that my actual csv file has a forced ":" at the end of each column header (but not on the first header for some reason)(built into the back-end of the exporter, so I cant get it removed, already asked the company). So I added the ":"s to my test csv as shown in the link,

My insert into functions no longer work and give me syntax errors. First I'm trying to add them using the following code,

print("Reading file contents and copying into table...")

with open('C:\\Users\\admin\\Desktop\\test2.csv') as csvfile:

readCSV = csv.reader(csvfile, delimiter=',')

columns = next(readCSV) #skips the header row

query = 'insert into test({0}) values ({1})'

query = query.format(','.join(columns), ','.join('?' * len(columns)))

for data in readCSV:

cursor.execute(query, data)

con.commit()

Resulting in '42601' error near ":" in the second column header.

The results are the same while actually listing column headers and ? ? ?s out in the INSERT INTO section.

What is the syntax to get the script to accept ":" on column headers? If there's no way, is there a way to scan through headers and remove the ":" at the end of each?

CodePudding user response:

Because : is a special character, if your column is named year: in the DB, you must double quote its name --> select "year:" from test;

You are getting a PG error because you are referencing the unquoted column name (insert into test({0})), so add double quotes there.

query = 'insert into test("year:","day:", "etc:") values (...)'

That being said, it might be simpler to remove every occurrence of : in your csv's 1st line

CodePudding user response:

Much appreciated JGH and Adrian. I went with your suggestion to remove every occurrence of : by adding the following line after the first columns = ... statement

columns = [column.strip(':') for column in columns]

It worked well.