The below is the code for sandbox, - original obviously quite larger...

the main problem is, - I cannot figure out how to calc timedelta if LogOut was done after midnight, - however, the event should be treated as if it happened a day before...

Example, Log In was @ 22/03/2022 18:00:00, Log Out was @ 23/03/2022 01:00:00 The result should show time delta 7 hours, the Log Day - Tuesday, Date - 22/03/2022.

At the moment, I am thinking to create new Date column and offset 4 hours in it df['NewDate'] = (pd.to_datetime(df['LogDateTime']) - timedelta(hours=4)).dt.strftime('%d:%m:%Y') later split and merge using new Date, but I am not sure if it is the bes way to move forward...

Any ideas or directions would be very helpful... (thanks in advance)

import pandas as pd

data = [[515, '2022-03-16 17:01:11', 'In', 'Wed', '16/03/2022'],

[515, '2022-03-17 00:16:36', 'Out', 'Thu', '17/03/2022'],

[515, '2022-03-17 00:16:49', 'Out', 'Thu', '17/03/2022'],

[515, '2022-03-17 16:42:40', 'In', 'Thu', '17/03/2022'],

[515, '2022-03-17 23:48:09', 'Out', 'Thu', '17/03/2022'],

[333, '2022-03-17 17:16:36', 'In', 'Thu', '17/03/2022'],

[333, '2022-03-17 17:16:45', 'In', 'Thu', '17/03/2022'],

[333, '2022-03-17 17:16:51', 'In', 'Thu', '17/03/2022'],

[333, '2022-03-17 22:55:03', 'Out', 'Thu', '17/03/2022']]

df = pd.DataFrame(data, columns=['ID', 'LogDateTime', 'EventType', 'Log Day', 'Date'])

df.LogDateTime = pd.to_datetime(df.LogDateTime)

df.dtypes

df = df.sort_values(by=['LogDateTime', 'ID'])

dfIn = df.loc[(df.EventType == "In")]

dfOut = df.loc[(df.EventType == "Out")]

# dfIn = dfIn.drop_duplicates(subset=['ID', 'Date', 'Log Day'], keep='first') # Cannot be used due to midnight issue...

# dfOut = dfIn.drop_duplicates(subset=['ID', 'Date', 'Log Day'], keep='first') # Cannot be used due to midnight issue...

FinDF = pd.merge(dfIn, dfOut, on=['ID', 'Log Day', 'Date'], how='left')

FinDF.rename(columns={'LogDateTime_x': 'Log In', 'LogDateTime_y': 'Log Out'}, inplace=True)

FinDF['Hours'] = round((FinDF['Log Out'] - FinDF['Log In']).astype('timedelta64[m]') / 60, 2)

FinDF.to_excel(r'C:\Test\test.xlsx', index=False)

As per example above, data has quite a lot duplicated events, I tried to use max values in Out events and oposite in In events, - however due to midnight issue, - I loose data..

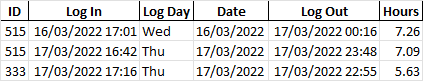

The end result should look like:

CodePudding user response:

Before you merge your DataFrames, you will have to keep only one the first "In" and last "Out" when there are consecutive occurrences. You can then continue on with your existing logic of merging the two dataframes and finding the time difference.

Try:

groups = ((df["ID"].eq(df["ID"].shift())&df["EventType"].ne(df["EventType"].shift()))|(df["ID"].ne(df["ID"].shift()))).cumsum()

#minimum timestamp to be used for EventType = "In"

mins = df.groupby(groups)["LogDateTime"].transform("min")

#maximum timestamp to be used for EventType = "In"

maxs = df.groupby(groups)["LogDateTime"].transform("max")

condensed = df[df["LogDateTime"].eq(mins.where(df["EventType"].eq("In"), maxs))].reset_index(drop=True)

condensed["Group"] = condensed.index//2

FinDF = condensed[condensed["EventType"].eq("In")].merge(condensed.loc[condensed["EventType"].eq("Out"), ["LogDateTime", "Group"]], on="Group").drop(["EventType", "Group"], axis=1)

FinDF = FinDF.rename(columns={'LogDateTime_x': 'Log In', 'LogDateTime_y': 'Log Out'})

FinDF['Hours'] = FinDF["Log Out"].sub(FinDF["Log In"]).dt.total_seconds().div(3600).round(2)

>>> FinDF

ID Log In Log Day Date Log Out Hours

0 333 2022-03-17 17:16:36 Thu 17/03/2022 2022-03-17 22:55:03 5.64

1 515 2022-03-16 17:01:11 Wed 16/03/2022 2022-03-17 00:16:49 7.26

2 515 2022-03-17 16:42:40 Thu 17/03/2022 2022-03-17 23:48:09 7.09