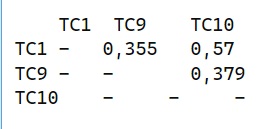

I have several test cases that I want to optimize by a similarity-based test case selection method using the Jaccard matrix. The first step is to choose a pair with the highest similarity index and then keep one as a candidate and remove the other one.

My question is: based on which strategy do you choose which of the two most similar test cases to remove? Size? Test coverage? Or something else? For example here TC1 and TC10 have the highest similarity. which one will you remove and why?

CodePudding user response:

It depends on why you're doing this, and a static code metric can only give you suggestions.

If you're trying to make the tests more maintainable, look for repeated test code and extract it into shared code. Two big examples are test setup and expectations.

For example, if you tend to do the same setup over and over again you can extract it into fixtures or test factories. You can share the setup using something like setup/teardown methods and shared contexts.

If you find yourself doing the same sort of code over and over again to test condition, extract that into a shared example like a test method or a matcher or a shared example. If possible, replace custom test code with assertions from an existing library.

Another metric is to find tests which are testing too much. If unit A calls units B and C, the test might do the setup for and testing of B and C. This is can be a lot of extra work, makes the test more complex, and makes the units interdependent.

Since all unit A cares about is whether their particular calls to B and C work, consider replacing the calls to B and C with mocks. This can greatly simplify test setup and test performance and reduces the scope of what you're testing.

However, be careful. If B or C changes A might break but the unit test for A won't catch that. You need to add integration tests for A, B and C together. Fortunately, this integration test can be very simple. It can trust that A, B, and C work individually, they've been unit tested, it only has to test that they work together.

If you're doing it to make the tests faster, first profile the tests to determine why they're slow.

Consider if parallelization would help. Consider if the code itself is too slow. Consider if the code is too interdependent and has to do too much work just to test one thing. Consider if you really have to write everything to a slow resource such as a disk or database, or if doing it in memory sometimes would be ok.

It's deceptive to consider tests redundant because they have similar code. Similar tests might test very, very different branches of the code. For example, something as simple as passing in 1 vs 1.1 to the same function might call totally different classes, one for integers and one for floats.

Instead, find redundancy by looking for similarity in what the tests cover. There's no magic percentage of similarity to determine if tests are redundant, you'll have to determine for yourself.

This is because just because a line of code is covered doesn't mean it is tested. For example...

def test_method

call_a

assert(call_b, 42)

end

Here call_a is covered, but it is not tested. Test coverage only tells you what HAS NOT been tested, it CANNOT tell you what HAS been tested.

Finally, test coverage redundancy is good. Unit tests, integration tests, acceptance tests, and regression tests can all cover the same code, but from different points of view.

All static analysis can offer you is candidates for redundancy. Remove redundant tests only if they're truly redundant and only with a purpose. Tests which simply overlap might be serve as regression tests. Many a time I've been saved when the unit tests pass, but some incidental integration test failed. Overlap is good.