I have a project for a trail camera that counts people entering a trail by detecting their faces. There's no mains power on site, so for power-consumption reasons I'm stuck with a Raspberry Pi 3 B. I'm currently using OpenCV with Haar cascades to detect faces. My problem is two-fold:

- The first is that my counter behaves more like a timer: it keeps incrementing the entire time it is locked on whatever it thinks is a face. I need it to increment only once per detection, and not again until that detection is lost and then re-acquired. This also has to work when multiple faces are detected.

- The second is that Haar cascades aren't great at detecting faces. I've been playing with the parameters but can't get a good result. I also tried other methods like dlib, but the frame rate makes them almost unusable on the Pi 3.

My code is below (cobbled together from a few examples). It is also set up to use threading, another experiment to squeeze out more performance. As far as I can tell the threading works, but it doesn't seem to improve anything. Any help with either the counter issue or optimizing Haar cascades on the Pi would be much appreciated. I should also note that I'm using the Raspberry Pi High Quality Camera with ArduCam lenses.

```python
from __future__ import print_function

from imutils.video.pivideostream import PiVideoStream
import argparse
import imutils
import time
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-c", "--cascade", type=str,
    default="haarcascade_frontalface_default.xml",
    help="path to haar cascade face detector")
args = vars(ap.parse_args())

detector = cv2.CascadeClassifier(args["cascade"])
size = 40
counter = 0

# create the threaded video stream
print("[INFO] using threaded frames")
vs = PiVideoStream().start()
time.sleep(2.0)  # let the camera sensor warm up

# loop over frames from the threaded stream
while True:
    # grab the frame from the threaded video stream and resize it
    # to a width of 450 pixels (trying a larger frame size)
    frame = vs.read()
    frame = imutils.resize(frame, width=450)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # perform face detection
    rects = detector.detectMultiScale(gray, scaleFactor=1.05,
        minNeighbors=6, minSize=(size, size),
        flags=cv2.CASCADE_SCALE_IMAGE)

    # loop over the bounding boxes
    for (x, y, w, h) in rects:
        # draw the face bounding box on the image
        cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
        # increment the counter when a face is found
        # (this runs on every frame the face is visible, hence the "timer" behavior)
        counter += 1
        print(counter)

    # show the output frame; press "q" to quit
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF
    if key == ord("q"):
        break

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()
```

Answer:

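First, you can use dlib as you said, but use its HOG (Histogram of Oriented Gradients) detector rather than the CNN one for performance. With the face_recognition library (which wraps dlib):

```python
import cv2
import face_recognition

# face_recognition expects RGB images, while OpenCV frames are BGR
rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
# returns a list of (top, right, bottom, left) boxes
locations = face_recognition.face_locations(rgb, model="hog")
```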

But if you really want faster performance, I'd recommend MediaPipe.

Install it on your Pi 3:

```shell
sudo pip3 install mediapipe-rpi3
```

Here is the example code from the MediaPipe documentation for face detection:

```python
import cv2
import mediapipe as mp

mp_face_detection = mp.solutions.face_detection
mp_drawing = mp.solutions.drawing_utils

# For webcam input:
cap = cv2.VideoCapture(0)
with mp_face_detection.FaceDetection(
        model_selection=0, min_detection_confidence=0.5) as face_detection:
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            print("Ignoring empty camera frame.")
            # If loading a video, use 'break' instead of 'continue'.
            continue

        # To improve performance, optionally mark the image as not writeable to
        # pass by reference.
        image.flags.writeable = False
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        results = face_detection.process(image)

        # Draw the face detection annotations on the image.
        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)
        if results.detections:
            for detection in results.detections:
                mp_drawing.draw_detection(image, detection)

        # Flip the image horizontally for a selfie-view display.
        cv2.imshow('MediaPipe Face Detection', cv2.flip(image, 1))
        if cv2.waitKey(5) & 0xFF == 27:
            break
cap.release()
```
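To reuse MediaPipe's output for counting, each detection's `location_data.relative_bounding_box` (with `xmin`, `ymin`, `width`, `height` given as fractions of the image size) has to be converted to pixel coordinates. A minimal sketch, assuming only that the box object exposes those four attributes; `to_pixel_box` is a hypothetical helper name:

```python
from types import SimpleNamespace


def to_pixel_box(rel_box, image_width, image_height):
    """Convert a relative box (fractions of the image) to integer (x, y, w, h) pixels.

    rel_box is assumed to expose xmin, ymin, width and height attributes, like
    detection.location_data.relative_bounding_box in MediaPipe face detection.
    The result is clamped to the image, since boxes near the edge can extend past it.
    """
    x1 = max(0, int(rel_box.xmin * image_width))
    y1 = max(0, int(rel_box.ymin * image_height))
    x2 = min(image_width, int((rel_box.xmin + rel_box.width) * image_width))
    y2 = min(image_height, int((rel_box.ymin + rel_box.height) * image_height))
    return (x1, y1, max(0, x2 - x1), max(0, y2 - y1))


if __name__ == "__main__":
    # Stand-in for a MediaPipe relative bounding box.
    box = SimpleNamespace(xmin=0.25, ymin=0.5, width=0.5, height=0.25)
    print(to_pixel_box(box, 640, 480))  # prints (160, 240, 320, 120)
```

Inside the loop above this would be called as `to_pixel_box(detection.location_data.relative_bounding_box, image.shape[1], image.shape[0])`, giving boxes in the same `(x, y, w, h)` form as OpenCV's `detectMultiScale`.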

I'm not sure what frame rate you'll get, though it should be faster than dlib and quite accurate. You can speed things up further by running detection on every third frame instead of on every frame.
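The every-third-frame idea can be sketched as a small wrapper that runs the detector only on every n-th frame and reuses the last result in between. This is a sketch under stated assumptions: `EveryNthFrameDetector` is a hypothetical helper, not part of OpenCV or MediaPipe, and `detect` is whatever function you already use to get a list of boxes from a frame.

```python
class EveryNthFrameDetector:
    """Run an expensive detector only on every n-th frame and reuse its last result.

    `detect` is any callable taking a frame and returning an iterable of boxes
    (for example a wrapper around detectMultiScale); it is an assumption here,
    not a library API.
    """

    def __init__(self, detect, every_n=3):
        if every_n < 1:
            raise ValueError("every_n must be >= 1")
        self.detect = detect
        self.every_n = every_n
        self.frame_index = 0
        self.last_boxes = []

    def __call__(self, frame):
        # Detect on frames 0, n, 2n, ...; otherwise return the cached boxes.
        if self.frame_index % self.every_n == 0:
            self.last_boxes = list(self.detect(frame))
        self.frame_index += 1
        return list(self.last_boxes)
```

Usage would look like `detect_faces = EveryNthFrameDetector(my_detect, every_n=3)` followed by `rects = detect_faces(gray)` each frame. If you match faces by position across detections, keep in mind that people move further between detections when frames are skipped, so any distance tolerance should grow accordingly.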

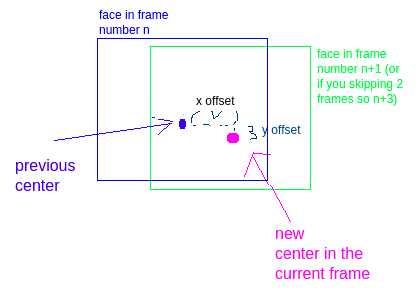

Second, for the counter, a naive approach will probably work fine: keep the centers of the bounding boxes from the last detection, and if a center from the previous frame falls inside a face's bounding box in the current frame, it's probably the same person, so don't count it again.
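A minimal sketch of that naive check, assuming boxes in OpenCV's `(x, y, w, h)` form as returned by `detectMultiScale`; the function names are hypothetical:

```python
def box_center(box):
    """Return the (cx, cy) center of an (x, y, w, h) box."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def contains(box, point):
    """True if point (px, py) lies inside box (x, y, w, h), edges included."""
    x, y, w, h = box
    px, py = point
    return x <= px <= x + w and y <= py <= y + h


def count_new_faces(prev_boxes, curr_boxes):
    """Count boxes in curr_boxes that contain none of the previous frame's centers.

    A current box containing a previous center is treated as the same person.
    """
    prev_centers = [box_center(b) for b in prev_boxes]
    return sum(1 for box in curr_boxes
               if not any(contains(box, c) for c in prev_centers))


if __name__ == "__main__":
    # Hypothetical detections over three frames: one person, then a second arrives.
    frames = [[(0, 0, 10, 10)], [(2, 2, 10, 10)], [(3, 3, 10, 10), (100, 100, 10, 10)]]
    counter, prev = 0, []
    for rects in frames:
        counter += count_new_faces(prev, rects)
        prev = rects
    print(counter)  # prints 2
```

In the question's loop, `counter += count_new_faces(prev, rects)` followed by `prev = rects` would replace the per-box `counter += 1`. Note that if a face is missed for even one frame, it is counted again when it reappears.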

A more accurate variant is to check whether the center of each face in the current frame (the center of its bounding box) is within some distance, a threshold you choose, of one of the centers from the last frame. If it is, it's probably the same face, so don't count it.
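Here is a minimal sketch of the distance-threshold approach that also handles several faces at once. `FaceCounter`, `max_distance` and `max_missed` are hypothetical names chosen for illustration. The `max_missed` grace period goes slightly beyond the approach described above: it keeps a face "alive" for a few frames so that a detector that drops a face briefly does not cause a double count. The 50-pixel default assumes frames around 450 pixels wide, as in the question, and will need tuning.

```python
import math


class FaceCounter:
    """Count each face once by matching box centers across frames.

    A detection whose center lies within max_distance pixels of a face tracked
    in the previous frame is treated as the same person and not counted again.
    A tracked face is forgotten once it goes unmatched for more than max_missed
    consecutive frames.
    """

    def __init__(self, max_distance=50.0, max_missed=5):
        self.max_distance = max_distance
        self.max_missed = max_missed
        self.tracks = []  # each track is [cx, cy, frames_missed]
        self.count = 0

    def update(self, boxes):
        """Feed one frame's (x, y, w, h) boxes; return the running total."""
        centers = [(x + w / 2.0, y + h / 2.0) for (x, y, w, h) in boxes]
        unmatched = list(range(len(self.tracks)))
        for cx, cy in centers:
            # Greedily pick the closest still-unmatched track within range.
            best, best_dist = None, self.max_distance
            for i in unmatched:
                tx, ty, _ = self.tracks[i]
                dist = math.hypot(cx - tx, cy - ty)
                if dist <= best_dist:
                    best, best_dist = i, dist
            if best is None:
                self.tracks.append([cx, cy, 0])  # new face: count it once
                self.count += 1
            else:
                unmatched.remove(best)
                self.tracks[best] = [cx, cy, 0]  # same face: update its position
        # Age tracks not seen this frame and drop those gone too long.
        for i in unmatched:
            self.tracks[i][2] += 1
        self.tracks = [t for t in self.tracks if t[2] <= self.max_missed]
        return self.count
```

In the question's loop you would create `face_counter = FaceCounter()` before the `while` loop and, instead of incrementing inside the `for` loop, call `counter = face_counter.update(rects)` once per frame. Matching is greedy (each detection takes the nearest free track), which should be enough for a handful of people on a trail.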


Hope it will work fine for you!