I would like to try out test-driven development, but the project I am working on involves a lot of randomness and I am very unsure about how I can test it. Here is a toy example of the kind of algorithm I may want to write:

Write a function taking no argument and returning a list of random integers satisfying the following properties

- Each integer is between 0 and 10

- The same number doesn’t appear twice

- The list is of length 3 90% of the time, and of length 4 10% of the time

- There is a 50% chance for the number 3 to appear

I do not need to test exact statistical distribution, but obviously I would like tests that will fail if someone completely removes the corresponding code.

I am using an external RNG that you can assume is correct, and I am pretty free in how to structure the code, so I can use dependency injection to have tests use a fake RNG instead, but I still don’t really see how that would help. For instance even if I always use the same seed for the tests, as soon as I refactor the algorithm to pick random numbers in a different order, all the tests become meaningless.

I guess that the first two points could be tested by generating many cases and checking that the constraints are satisfied, but that doesn’t really feel like TDD.

For the last two points, I’m thinking of having tests with different configurations, where for instance the 90% is either 100% or 0%, and then I can test if the length of the list is indeed 3 or 4. I guess it would work, but it seems maybe a bit weak.

Is there any guidelines or other techniques to use when using TDD to test algorithms involving randomness?

CodePudding user response:

There are several ways you can go about a problem like this, and I may add another answer in the future, but the approach that I immediately found most compelling would be to combine test-driven development (TDD) with property-based testing.

You can do this in many languages, with various frameworks. Here, I'm going to use the original property-based testing library,

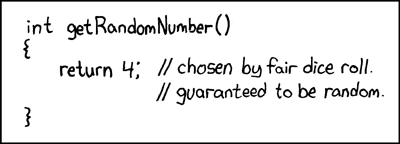

How would you reliably distinguish between this implementation and a "real" random number generator that just happens to be emitting a finite sequence of 4s before changing to some other number?

So we get to choose between stable tests that don't actually express all of our constraints or more precise tests that occasionally report incorrect results.

One design approach here it to lean into "testability" - behind the facade of our interface will be an implementation that combines a general purpose source of random bits with a deterministic function that maps a bit sequence to some result.

def randomListOfIntegers():

seed_bits = generalPurpose.random_bits()

return determisticFunction(seed_bits)

def deterministicFunction(seed_bits):

???

The claim being that randomListOfIntegers is "so simple that there are obviously no deficiencies", so we can establish its correctness by inspection, and concentrate our effort on the design of deterministicFunction.

Now, we run into a second problem: the mapping of seed_bits to some observable result is arbitrary. Most business domain problems (ex: a payroll system) have a single expected output for any given input, but in random systems you still have some extra degrees of freedom. If you write a function that produces an acceptable answer given any sequence of bits, then my function, which reverses the bits then calls your function, will also produce acceptable answers -- even though my answers and your answers are different.

In effect, if we want a suite of tests that alert when a code change causes a variation in behavior, then we have to invent the specification of the behavior that we want to lock in.

And unless we have a good guess as to which arbitrary behaviors will support a clean implementation, that can be pretty painful.

(Alternatively, we just lean on our pool of "acceptance" tests, which ignore code changes that switch to a different arbitrary behavior -- it's trade offs all the way down).

One of the simpler implementations we might consider is to treat the seed_bits as an index into a sequence of candidate responses

def deterministicFunction(seed_bits):

choices = ???

n = computeIndex(seed_bits, len(choices))

return choices[n]

This exposes yet another concern: k seed_bits means 2^k degrees of freedom; unless len(choices) happens to be a power of 2 and not bigger than 2^k, there's going to be some bias in choosing. You can make the bias error arbitrarily small by choosing a large enough value for k, but you can't eliminate it with a finite number of bits.

Breaking down the problem further, we can split the work into two elements, one responsible for producing the pool of candidates, another for actually choosing one of them.

def deterministicFunction(seed_bits):

return choose(seed_bits, weighted_candidates())

def choose(seed_bits, weighted_candidates):

choices = []

# note: the order of elements in this enumeration

# is still arbitrary

for candidate, weight in weighted_candidates:

for _ in range(weight):

choices.add(candidate)

# technically, this is also still arbirary

n = computeIndex(seed_bits, len(choices))

return choices[n]

At this point, we can decide to use "simplest thing that could possibly work" to implement computeIndex (test first, if you like), and this new weighted_candidates() function is also easy to test, since each test of it is just "count the candidates and make sure that the problem constraints are satisfied by the population as a whole". choose can be tested using much simpler populations as inputs.

This kind of an implementation could be unsatisfactory - after all, we're building this data structure of candidates, and then another of choices, only to pick a single one. That may be the best we can do. Often, however, different implementation is possible.

The problem specification, in effect, defines for us the size of the (weighted) population of responses. In other words, len(choices) is really some constant L.

choices = [ generate(n) for n in range(L)]

n = computeIndex(seed_bits, L)

return choices[n]

which in turn can be simplified to

n = computeIndex(seed_bits, L)

return generate(n)

Which is to say, we don't need to pass around a bunch of data structures if we can calculate which response is in the nth place.

Notice that while generate(n) still has arbitrary behavior, there are definitive assertions we can make about the data structure [generate(n) for n in range(L)].

Refactoring a bit to clean things up, we might have

def randomListOfIntegers():

seed_bits = generalPurpose.random_bits()

n = computeIndex(seed_bits, L)

return generateListOfIntegers(n)

Note that this skeleton hasn't "emerged" from a writing out a bunch of tests and refactoring, but instead from thinking about the problem and the choices that we need to consider in order to "control the gap between decision and feedback".

It's probably fair to call this a "spike" - a sandbox exercise that we use to better understand the problem we are trying to solve.

The same number doesn’t appear twice

An awareness of combinatorics is going to help here.

Basic idea: we can compute the set of all possible arrangements of 4 unique elements of the set [0,1,2,3,4,5,6,7,8,9,10], and we can use a technique called squashed ordering to produce a specific subset of them.

Here, we'd probably want to handle the special case of 3 a bit more carefully. The rough skeleton is going to look something like

def generateListOfIntegers(n):

other_numbers = [0,1,2,4,5,6,7,8,9,10]

has3, hasLength3, k = decode(n)

if has3:

if hasLength3:

# 45 distinct candidates

assert 0 <= k < 45

return [3] choose(other_numbers, 2, k)

else:

# 120 distinct candidates

assert 0 <= k < 120

return [3] choose(other_numbers, 3, k)

else:

if hasLength3:

# 120 distinct candidates

assert 0 <= k < 120

return choose(other_numbers, 3, k)

else:

# 210 distinct candidates

assert 0<= k < 210

return choose(other_numbers, 4, k)

Where choose(other_numbers, j, k) returns the kth subset of other_numbers with j total elements, and decode(n) has the logic necessary to ensure that the population weights come out right.

The behavior of choose is arbitrary, but there is a "natural" order to the progression of subsets (ie, you can "sort" them), so it's reasonable to arbitrarily use the sorted order.

It's probably also worth noticing that choose is very general purpose - the list we pass in could be just about anything, and it really doesn't care what you do with the answer. Compare that with decode, where our definition of the "right" behavior is tightly coupled to its consumption by generateListOfNumbers.

You may want to review Peter Seiber's Fischer Chess Exercise, to see the different approaches people were taking when TDD was new. Warning, the threading is horribly broken now, so you may need to sift through multiple threads to find all the good bits.