I am attempting to detect an image of a certain type on a degraded-quality page, where the image can be rotated and translated. I need to crop the detected image out of the page, so I need the rotation and coordinates of the detected image. For example, an image that has been photocopied onto an A4 page.

I am using SIFT to detect objects on the scanned page. These images can be rotated and translated, but they are not sheared and have no perspective distortion. I am using the classic feature-matching approach (SIFT, SURF, ORB, etc.), but it estimates a perspective transform (homography) in order to compute the 4 corners of the bounding polygon. The issue is that the keypoints don't line up perfectly (due to varying image quality), so the projection models that error as spatial distortion and the polygon is, given that model, correctly distorted.
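A quick way to quantify that distortion, sketched here with NumPy only, is to compare the four corners returned by `cv2.perspectiveTransform` against the expected w×h rectangle. `rectangle_error` is a hypothetical helper, and it assumes the corners are in the same order as the template corners `[[0, 0], [0, h], [w, h], [w, 0]]`.

```python
import numpy as np


def rectangle_error(corners, w, h):
    """Measure how far 4 ordered corners deviate from a w x h rectangle.

    Assumes corner order (0, 0) -> (0, h) -> (w, h) -> (w, 0).
    Returns (max_side_error, max_angle_error_deg): the largest absolute
    difference between a side length and its expected length, and the
    largest deviation of an interior angle from 90 degrees.
    """
    p = np.asarray(corners, dtype=float).reshape(4, 2)
    expected = np.array([h, w, h, w], dtype=float)
    sides = np.roll(p, -1, axis=0) - p          # edge i goes from corner i to corner i+1
    side_err = np.abs(np.linalg.norm(sides, axis=1) - expected).max()

    angle_err = 0.0
    for i in range(4):
        u = -sides[i - 1]   # edge from corner i back to the previous corner
        v = sides[i]        # edge from corner i to the next corner
        cos_a = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
        ang = np.degrees(np.arccos(np.clip(cos_a, -1.0, 1.0)))
        angle_err = max(angle_err, abs(ang - 90.0))
    return side_err, angle_err
```

A detection could, for example, be flagged when either value exceeds a tolerance chosen for the scan quality; the tolerance itself is an assumption you would tune.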

- The approach I want to try is to "snap" the detected polygon corners to the known dimensions of the input (template) image. This should let me determine the rotation angle and translation of the image on the page.
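One way to do that snapping is a least-squares rigid fit (the 2-D Kabsch/Procrustes solution): find the rotation θ and translation t that move the exact w×h template rectangle as close as possible to the four detected corners. This is a NumPy-only sketch; `snap_rectangle` is a hypothetical name, and it assumes the corners come back in the same order as the template corners `(0, 0), (0, h), (w, h), (w, 0)` and that page and template share the same scale.

```python
import numpy as np


def snap_rectangle(corners, w, h):
    """Fit an exact w x h rectangle to four (possibly distorted) corners.

    corners: four (x, y) points ordered like (0, 0), (0, h), (w, h), (w, 0).
    Returns (angle_deg, t, snapped) where snapped = template @ R.T + t is the
    least-squares rigid fit (rotation + translation, no scaling).
    """
    template = np.array([[0, 0], [0, h], [w, h], [w, 0]], dtype=float)
    detected = np.asarray(corners, dtype=float).reshape(4, 2)

    # Centre both point sets so only the rotation remains to be solved
    mu_t = template.mean(axis=0)
    mu_d = detected.mean(axis=0)
    a = template - mu_t
    b = detected - mu_d

    # 2-D Kabsch: the optimal angle comes from summed cross and dot products
    cross = np.sum(a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0])
    dot = np.sum(a[:, 0] * b[:, 0] + a[:, 1] * b[:, 1])
    theta = np.arctan2(cross, dot)

    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta),  np.cos(theta)]])
    t = mu_d - R @ mu_t
    snapped = template @ R.T + t
    return np.degrees(theta), t, snapped
```

The snapped corners always have exactly the template's side lengths. Because image y points down, a positive angle here appears clockwise on screen. If the page was scanned at a different resolution than the template, a uniform scale factor would also need to be fitted (the Umeyama variant of this fit).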

Things I have tried (and that failed):

- Filtering keypoints to remove outliers and minimise distortion.

- Affine/rotation/etc. matrices; however, they assume the points from the samples are equidistant and don't do any approximation.

- ICP: this would probably work, but there are not enough samples, and it seems to be more of a general approach than a concrete method. I am certain there is a better way.
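For reference, a least-squares fit does not need exact correspondences: a rotation and translation can be estimated from all matched keypoints at once, with outliers rejected by RANSAC. OpenCV offers `cv2.estimateAffinePartial2D` with `method=cv2.RANSAC` for the closely related similarity model (rotation, uniform scale, translation). The NumPy-only sketch below illustrates the idea for a rigid model without scale; `fit_rigid` and `ransac_rigid` are hypothetical names and the threshold and iteration count are assumptions.

```python
import numpy as np


def fit_rigid(src, dst):
    """Least-squares rotation + translation mapping src -> dst (2-D Kabsch)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    a, b = src - mu_s, dst - mu_d
    theta = np.arctan2(np.sum(a[:, 0] * b[:, 1] - a[:, 1] * b[:, 0]),
                       np.sum(a[:, 0] * b[:, 0] + a[:, 1] * b[:, 1]))
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta),  np.cos(theta)]])
    return R, mu_d - R @ mu_s


def ransac_rigid(src, dst, thresh=5.0, iters=500, seed=0):
    """Robustly estimate R, t from matched points; returns (R, t, inlier_mask)."""
    src = np.asarray(src, dtype=float).reshape(-1, 2)
    dst = np.asarray(dst, dtype=float).reshape(-1, 2)
    if len(src) < 2:
        raise ValueError("need at least two matched points")
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        # Two correspondences are enough to define a rigid hypothesis
        idx = rng.choice(len(src), size=2, replace=False)
        R, t = fit_rigid(src[idx], dst[idx])
        err = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = err < thresh
        if inliers.sum() > best.sum():
            best = inliers
    if not best.any():
        raise ValueError("no consensus found")
    # Refine with a least-squares fit over every inlier
    R, t = fit_rigid(src[best], dst[best])
    return R, t, best
```

`src` and `dst` could be the `query_pts` and `train_pts` arrays from the code below, and the angle is `np.degrees(np.arctan2(R[1, 0], R[0, 0]))`. The 5-pixel threshold mirrors the `5.0` passed to `cv2.findHomography`.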

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

FLANN_INDEX_KDTREE = 1  # KD-tree index, suited to float descriptors such as SIFT


def detect(img, frame, detector):
    frame = frame.copy()

    kp1, desc1 = detector.detectAndCompute(img, None)
    kp2, desc2 = detector.detectAndCompute(frame, None)

    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict()
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(desc1, desc2, k=2)

    # Lowe's ratio test; stop after 20 good matches
    good_points = []
    for pair in matches:
        if len(pair) < 2:  # knnMatch can return fewer than k neighbours
            continue
        m, n = pair
        if m.distance < 0.5 * n.distance:
            good_points.append(m)
            if len(good_points) == 20:
                break

    # out_img = cv2.drawMatches(img, kp1, frame, kp2, good_points, flags=2, outImg=None)
    # plt.figure(figsize=(6*4, 8*4))
    # plt.imshow(out_img)

    if len(good_points) > 10:  # findHomography needs at least 4 matches; require more for robustness
        # Get the matching points
        query_pts = np.float32([kp1[m.queryIdx].pt for m in good_points]).reshape(-1, 1, 2)
        train_pts = np.float32([kp2[m.trainIdx].pt for m in good_points]).reshape(-1, 1, 2)

        matrix, mask = cv2.findHomography(query_pts, train_pts, cv2.RANSAC, 5.0)
        if matrix is None:  # RANSAC found no consistent homography
            return
        matches_mask = mask.ravel().tolist()

        h, w = img.shape  # img is expected to be grayscale
        pts = np.float32([[0, 0], [0, h], [w, h], [w, 0]]).reshape(-1, 1, 2)
        dst = cv2.perspectiveTransform(pts, matrix)

        overlayImage = cv2.polylines(frame, [np.int32(dst)], True, (0, 0, 0), 3)
        plt.figure(figsize=(6*2, 8*2))
        plt.imshow(overlayImage)


sift = cv2.SIFT_create()

# img (grayscale template) and frames (grayscale pages) are loaded elsewhere
for frame in frames:
    detect(img, frame, sift)
```
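If `cv2.findHomography` is swapped for a model that cannot represent perspective, for example `M, inliers = cv2.estimateAffinePartial2D(query_pts, train_pts, method=cv2.RANSAC, ransacReprojThreshold=5.0)`, the 2×3 result has the form `[[s·cos a, -s·sin a, tx], [s·sin a, s·cos a, ty]]`, so rotation, scale and translation can be read off directly. The helper below (hypothetical name `decompose_similarity`) is a sketch that only does that arithmetic.

```python
import math

import numpy as np


def decompose_similarity(M):
    """Split a 2x3 rotation + uniform-scale + translation matrix into its parts.

    Assumes M = [[s*cos(a), -s*sin(a), tx],
                 [s*sin(a),  s*cos(a), ty]].
    Returns (angle_deg, scale, (tx, ty)).
    """
    M = np.asarray(M, dtype=float)
    angle = math.degrees(math.atan2(M[1, 0], M[0, 0]))
    scale = math.hypot(M[0, 0], M[1, 0])
    return angle, scale, (float(M[0, 2]), float(M[1, 2]))
```

Mapping the template corners through such a matrix always yields an exact rectangle (scaled by `s`), which is the "snapped" shape the question asks for.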

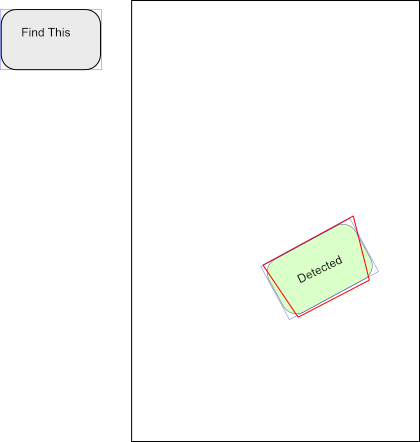

- An example of a page containing the image we are trying to detect.

- Blue line: rectangle with the correct size

- Red line: polygon determined using the perspective transform (homography)

Answer:

I stumbled on a post that shows how to extract the minimum-area bounding rectangle from a set of points using `cv2.minAreaRect`. This works really well, as it also gives the rotation.
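`cv2.minAreaRect` returns `((cx, cy), (width, height), angle)`. The idea behind it is that the minimum-area rectangle around a convex polygon always has one side collinear with a hull edge, so it is enough to try each edge direction. The NumPy sketch below (hypothetical `convex_hull` and `min_area_rect`) illustrates that; it is not OpenCV's code, it tries every edge directly instead of using rotating calipers, and its angle is simply the direction of the chosen hull edge, which differs from OpenCV's convention.

```python
import numpy as np


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in order around the hull."""
    pts = sorted(map(tuple, np.asarray(points, dtype=float).reshape(-1, 2)))
    if len(pts) <= 2:
        return np.array(pts)

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return np.array(lower[:-1] + upper[:-1])


def min_area_rect(points):
    """Return (centre, (width, height), angle_deg) of the minimum-area box."""
    hull = convex_hull(points)
    best = None
    for i in range(len(hull)):
        edge = hull[(i + 1) % len(hull)] - hull[i]
        theta = np.arctan2(edge[1], edge[0])
        # Rotate the hull by -theta so this edge lies along the x-axis
        c, s = np.cos(-theta), np.sin(-theta)
        rot = hull @ np.array([[c, -s], [s, c]]).T
        lo, hi = rot.min(axis=0), rot.max(axis=0)
        area = np.prod(hi - lo)
        if best is None or area < best[0]:
            # Rotate the axis-aligned box centre back into page coordinates
            c2, s2 = np.cos(theta), np.sin(theta)
            centre = np.array([[c2, -s2], [s2, c2]]) @ ((lo + hi) / 2)
            best = (area, centre, tuple(hi - lo), np.degrees(theta))
    _, centre, size, angle = best
    return centre, size, angle
```

Note that for a distorted quadrilateral the resulting box encloses the polygon, so its size can be somewhat larger than the template's.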

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

FLANN_INDEX_KDTREE = 1  # KD-tree index, suited to float descriptors such as SIFT


def detect_ICP(img, frame, detector):
    frame = frame.copy()

    kp1, desc1 = detector.detectAndCompute(img, None)
    kp2, desc2 = detector.detectAndCompute(frame, None)

    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict()
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(desc1, desc2, k=2)

    # Keep pairs with two neighbours, best ratio-test margin first
    matches = sorted((p for p in matches if len(p) == 2),
                     key=lambda x: x[0].distance - 0.5 * x[1].distance)

    good_points = []
    for m, n in matches:
        if m.distance < 0.5 * n.distance:
            good_points.append(m)

    out_img = cv2.drawMatches(img, kp1, frame, kp2, good_points, flags=2, outImg=None)
    plt.figure(figsize=(6*4, 8*4))
    plt.imshow(out_img)

    if len(good_points) > 10:  # findHomography needs at least 4 matches; require more for robustness
        # Get the matching points
        query_pts = np.float32([kp1[m.queryIdx].pt for m in good_points]).reshape(-1, 1, 2)
        train_pts = np.float32([kp2[m.trainIdx].pt for m in good_points]).reshape(-1, 1, 2)

        matrix, mask = cv2.findHomography(query_pts, train_pts, cv2.RANSAC, 5.0)
        if matrix is None:  # RANSAC found no consistent homography
            return
        # matches_mask = mask.ravel().tolist()

        h, w = img.shape  # img is expected to be grayscale
        pts = np.float32([[0, 0], [0, h], [w, h], [w, 0]]).reshape(-1, 1, 2)
        dst = cv2.perspectiveTransform(pts, matrix)

        # Determine the minimum-area bounding box: ((cx, cy), (width, height), angle)
        minAreaRect = cv2.minAreaRect(dst)
        rotatedBox = cv2.boxPoints(minAreaRect)  # 4 corners of the rotated rectangle
        rotatedBox = np.float32(rotatedBox).reshape(-1, 1, 2)

        overlayImage = cv2.polylines(frame, [np.int32(rotatedBox)], True, (0, 0, 0), 3)
        plt.figure(figsize=(6*2, 8*2))
        plt.imshow(overlayImage)
```
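To actually crop the detected image out of the page, one option is to sample the page through the fitted transform: each pixel (x, y) of a w×h output takes the page pixel at R·(x, y) + t, where R and t are assumed to map template coordinates to page coordinates (for example from a rigid fit of the template corners to the detected box). This NumPy sketch uses nearest-neighbour sampling and a hypothetical name, `crop_rotated`. In OpenCV, the same mapping with interpolation should be `cv2.warpAffine(page, M, (w, h), flags=cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP)` with `M = np.hstack([R, t.reshape(2, 1)])`.

```python
import numpy as np


def crop_rotated(page, R, t, w, h, fill=0):
    """Extract a w x h patch whose pixel (x, y) comes from page at R @ (x, y) + t.

    Nearest-neighbour sampling; output pixels that map outside the page get `fill`.
    """
    ys, xs = np.mgrid[0:h, 0:w]
    coords = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    src = coords @ np.asarray(R, dtype=float).T + np.asarray(t, dtype=float)
    sx = np.rint(src[:, 0]).astype(int)
    sy = np.rint(src[:, 1]).astype(int)
    inside = (sx >= 0) & (sx < page.shape[1]) & (sy >= 0) & (sy < page.shape[0])

    # Works for grayscale (H, W) and colour (H, W, C) pages alike
    out = np.full((h * w,) + page.shape[2:], fill, dtype=page.dtype)
    out[inside] = page[sy[inside], sx[inside]]
    return out.reshape((h, w) + page.shape[2:])
```

The output is already de-rotated into the template's frame, so it can be compared with the template pixel for pixel.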