I have run into slow processing times in a PHP program. I have a MySQL database with over 1000 tables. A new table is created each time a device is added, e.g. assets_data_imeixx, up to the 1000th such table.

Each table contains about 45,000 rows, with new records inserted every 10 seconds. Below is my PHP code to query the database and fetch these records based on datetime.

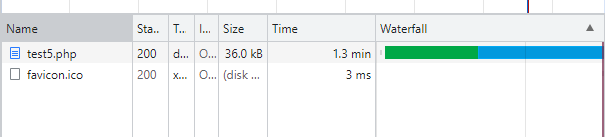

Issue: The program executes without error, but it takes about 1.3 to 4 minutes for very large record sets.

PHP Code:

// $ms is the mysqli connection created in config.php (it works)

$user_id = '5';

$q = "SELECT * FROM `user_assets` WHERE `user`='".$user_id ."' ORDER BY `imei` ASC";

$r = mysqli_query($ms,$q);

$result = array(); //$result array to contain all data

while($row =mysqli_fetch_array($r)){

//fetch 7 days record

for ($i = 1; $i <= 7; $i++) {

$date = "-" . $i . " days";

$days_ago = date('Y-m-d', strtotime($date, strtotime('today')));

$sql1 = "SELECT * FROM assets_data_" . $row["imei"] . " WHERE dt_time LIKE '" . $days_ago . "%' LIMIT 1"; // its correct

//$result1 = $conn->query($sql1);

$result1 = mysqli_query($ms,$sql1);

$row2 = mysqli_fetch_array($result1);

echo $row['imei']." ".$row2['dt_server']."<br/>";

}

}

The code above fetches over 1000 devices from the user_assets table. Each IMEI has its own table containing over 45,000 rows of location data.

The for loop runs for each IMEI table and queries its records.

The code runs without error but takes far too long to complete. I want to optimize it so that it executes in at most 5 seconds.

I need help and suggestions on optimizing queries and iteration over data at this scale.

(from Comment)

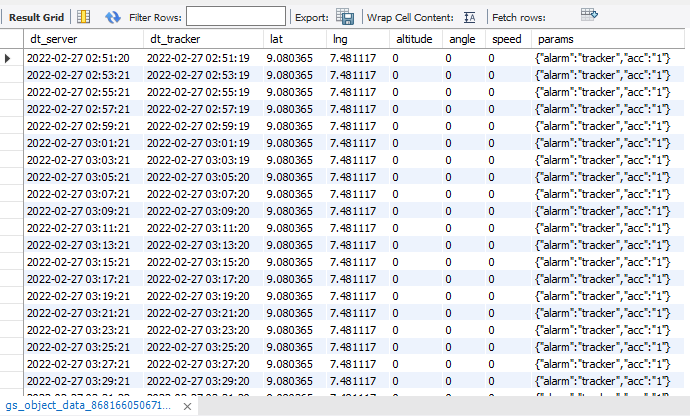

CREATE TABLE gs_object_data_863844052008346 (

dt_server datetime NOT NULL,

dt_tracker datetime NOT NULL,

lat double DEFAULT NULL,

lng double DEFAULT NULL,

altitude double DEFAULT NULL,

angle double DEFAULT NULL,

speed double...

(From Comment)

gs_object_data_072101424612

gs_object_data_072101425049

gs_object_data_072101425486

gs_object_data_072101445153

gs_object_data_111111111111111

gs_object_data_1234567894

gs_object_data_222222222222222

gs_object_data_2716325849

gs_object_data_2716345818

gs_object_data_30090515907

gs_object_data_3009072323

gs_object_data_3009073758

gs_object_data_352093088838221

gs_object_data_352093088839310

gs_object_data_352093088840045

gs_object_data_352121088128697

gs_object_data_352121088132681

gs_object_data_352621109438959

gs_object_data_352621109440203

gs_object_data_352625694095355

gs_object_data_352672102822186

gs_object_data_352672103490900

gs_object_data_352672103490975

gs_object_data_352672103490991

gs_object_data_352887074794052

gs_object_data_352887074794102

gs_object_data_352887074794193

gs_object_data_352887074794417

gs_object_data_352887074794425

gs_object_data_352887074794433

gs_object_data_352887074794441

gs_object_data_352887074794458

gs_object_data_352887074794474

gs_object_data_352887074813696

gs_object_data_352887074813712

gs_object_data_352887074813720

gs_object_data_352887074813753

gs_object_data_352887074813761

gs_object_data_352887074813803

(and so on: 900 tables, each holding different location data.)

Requirement: Loop through each table and fetch data for a selected date range, for example: "SELECT dt_server FROM gs_object_data_" . $row["imei"] . " WHERE dt_server BETWEEN '2022-02-05 00:00:00' AND '2022-02-12 00:00:00'";

Expected Result: A result set containing the data from every table for the selected date range. With 1000 tables, that means looping through each table and fetching the data from each one.

CodePudding user response:

I agree with KIKO -- 1 table not 1000. But, if I understand the rest, there are really 2 or 3 main tables.

Looking at your PHP -- It is often inefficient to look up one list, then go into a loop to find more. The better way (perhaps 10 times as fast) is to have a single SELECT with a JOIN to do both selects at once.

Consider some variation of this MySQL syntax; it may avoid most of the PHP code relating to $days_ago:

CURDATE() - INTERVAL 3 DAY

After also merging the Selects, this gives you the rows for the last 7 days:

WHERE date >= CURDATE() - INTERVAL 7 DAY
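As a rough sketch of what the merged query might look like: this assumes the per-device tables have been consolidated into one table, here given the hypothetical name `assets_data`, with an `imei` column. It also assumes that "one row per device per day" can be expressed as the earliest `dt_server` of each day, which replaces the arbitrary row that `LIMIT 1` returned. Neither assumption comes from the question.

```sql
-- Hypothetical consolidated table: assets_data(imei, dt_server, ...)
-- One round trip instead of (devices x days) separate queries.
SELECT ua.imei,
       DATE(d.dt_server) AS day,
       MIN(d.dt_server)  AS first_dt_server   -- assumed stand-in for LIMIT 1
FROM user_assets AS ua
JOIN assets_data AS d
  ON d.imei = ua.imei
WHERE ua.`user` = 5
  AND d.dt_server >= CURDATE() - INTERVAL 7 DAY   -- 7 days ago ...
  AND d.dt_server <  CURDATE()                    -- ... up to, not including, today
GROUP BY ua.imei, DATE(d.dt_server)
ORDER BY ua.imei, day;
```

The PHP side then reduces to a single `mysqli_query` call and one loop over the result set.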

(I did not understand the need for LIMIT 1; please explain.)

Yes, you can use DATETIME values as strings, but try not to. Usually DateTime functions are more efficient.
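To illustrate the difference on one of the existing per-device tables (the table name is taken from the question's comment; whether `dt_server` is indexed is unknown, since the posted schema is truncated): a `LIKE` comparison makes MySQL treat the DATETIME as a string, which typically prevents an index on that column from being used, whereas a half-open range keeps the comparison on the DATETIME itself.

```sql
-- String comparison: the column is converted to text for every row examined.
SELECT dt_server
FROM gs_object_data_863844052008346
WHERE dt_server LIKE '2022-02-05%'
LIMIT 1;

-- Range comparison: same day, expressed as a half-open interval,
-- so an index on dt_server (if one exists) can be used.
SELECT dt_server
FROM gs_object_data_863844052008346
WHERE dt_server >= '2022-02-05'
  AND dt_server <  '2022-02-06'
LIMIT 1;
```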

Consider "composite" indexes:

INDEX(imei, dt)

which will be very efficient for

WHERE imei = $imei

AND dt >= CURDATE() - INTERVAL 7 DAY
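A minimal sketch of adding and checking such an index, assuming a consolidated table with the hypothetical name `assets_data` and columns `imei` and `dt` (the index name `idx_imei_dt` is also made up for illustration):

```sql
-- Equality column first, range column second.
ALTER TABLE assets_data
  ADD INDEX idx_imei_dt (imei, dt);

-- EXPLAIN shows whether the optimizer actually picks the new index.
EXPLAIN
SELECT *
FROM assets_data
WHERE imei = 863844052008346
  AND dt >= CURDATE() - INTERVAL 7 DAY;
```

Putting `imei` first lets MySQL jump to one device's rows and then scan only the date range within them.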

I would ponder ways to have less redundancy in the output; but that should mostly be done after fetching the raw data from the table(s).

Turn on the SlowLog with a low value of long_query_time; it will help you locate the worst query; then we can focus on it.
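For reference, the slow query log can usually be switched on at runtime like this, assuming an account with sufficient privileges. The 1-second threshold is only an example value.

```sql
-- Enable the slow query log without restarting the server.
SET GLOBAL slow_query_log = 'ON';

-- Log every statement that takes longer than 1 second (example value).
-- The global setting applies to connections opened after this point.
SET GLOBAL long_query_time = 1;

-- Find out where the log is being written.
SHOW VARIABLES LIKE 'slow_query_log_file';
```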

An IMEI is up to 17 characters, always digits? If you are not already using this, I suggest BIGINT since it will occupy only 8 bytes.
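A hedged sketch of that conversion, assuming `imei` in `user_assets` is currently a string column and may be declared `NOT NULL` (neither is confirmed by the question). Note that some of the table names listed in the question start with a zero (e.g. gs_object_data_072101424612); a numeric column would drop that leading zero, so it is worth checking first.

```sql
-- Any rows returned here would lose their leading zero as BIGINT.
SELECT imei
FROM user_assets
WHERE imei LIKE '0%';

-- Only if the check above returns nothing:
ALTER TABLE user_assets
  MODIFY imei BIGINT UNSIGNED NOT NULL;
```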

For further discussion, please provide SHOW CREATE TABLE for each of the main tables.

CodePudding user response:

Since all those 1000 tables are the same it would make sense to put all that data into 1 table. Then partition that table on date, use proper indexes, and optimize the query.
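One way this could look, as a sketch only: the table name `assets_data`, the index name, and the monthly partition boundaries are assumptions, and only the columns that are fully visible in the posted schema are listed (the rest of that schema is truncated).

```sql
-- Hypothetical single table replacing the per-device tables.
CREATE TABLE assets_data (
    imei       BIGINT UNSIGNED NOT NULL,  -- replaces the IMEI in the table name
    dt_server  DATETIME NOT NULL,
    dt_tracker DATETIME NOT NULL,
    lat        DOUBLE DEFAULT NULL,
    lng        DOUBLE DEFAULT NULL,
    altitude   DOUBLE DEFAULT NULL,
    angle      DOUBLE DEFAULT NULL,
    -- remaining columns from the original tables go here
    INDEX idx_imei_dt (imei, dt_server)
)
PARTITION BY RANGE COLUMNS (dt_server) (
    PARTITION p2022_01 VALUES LESS THAN ('2022-02-01'),
    PARTITION p2022_02 VALUES LESS THAN ('2022-03-01'),
    PARTITION p_future VALUES LESS THAN (MAXVALUE)
);
```

With a date range in the `WHERE` clause, MySQL can skip partitions that cannot contain matching rows, and the `(imei, dt_server)` index narrows the search within the partitions that remain.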

See: Normalization of Database

Since you limit results to one user, and one row per device, it should be possible to execute a query in well below one second.