I am using PySpark 3.1 locally to access data in S3 with an AWS access key, a secret key, and the "s3a" protocol, and it works fine. I want to use an AWS role instead of AWS keys. Can someone tell me the syntax for this?

I tried the code below, but it gives an error:

```python
# Ask S3A to obtain credentials by assuming an IAM role
spark_context._jsc.hadoopConfiguration().set("fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.auth.AssumedRoleCredentialProvider")
# The role to assume
spark_context._jsc.hadoopConfiguration().set("fs.s3a.assumed.role.arn", "arn:aws:iam::2:role/admin-admin-admin")
```

The error is:

```
Unable to load AWS credentials from environment variables (AWS_ACCESS_KEY_ID (or AWS_ACCESS_KEY) and AWS_SECRET_KEY
```

Thanks, XI

Answer:

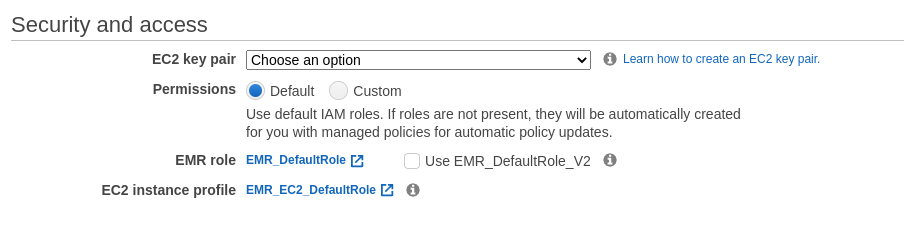

Your EMR instances should already have a role attached to them: you specify that role when you create the cluster. So find out which cluster you are using and which role is attached to it, then add the appropriate IAM policy to that role. In fact, I wouldn't expect your code to need to assume a role without a very good reason.

If you are using long-lived IAM access keys in production, you are doing something wrong.

For more information about the role used by the cluster's underlying EC2 instances (the EC2 instance profile), see the EMR docs: https://docs.aws.amazon.com/emr/latest/ManagementGuide/emr-iam-role-for-ec2.html
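To find out which role your cluster nodes actually run as, one option is to ask STS for the caller identity from a node: when the caller is an instance profile, the returned ARN has the form `arn:aws:sts::<account-id>:assumed-role/<role-name>/<session-name>`. The sketch below assumes `boto3` is installed on the node; the function names `role_name_from_caller_arn` and `current_role_name` are hypothetical helpers, and only the parser runs without AWS access.

```python
import re
from typing import Optional

# STS caller ARN for an assumed role (e.g. an EC2 instance profile):
#   arn:aws:sts::<12-digit account id>:assumed-role/<role-name>/<session-name>
# The partition may be "aws", "aws-cn", "aws-us-gov", ...
_ASSUMED_ROLE_ARN = re.compile(
    r"^arn:aws[a-z-]*:sts::(?P<account>\d{12}):assumed-role/(?P<role>[^/]+)/(?P<session>.+)$"
)


def role_name_from_caller_arn(arn: str) -> Optional[str]:
    """Return the IAM role name from an STS caller ARN, or None if the caller is not an assumed role."""
    match = _ASSUMED_ROLE_ARN.match(arn)
    return match.group("role") if match else None


def current_role_name() -> Optional[str]:
    """Ask STS who we are (hypothetical helper; needs boto3 and working credentials on the node)."""
    import boto3  # imported lazily so the parser above works without boto3 installed

    arn = boto3.client("sts").get_caller_identity()["Arn"]
    return role_name_from_caller_arn(arn)
```

On an EMR node using the default role, `current_role_name()` would be expected to return something like `EMR_EC2_DefaultRole`; that is the role to attach the S3 policy to. A plain IAM user ARN (`arn:aws:iam::...:user/...`) yields `None`, which suggests you are still running with user access keys.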

Answer:

- The S3A `AssumedRoleCredentialProvider` uses your current login credentials to call STS `AssumeRole` and obtain short-lived credentials for that role.

- Make sure your Hadoop binaries are up to date; use Hadoop 3.3.1 or later.

- You still have to provide the `fs.s3a.access.key` and `fs.s3a.secret.key` values; otherwise it cannot call `AssumeRole`.

- That login must have permission to call `AssumeRole` on the given role.
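Putting these points together, here is a minimal sketch, assuming Hadoop 3.3.1+ with a matching `hadoop-aws` JAR on Spark's classpath. It builds the settings as `spark.hadoop.*` entries so Spark copies them into the Hadoop configuration when the session is created. The helper names `assumed_role_s3a_conf` and `build_spark_session` are hypothetical; `fs.s3a.assumed.role.credentials.provider` (whose default is already `SimpleAWSCredentialsProvider`, i.e. the access/secret key pair) and the optional `fs.s3a.assumed.role.session.duration` come from the Hadoop S3A assumed-role documentation rather than from the answer above.

```python
from typing import Dict, Optional

ASSUMED_ROLE_PROVIDER = "org.apache.hadoop.fs.s3a.auth.AssumedRoleCredentialProvider"
SIMPLE_PROVIDER = "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider"


def assumed_role_s3a_conf(
    role_arn: str,
    access_key: str,
    secret_key: str,
    session_duration: Optional[str] = None,
) -> Dict[str, str]:
    """Build Spark config entries for S3A assumed-role access (hypothetical helper)."""
    conf = {
        # S3A gets its credentials from this provider, which calls STS AssumeRole.
        "fs.s3a.aws.credentials.provider": ASSUMED_ROLE_PROVIDER,
        # The role to assume.
        "fs.s3a.assumed.role.arn": role_arn,
        # Credentials used for the AssumeRole call itself; this login must be allowed to assume the role.
        "fs.s3a.assumed.role.credentials.provider": SIMPLE_PROVIDER,
        "fs.s3a.access.key": access_key,
        "fs.s3a.secret.key": secret_key,
    }
    if session_duration is not None:
        # Lifetime of the temporary role credentials, e.g. "30m".
        conf["fs.s3a.assumed.role.session.duration"] = session_duration
    # The "spark.hadoop." prefix makes Spark copy each entry into the Hadoop configuration.
    return {f"spark.hadoop.{key}": value for key, value in conf.items()}


def build_spark_session(conf: Dict[str, str], app_name: str = "s3a-assumed-role"):
    """Create a SparkSession with the given entries (hypothetical helper; requires pyspark).

    The entries must be set before the first SparkContext is created; getOrCreate() on an
    already-running session will not re-read spark.hadoop.* settings.
    """
    from pyspark.sql import SparkSession  # imported lazily so the config helper works without pyspark

    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

With placeholder values, usage would look like `spark = build_spark_session(assumed_role_s3a_conf(role_arn, access_key, secret_key))` followed by a normal read such as `spark.read.parquet("s3a://your-bucket/path/")`, where the bucket and path are hypothetical.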