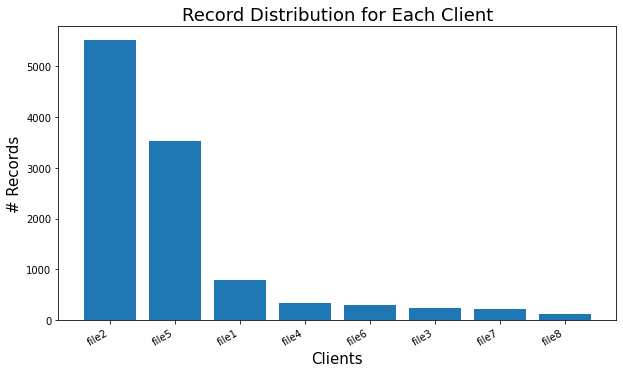

I am applying federated learning to multiple files using TensorFlow Federated. The problem is that the size of the data (number of records) in each file is different.

- Is it a problem in federated learning training for each client to have a different dataset size? If so, how can I overcome it?

- Is there a way to see how each client is performing during federated training?

CodePudding user response:

> Is it a problem in federated learning training for each client to have a different dataset size? If so, how can I overcome it?

This depends on a variety of factors, a large one being the data distribution on the clients. For example, if each client's data looks very similar (e.g. effectively the same distribution, IID), it doesn't particularly matter which client is used.

If this isn't the case, a common technique is to limit the maximum number of steps a client takes on its dataset each round, to promote more equal participation in the training process. In TensorFlow and TFF this can be accomplished using `tf.data.Dataset.take` to restrict each client to a maximum number of iterations. In TFF this can be applied to every client using `tff.simulation.datasets.ClientData.preprocess`. This is discussed with examples in the tutorial "Working with TFF's ClientData".
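To make the effect of the cap concrete, here is a minimal pure-Python sketch. It uses `itertools.islice` as a stand-in for `tf.data.Dataset.take`; the client names, batch counts, and `MAX_BATCHES_PER_ROUND` are made up for illustration. In TFF the analogous step would presumably be a preprocessing function passed to `ClientData.preprocess` that calls `take` on each client's dataset.

```python
from itertools import islice

# Hypothetical clients holding very different numbers of batches.
client_batches = {
    "client_a": [f"a_batch_{i}" for i in range(3)],
    "client_b": [f"b_batch_{i}" for i in range(50)],
    "client_c": [f"c_batch_{i}" for i in range(400)],
}

# Assumed cap on local steps per round; tune it for your own data.
MAX_BATCHES_PER_ROUND = 10


def cap_batches(batches, max_batches):
    """Return at most `max_batches` items, like Dataset.take(max_batches)."""
    return list(islice(batches, max_batches))


capped = {
    client_id: cap_batches(batches, MAX_BATCHES_PER_ROUND)
    for client_id, batches in client_batches.items()
}

for client_id, batches in capped.items():
    print(client_id, len(client_batches[client_id]), "->", len(batches))
```

Small clients are left untouched, while large clients contribute no more than the cap in any one round, so no single client dominates the local training steps.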

> Is there a way to see how each client is performing during federated training?

Clients can return individual metrics to report how they are performing, but this isn't done by default. In `tff.learning.algorithms.build_weighted_fed_avg` the `metrics_aggregator` defaults to `tff.learning.metrics.sum_then_finalize`, which usually produces global averages of the metrics. There isn't an out-of-the-box solution, but one could implement a "finalize-then-sample" aggregator that would likely meet this need. Re-using `tff.aggregators.federated_sample` and looking at the source code for `sum_then_finalize` as an example would be a good place to start.
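The difference between the two aggregation strategies can be sketched in plain Python. This is only an illustration of the idea, not TFF code: the metric values, the helper names (`sum_then_finalize_sketch`, `finalize_then_sample_sketch`), and the use of `random.sample` in place of a federated sampling aggregator are all assumptions.

```python
import random

# Hypothetical unfinalized metrics reported by each client.
client_metrics = {
    "client_a": {"correct": 45, "total": 50},
    "client_b": {"correct": 300, "total": 400},
    "client_c": {"correct": 6, "total": 10},
}


def finalize(metric):
    """Turn unfinalized counts into an accuracy value."""
    return metric["correct"] / metric["total"]


def sum_then_finalize_sketch(metrics):
    """Sum the raw counts over all clients, then finalize once (global view)."""
    summed = {
        "correct": sum(m["correct"] for m in metrics.values()),
        "total": sum(m["total"] for m in metrics.values()),
    }
    return finalize(summed)


def finalize_then_sample_sketch(metrics, max_samples, seed=0):
    """Finalize on each client first, then keep a sample of per-client values."""
    finalized = {cid: finalize(m) for cid, m in metrics.items()}
    rng = random.Random(seed)
    chosen = rng.sample(sorted(finalized), k=min(max_samples, len(finalized)))
    return {cid: finalized[cid] for cid in chosen}


global_accuracy = sum_then_finalize_sketch(client_metrics)
per_client = finalize_then_sample_sketch(client_metrics, max_samples=2)

print("global accuracy:", global_accuracy)
print("sampled per-client accuracy:", per_client)
```

The global number hides the fact that individual clients can differ widely, which is exactly the information the sampled per-client values expose.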