My problem is similar to the problems mentioned here.

Edit 1:

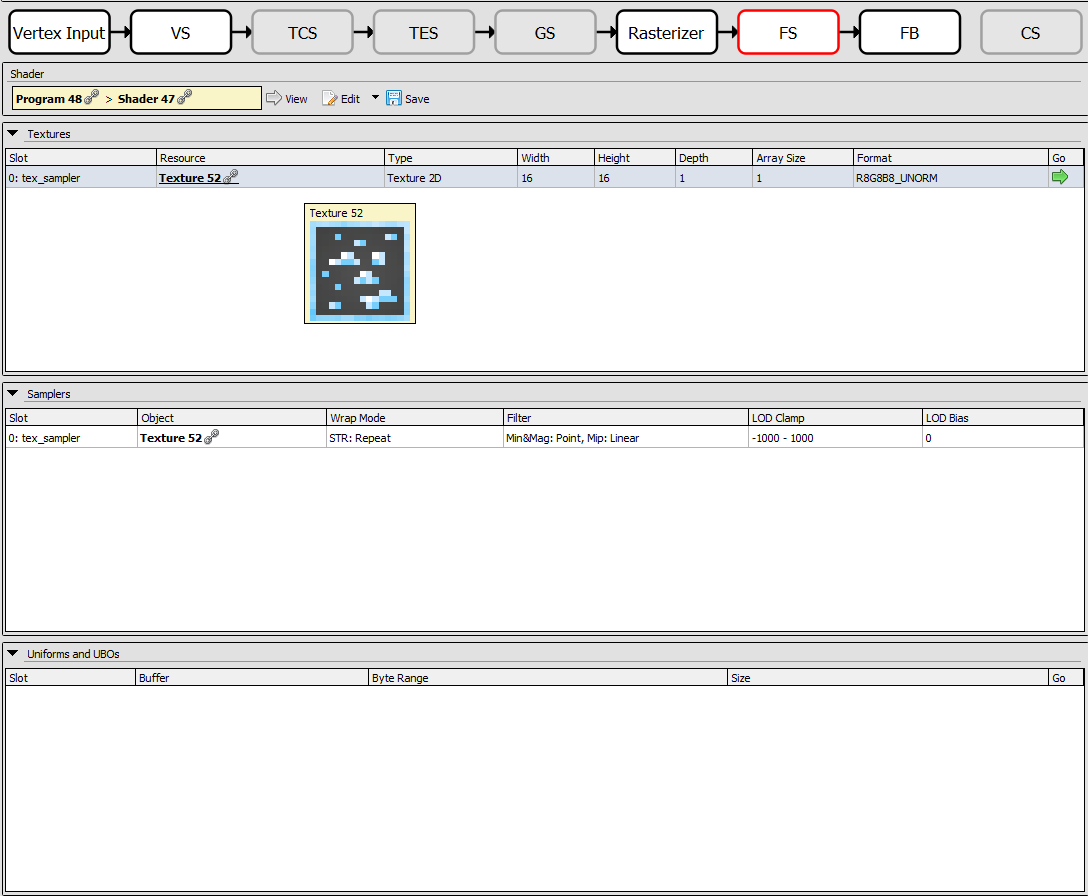

I replaced `FragColor = texture(tex_sampler, TexCoord);// * vec4(result, 1.0);` in the fragment shader with `FragColor = vec4(TexCoord.x * TexCoord.x, TexCoord.y * TexCoord.y, 1.0, 1.0);`. This produces a regular color gradient, which implies that the texture coordinates are being passed to the fragment shader. So something that OpenGL texturing requires seems to be wrong or missing elsewhere.

Answer:

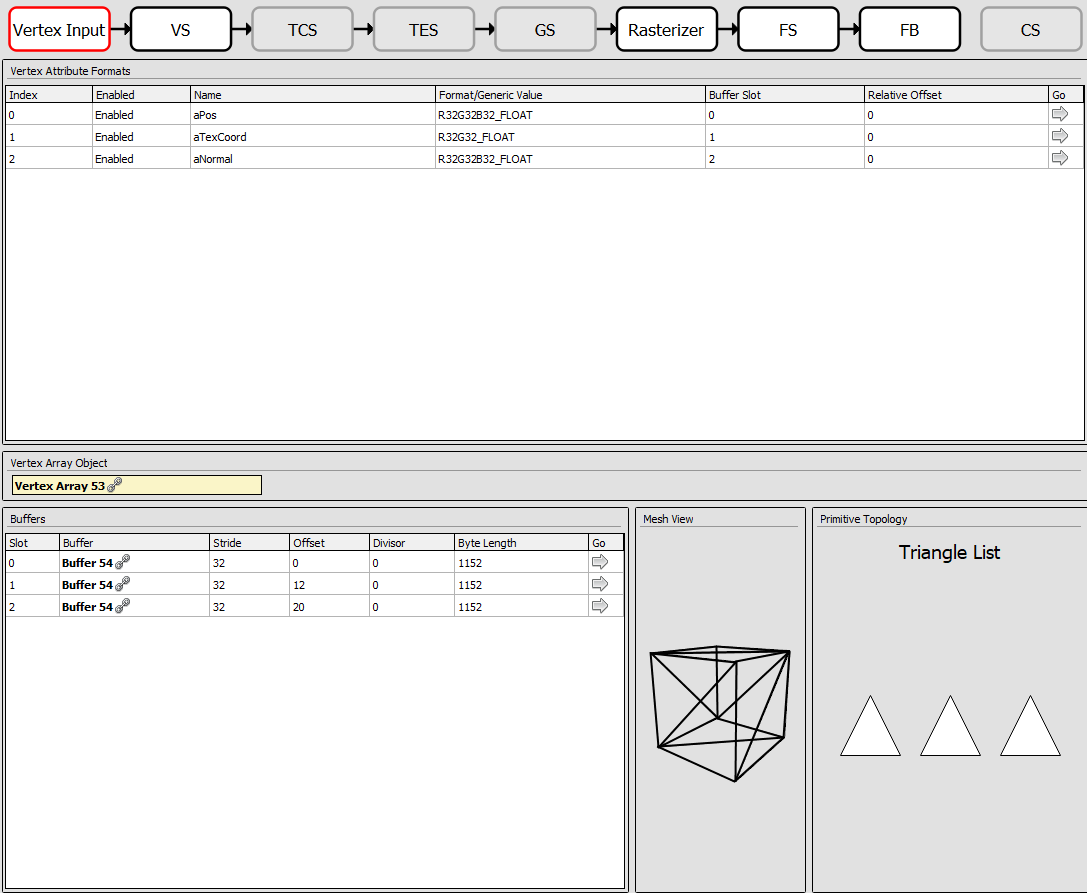

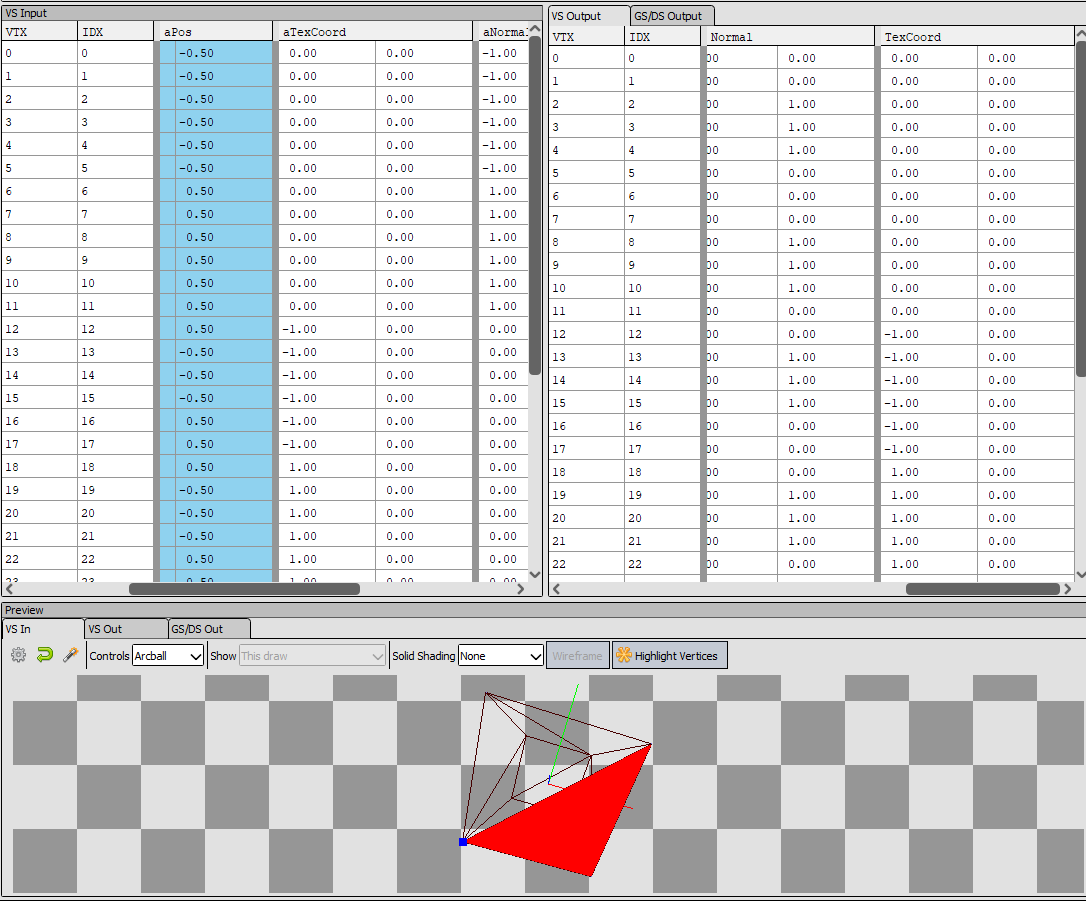

You can clearly see in RenderDoc that the texcoords your shader is receiving are flat-out wrong. If you look closely, you can even see that your `aTexCoord` values are the x and y components of your normals.

The code pasted in this question makes it appear as if your vertex format is posX, posY, posZ, texU, texV, nrmX, nrmY, nrmZ, and this is exactly what you set up with `glVertexAttribPointer`. But you left out an important part of your code: the data you actually write into your buffer. What you have there is:

```cpp
std::vector<Vertex> cubevert;
// cube_vertices holds 8 floats per vertex: position (3), texcoords (2), normal (3)
for (std::size_t i = 0; i < sizeof(cube_vertices) / sizeof(float); i += 8) {
    Vertex vert;
    vert.Position  = glm::vec3(cube_vertices[i], cube_vertices[i + 1], cube_vertices[i + 2]);
    vert.TexCoords = glm::vec2(cube_vertices[i + 3], cube_vertices[i + 4]);
    vert.Normal    = glm::vec3(cube_vertices[i + 5], cube_vertices[i + 6], cube_vertices[i + 7]);
    cubevert.push_back(vert);
}
```

So you actually reorganize this data into a different format, defined as:

```cpp
struct Vertex {
    glm::vec3 Position;
    glm::vec3 Normal;
    glm::vec2 TexCoords;
};
```

This simply means that your shader reads the first two components of the normal as the texcoords, and the third component of the normal together with the two texcoords as the normal.
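To make the mismatch concrete, here is a minimal sketch that needs neither OpenGL nor glm. Assumptions: `Vec3`/`Vec2` are hypothetical stand-ins for `glm::vec3`/`glm::vec2`, tightly packed like glm's default configuration, and `fetch`, `misreadTexCoord` and `misreadNormal` are illustrative helpers that read floats at the byte offsets your attribute setup implies (texcoords after 3 floats, normal after 5 floats, stride of 8 floats).

```cpp
#include <array>
#include <cstddef>  // offsetof, std::size_t
#include <cstring>  // std::memcpy

// Hypothetical stand-ins for glm::vec3 / glm::vec2 (assumed tightly packed floats).
struct Vec3 { float x, y, z; };
struct Vec2 { float x, y; };

// Same member order as the Vertex struct above.
struct Vertex {
    Vec3 Position;
    Vec3 Normal;
    Vec2 TexCoords;
};
static_assert(sizeof(Vertex) == 8 * sizeof(float), "expected 8 packed floats");

// Byte offsets described by the attribute setup: pos(3), tex(2), nrm(3).
constexpr std::size_t kTexOffsetAssumed = 3 * sizeof(float);
constexpr std::size_t kNrmOffsetAssumed = 5 * sizeof(float);

// Reads N consecutive floats starting at a byte offset inside one vertex,
// mimicking how the vertex fetch interprets the buffer.
template <std::size_t N>
std::array<float, N> fetch(const Vertex& v, std::size_t byteOffset) {
    std::array<float, N> out{};
    std::memcpy(out.data(),
                reinterpret_cast<const unsigned char*>(&v) + byteOffset,
                N * sizeof(float));
    return out;
}

// What aTexCoord and the normal attribute actually receive.
std::array<float, 2> misreadTexCoord(const Vertex& v) { return fetch<2>(v, kTexOffsetAssumed); }
std::array<float, 3> misreadNormal(const Vertex& v) { return fetch<3>(v, kNrmOffsetAssumed); }

// Offsets that really match the struct; these are the values to pass as the
// last (pointer) argument of glVertexAttribPointer.
constexpr std::size_t kNormalOffset    = offsetof(Vertex, Normal);     // 12 bytes
constexpr std::size_t kTexCoordsOffset = offsetof(Vertex, TexCoords);  // 24 bytes
```

For a vertex with normal (0, 1, 0) and texcoords (0.25, 0.75), `misreadTexCoord` yields {0, 1} and `misreadNormal` yields {0, 0.25, 0.75}, which matches what RenderDoc shows. A common fix is to make the two layouts agree: either reorder the struct members to Position, TexCoords, Normal, or keep the struct and pass `(void*)offsetof(Vertex, Normal)` and `(void*)offsetof(Vertex, TexCoords)` as the offsets to `glVertexAttribPointer`, with `sizeof(Vertex)` as the stride.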