I'm implementing a snapshot interpolator in a network game.

Here is what I have so far:

```cpp
#include "stdafx.h"

#include <algorithm>  // std::min
#include <chrono>
#include <mutex>
#include <queue>

class Interpolator
{
    using Clock = std::chrono::high_resolution_clock;

public:
    struct Entry
    {
        bool empty;
        Clock::time_point timeStamp;
        XMFLOAT3 position;
        XMFLOAT4 rotation;

        Entry()
            : empty{ true },
              position{},
              rotation{}
        {
        }

        Entry(const XMFLOAT3& pos, const XMFLOAT4& rot)
            : empty{ false }
        {
            timeStamp = Clock::now();  // client-side arrival time
            position = pos;
            rotation = rot;
        }
    };

public:
    Interpolator()
        : mProgress{ 0.0f }
    {
    }

    // Network thread: receives the object's position and rotation from the server.
    void Enqueue(const XMFLOAT3& pos, const XMFLOAT4& rot)
    {
        mEntryQueueMut.lock();
        mEntryQueue.push(Entry(pos, rot));
        mEntryQueueMut.unlock();
    }

    // Game thread: called once per update with the elapsed time dt in seconds.
    void Interpolate(float dt, XMFLOAT3& targetPos, XMFLOAT4& targetRot)
    {
        mEntryQueueMut.lock();

        if (mEntryQueue.empty())
        {
            mEntryQueueMut.unlock();
            return;
        }

        Entry next = mEntryQueue.front();

        if (mPrevEntry.empty)
        {
            // First snapshot: snap to it and use it as the starting point.
            mEntryQueue.pop();
            mEntryQueueMut.unlock();
            mPrevEntry = next;
            targetPos = next.position;
            targetRot = next.rotation;
            return;
        }

        mEntryQueueMut.unlock();

        mProgress += dt;  // accumulate elapsed time since mPrevEntry

        float timeBetween = GetDurationSec(next.timeStamp, mPrevEntry.timeStamp);
        float progress = std::min(1.0f, mProgress / timeBetween);

        targetPos = Vector3::Lerp(mPrevEntry.position, next.position, progress);
        targetRot = Vector4::Slerp(mPrevEntry.rotation, next.rotation, progress);

        if (progress >= 1.0f)
        {
            // Reached the next snapshot: it becomes the new starting point.
            mEntryQueueMut.lock();
            mEntryQueue.pop();
            mEntryQueueMut.unlock();
            mPrevEntry = next;
            mProgress -= timeBetween;
        }
    }

    void Clear()
    {
        mEntryQueueMut.lock();
        std::queue<Entry> temp;
        std::swap(mEntryQueue, temp);
        mEntryQueueMut.unlock();

        mProgress = 0.0f;
        mPrevEntry = Entry{};
    }

private:
    // Seconds from b to a, without truncating to whole milliseconds.
    static float GetDurationSec(const Clock::time_point& a, const Clock::time_point& b)
    {
        return std::chrono::duration<float>(a - b).count();
    }

private:
    std::queue<Entry> mEntryQueue;
    std::mutex mEntryQueueMut;
    Entry mPrevEntry;
    float mProgress;
};
```

The server sends each object's position and rotation in a UDP packet roughly every 33 ms.

The client receives each packet and adds it to the queue.

Then, on every update, I interpolate between the previous state and the next state, using a progress value that accumulates the elapsed time.

But the result jitters a lot. Can you give me some advice on this?

Answer:

There are a few incorrect assumptions you might be making about the UDP packets your program is receiving. These incorrect assumptions include:

1. That you will receive all of the UDP packets that the server sent to you

2. That you will receive the UDP packets in the order they were sent

3. That you will receive one UDP packet every ~33 ms

4. That the server's clock and the client's clock will advance at the exact same rate

Those are all behaviors that an ideal network would provide, but real life is far from ideal: some of the UDP packets the server sends you might get dropped, delayed by an arbitrary amount of time, or arrive out of order, and the two machines' clocks will slowly drift relative to each other. Ideally, your client should be smart enough to handle those problems gracefully.

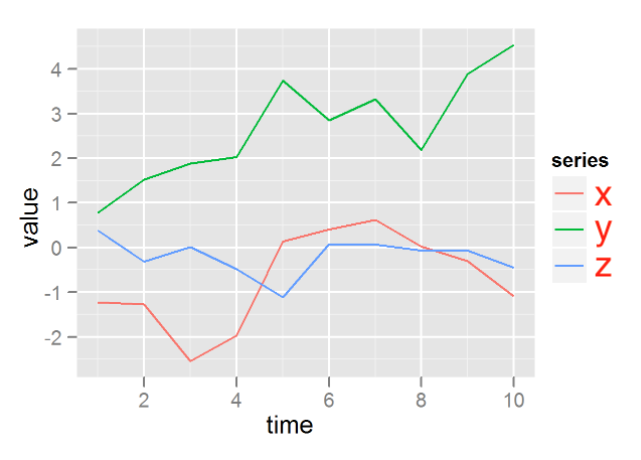

One way to deal with UDP flakiness is to think of the UDP packets the server is sending you as data-points along a timeline, something like this:

Each time you receive a packet from the server, it (hopefully) contains a timestamp as well as some data-values, and you can add that data to a local data-structure to update the time-series. Note that the example graphic shows the X, Y, and Z values at any specific time, in red, green, and blue, respectively.

So the first thing to do is have the client insert each data-packet into the data-structure as it receives it, and you'll also probably want to remove the oldest data-point from the data structure whenever it hits some maximum size, just to avoid excessive memory usage. Ideally the data-structure will be something like a std::map<Clock::time_point, Entry> that is automatically sorted by key, so that you can easily iterate back and forth across the timeline.
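A minimal sketch of that bounded, sorted timeline follows. It assumes each packet carries the server's send time as a double number of seconds (a hypothetical wire format; a Clock::time_point key, as suggested above, would work the same way). Vec3, ServerTime and Timeline are illustrative names, not part of the question's code:

```cpp
#include <cassert>
#include <cstddef>
#include <map>

// Stand-in for XMFLOAT3 so the sketch builds without DirectXMath.
struct Vec3 { float x, y, z; };

// Hypothetical: the server's send time in seconds, read from the packet itself
// (not Clock::now() at arrival, which is what the question's Entry records).
using ServerTime = double;

class Timeline
{
public:
    explicit Timeline(std::size_t maxSize) : mMaxSize(maxSize)
    {
        assert(maxSize > 0);
    }

    // std::map keeps samples sorted by server time, so out-of-order packets
    // land in the right place; a duplicate timestamp overwrites the old sample.
    void Insert(ServerTime t, const Vec3& pos)
    {
        mSamples[t] = pos;
        while (mSamples.size() > mMaxSize)
            mSamples.erase(mSamples.begin());  // drop the oldest sample
    }

    const std::map<ServerTime, Vec3>& Samples() const { return mSamples; }

private:
    std::size_t mMaxSize;
    std::map<ServerTime, Vec3> mSamples;
};
```

Because std::map orders entries by key, a late packet still lands in its correct place on the timeline. If the timeline is already full and the late packet is older than everything in it, it is evicted immediately, which is usually what you want.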

The other part of the solution is using this data-structure to look up what the X/Y/Z/whatever values "should be" at any given time_point t.

If the t you want to look up data for is explicitly present in the data structure (e.g. 2 or 4 in the example image), that's trivial; just do a find() on that time_point and if it succeeds, use the results verbatim.

On the other hand, if t is within the range of known time_points in the data structure but isn't one of them (e.g. 4.27 or 8.5 in the attached image), then you'll need to do a bit of linear interpolation to synthesize the values at that time. You'll need to find the two time_points on either side of t, i.e. the largest time_point that is smaller than t and the one after that. With a std::map you don't have to iterate to do that: upper_bound(t) returns the first time_point later than t, and the element just before it is the other one.

Once you have those two time_points (call them ta and tb), you can calculate t's percentage of the distance between them:

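```cpp
// t, ta and tb are Clock::time_points. Convert the differences to float seconds
// first: with the usual integer-based clock durations, dividing one duration by
// another is integer division and would yield 0 for every t strictly between ta and tb.
const float percent = std::chrono::duration<float>(t - ta) / std::chrono::duration<float>(tb - ta);
```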

... and then use that percentage as your argument to Lerp() or Slerp() to interpolate X/Y/Z values for t.
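The exact-hit and in-between cases can be combined into one lookup, sketched below assuming the timeline is keyed by the server's send time in seconds (double) and using Vec3 as a stand-in for XMFLOAT3. Lerp is a hand-written component-wise version standing in for the question's Vector3::Lerp, SampleAt is a hypothetical name, and the bracketing samples are found with upper_bound. Outside the known range it simply clamps to the nearest sample:

```cpp
#include <cassert>
#include <iterator>
#include <map>

// Stand-in for XMFLOAT3.
struct Vec3 { float x, y, z; };

// Component-wise linear interpolation (stand-in for the question's Vector3::Lerp).
Vec3 Lerp(const Vec3& a, const Vec3& b, float p)
{
    return { a.x + (b.x - a.x) * p, a.y + (b.y - a.y) * p, a.z + (b.z - a.z) * p };
}

// Hypothetical helper: value at time t, from samples keyed by server time in seconds.
// Exact hit: returned verbatim. Between two samples: interpolated.
// Outside the known range: clamped to the nearest sample.
Vec3 SampleAt(const std::map<double, Vec3>& samples, double t)
{
    assert(!samples.empty());
    auto after = samples.upper_bound(t);   // first sample strictly later than t
    if (after == samples.begin())
        return after->second;              // t is before the oldest sample
    auto before = std::prev(after);        // last sample at or before t
    if (after == samples.end() || before->first == t)
        return before->second;             // past the newest sample, or exact hit
    const double ta = before->first;
    const double tb = after->first;
    const float percent = static_cast<float>((t - ta) / (tb - ta));
    return Lerp(before->second, after->second, percent);
}
```

Rotations would follow the same pattern, with a quaternion Slerp in place of Lerp.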

Of course, there is one more case you might have to handle: your t value might be smaller than the smallest time_point value in the graph, or larger than the largest one. That case is a bit problematic, since now you have only one "closest" time_point to deal with, and that's not enough to do useful linear interpolation. You could just use the values associated with that closest time_point verbatim, or you could try to use linear extrapolation to come up with appropriate values based on the slopes of the nearest line segments, but either approach is just a wild guess and is likely to be unsatisfactory.
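If you do want to try extrapolation past the newest sample, one way to keep the guess from getting too wild is to continue along the slope of the last segment for at most a fixed amount of time and then hold. This is a hedged sketch rather than a recommendation; ExtrapolatePastEnd and its maxAhead parameter are hypothetical, keys are assumed to be server time in seconds, and Vec3 stands in for XMFLOAT3:

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <map>

// Stand-in for XMFLOAT3.
struct Vec3 { float x, y, z; };

// Hypothetical helper: predict the value at a time t later than the newest sample
// by continuing along the slope of the last segment, but never by more than
// maxAhead seconds. If t is not past the newest sample, or there is only one
// sample, it falls back to the newest sample.
Vec3 ExtrapolatePastEnd(const std::map<double, Vec3>& samples, double t, double maxAhead)
{
    assert(!samples.empty() && maxAhead >= 0.0);
    auto last = std::prev(samples.end());
    if (samples.size() < 2 || t <= last->first)
        return last->second;

    auto prev = std::prev(last);
    const double ahead = std::min(t - last->first, maxAhead);  // cap the guess
    const float k = static_cast<float>(ahead / (last->first - prev->first));

    const Vec3& a = prev->second;
    const Vec3& b = last->second;
    return { b.x + (b.x - a.x) * k, b.y + (b.y - a.y) * k, b.z + (b.z - a.z) * k };
}
```

With maxAhead set to roughly one packet interval, a single late packet produces a short continuation instead of an unbounded one; a smaller value makes the object hold still sooner.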

So that leads to the final part of the problem: how should your client decide what value of t to use for its lookup into the graph on each rendering frame? The naive approach would be to add a constant 33 ms (or whatever your game's nominal frame duration is) to t on every graphics tick, but this will eventually cause problems: the client's local clock and the server's clock are not synchronized with each other, so even if they start out at roughly the same value, they will eventually "drift apart".

A slightly better approach might be to always set t equal to the largest time_point currently present in the graph, but that will look jittery: on some graphics frames a new UDP packet will have been received only a millisecond ago, whereas on others the frame might be rendered just before the next UDP packet comes in, and would thus be rendering data that is about 30 ms out of date.

Ideally you want your t to always move monotonically (and as smoothly as possible) toward the right edge of the graph, but never actually arrive at the edge of the graph, which is a bit tricky since you can't 100% reliably predict when the next UDP packet(s) will come in or what their timestamps will be.

However, you could fake it by doing something like this (pseudocode) on each graphics-update-tick:

```
const float pct = 0.75f;  // vary this value to taste
t = (t * pct) + (largest_time_point_in_graph * (1.0f - pct));
```

Assuming that your UDP packets are reasonably well-behaved, that would keep t near (but not at) the right-hand side of the graph at all times. That might or might not be good enough to give you a usable result.
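Here is the same smoothing step as a small function, assuming t and the newest timestamp are both plain double seconds of server time (a std::chrono::time_point can't be multiplied by a float, which is one reason the line above is pseudocode). AdvancePlaybackTime is a hypothetical name:

```cpp
#include <cassert>

// Hypothetical helper: exponential smoothing of the playback time t toward the
// newest server timestamp. With pct = 0.75, each call keeps 75% of the old t and
// moves 25% of the way toward newestTime, so t approaches it geometrically.
double AdvancePlaybackTime(double t, double newestTime, double pct = 0.75)
{
    assert(pct >= 0.0 && pct < 1.0);  // pct == 1 would freeze t; pct < 0 would overshoot
    return t * pct + newestTime * (1.0 - pct);
}
```

With pct = 0.75, each call closes 25% of the remaining gap, so after 10 frames toward a fixed target t has covered about 94% of it. Because the step is applied per frame rather than per second, the effective smoothing also depends on the frame rate.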

If that's not good enough, you could go whole-hog and reconstruct the server's clock on your client, by recording the timestamp of your client's local clock at the moment you receive each UDP packet, and correlating those local-clock-times against the server's timestamps that are embedded in the UDP packets. Then you can use that data to calculate an estimate of what the server's clock-value (presumably) is at any given client-clock-time, and use that to compute a reasonable value of t to use based on the current client-clock-time (minus some small constant to account for network propagation time). If that's of interest, let me know and I can provide more details about how to do it.
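One possible way to do that correlation (a sketch of a common technique, not necessarily what is meant above) is to track serverTime - clientReceiveTime for recent packets. Each such value equals the true clock offset minus that packet's network delay, so the largest value in a recent window comes from the least-delayed packet and is the best available offset estimate; keeping only a window lets the estimate follow slow drift. The estimate runs behind the true server clock by roughly the smallest one-way delay seen. ServerClockEstimator, the window size, and the double-seconds times are assumptions:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <deque>

// Hypothetical sketch: estimate the server's clock from the client's clock.
// All times are in seconds (double). For each packet, serverTime is the send
// time stamped by the server and clientTime is the local clock at arrival, so
//   serverTime - clientTime = (serverClock - clientClock) - networkDelay.
class ServerClockEstimator
{
public:
    explicit ServerClockEstimator(std::size_t window) : mWindow(window)
    {
        assert(window > 0);
    }

    void OnPacket(double serverTime, double clientTime)
    {
        mOffsets.push_back(serverTime - clientTime);
        if (mOffsets.size() > mWindow)
            mOffsets.pop_front();  // forget old samples so slow drift is tracked
    }

    // Estimated server time "now", given the current client clock reading.
    // Uses the largest offset in the window (the least-delayed packet).
    double ServerNow(double clientNow) const
    {
        assert(!mOffsets.empty());
        return clientNow + *std::max_element(mOffsets.begin(), mOffsets.end());
    }

private:
    std::size_t mWindow;
    std::deque<double> mOffsets;
};
```

Each frame you could then use something like t = estimator.ServerNow(clientNow) - margin, where margin is a hypothetical safety delay (for example a couple of packet intervals) chosen so that t usually stays inside the graph.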