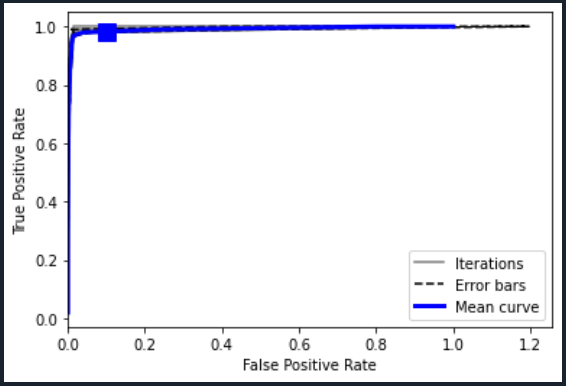

I'm making ROC curves for an imbalanced dataset, but they do not look like normal ROC curves at all. They look more like step functions (see the image provided). From all the sources I can find, this should indicate that my machine learning algorithm is effective, but I'm still getting a lot of false positives. Why is my ROC curve so nicely shaped if my algorithm is not effective? Is there a better way to measure how 'good' this algorithm is? Thanks!

CodePudding user response:

ROC curves are often not the best way to evaluate a classifier on an imbalanced dataset. A 'good' ROC curve only means a high true positive rate at a low false positive rate, and the false positive rate is measured against the majority (negative) class. When that class is very large, even a small false positive rate can mean many false positives in absolute terms, possibly more than there are true positives (as you touched on).
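To make that concrete, here is a small arithmetic sketch. The class sizes and rates are made-up numbers for illustration, not taken from your data: an operating point that looks excellent on a ROC curve can still produce mostly false alarms.

```python
# Hypothetical counts and rates, chosen only for illustration.
n_pos = 100       # minority (positive) class size
n_neg = 10_000    # majority (negative) class size
tpr = 0.90        # assumed true positive rate at some threshold
fpr = 0.05        # assumed false positive rate at the same threshold

tp = round(tpr * n_pos)    # positives correctly flagged
fp = round(fpr * n_neg)    # negatives incorrectly flagged
precision = tp / (tp + fp)  # fraction of flagged samples that are truly positive

print(f"TP={tp}, FP={fp}, precision={precision:.3f}")
# prints: TP=90, FP=500, precision=0.153
```

With these assumed numbers, the point (FPR 0.05, TPR 0.90) sits near the top-left corner of the ROC plot, yet only about 15% of the predicted positives are correct.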

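Instead, try a precision-recall (PR) curve. PR curves are usually more informative for imbalanced datasets because precision is computed only over the predicted positives, so it drops as soon as false positives pile up, no matter how many true negatives there are. See this link: PR Curves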
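As a rough sketch of how the points on a PR curve come about, the function below sweeps the threshold down through the sorted scores and records recall and precision at each step. The function name `pr_points` and the toy labels and scores are hypothetical, and the sketch assumes all scores are distinct (no tie handling).

```python
def pr_points(labels, scores):
    """Return (recall, precision) pairs, one per threshold, highest score first.

    Assumes binary labels (1 = positive) and distinct scores.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    total_pos = sum(labels)
    tp = fp = 0
    points = []
    for _, label in ranked:
        # Lowering the threshold past this sample marks it as predicted positive.
        if label == 1:
            tp += 1
        else:
            fp += 1
        points.append((tp / total_pos, tp / (tp + fp)))
    return points


# Toy imbalanced data: 2 positives, 8 negatives (illustrative values only).
labels = [1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]

points = pr_points(labels, scores)
for recall, precision in points:
    print(f"recall={recall:.2f}  precision={precision:.2f}")
```

In practice you would probably not hand-roll this: scikit-learn provides `precision_recall_curve` and `average_precision_score` in `sklearn.metrics`, which also handle tied scores.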