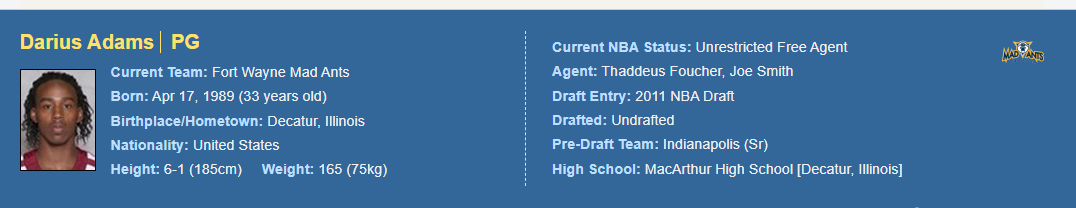

I've struggled with this for days and I'm not sure what the issue could be. I'm trying to extract the profile box data (see the picture) from each player link. Going through the inspector, I thought I could pull the p tags to do so.

I'm new to this and trying to understand, but here's what I have thus far:

Code that (somewhat) successfully pulls the info for ONE link:

    import requests
    from bs4 import BeautifulSoup

    # getting html
    url = 'https://basketball.realgm.com/player/Darius-Adams/Summary/28720'
    req = requests.get(url)
    soup = BeautifulSoup(req.text, 'html.parser')

    container = soup.find('div', attrs={'class', 'main-container'})
    playerinfo = container.find_all('p')
    print(playerinfo)

I also have code that pulls all of the href values from multiple pages:

    from bs4 import BeautifulSoup
    import requests

    def get_links(url):
        links = []
        website = requests.get(url)
        website_text = website.text
        soup = BeautifulSoup(website_text)
        for link in soup.find_all('a'):
            links.append(link.get('href'))
        for link in links:
            print(link)
        print(len(links))

    get_links('https://basketball.realgm.com/dleague/players/2022')
    get_links('https://basketball.realgm.com/dleague/players/2021')
    get_links('https://basketball.realgm.com/dleague/players/2020')

My goal is to combine these two into one script that pulls all of the p tags from multiple URLs. I've been trying to do it, and I'm really not sure why this isn't working:

    from bs4 import BeautifulSoup
    import requests

    def get_profile(url):
        profiles = []
        req = requests.get(url)
        soup = BeautifulSoup(req.text, 'html.parser')
        container = soup.find('div', attrs={'class', 'main-container'})
        for profile in container.find_all('a'):
            profiles.append(profile.get('p'))
        for profile in profiles:
            print(profile)

    get_profile('https://basketball.realgm.com/player/Darius-Adams/Summary/28720')
    get_profile('https://basketball.realgm.com/player/Marial-Shayok/Summary/26697')

Again, I'm really new to web scraping with Python, but any advice would be greatly appreciated. Ultimately, my end goal is a tool that can scrape this data cleanly, all at once (player name, current team, born, birthplace, etc.). Maybe I'm doing it entirely wrong, but any guidance is welcome!

CodePudding user response:

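You need to combine your two scripts and make a request for each player. Note that `profile.get('p')` cannot work: on a tag, `.get()` reads an attribute, so asking an `<a>` tag for a `p` attribute returns `None`. Try the following approach instead. It searches the listing page for `<td>` tags that have the `data-th="Player"` attribute, follows each player link, and reads the `<p>` tags inside the `profile-box` div: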

    import requests
    from bs4 import BeautifulSoup

    def get_links(url):
        data = []
        req_url = requests.get(url)
        soup = BeautifulSoup(req_url.content, "html.parser")

        # Each player row has a <td data-th="Player"> cell containing the profile link
        for td in soup.find_all('td', {'data-th' : 'Player'}):
            a_tag = td.a
            name = a_tag.text
            player_url = a_tag['href']
            print(f"Getting {name}")

            # Fetch the player's own page and locate the profile box
            req_player_url = requests.get(f"https://basketball.realgm.com{player_url}")
            soup_player = BeautifulSoup(req_player_url.content, "html.parser")
            div_profile_box = soup_player.find("div", class_="profile-box")

            row = {"Name" : name, "URL" : player_url}

            # Skip the details if the page has no profile box
            if div_profile_box is not None:
                for p in div_profile_box.find_all("p"):
                    try:
                        # Split "Label: value" on the first colon only
                        key, value = p.get_text(strip=True).split(':', 1)
                        row[key.strip()] = value.strip()
                    except ValueError:     # not all entries have values
                        pass

            data.append(row)

        return data

    urls = [
        'https://basketball.realgm.com/dleague/players/2022',
        'https://basketball.realgm.com/dleague/players/2021',
        'https://basketball.realgm.com/dleague/players/2020',
    ]

    for url in urls:
        print(f"Getting: {url}")
        data = get_links(url)

        for entry in data:
            print(entry)
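
If you want the result in a cleaner form than printed dictionaries, one option is to write the rows to CSV. The rows will not all have the same keys, because not every player page lists every field, so the column list has to be collected first. Below is a minimal sketch using only the standard library. The helper name `rows_to_csv` and the sample rows are made up for illustration and are not real scraped data:

```python
import csv
import io

def rows_to_csv(rows, out):
    """Write a list of dicts with differing keys to the file-like `out` as CSV."""
    # Collect column names in first-seen order across all rows.
    fieldnames = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)

    # restval fills in an empty cell when a row lacks a column.
    writer = csv.DictWriter(out, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return fieldnames

# Hypothetical rows shaped like the dictionaries a scraper might build.
sample = [
    {"Name": "Player A", "URL": "/player/a", "Born": "Jan 1, 1990"},
    {"Name": "Player B", "URL": "/player/b", "Current Team": "Team X"},
]

buffer = io.StringIO()
columns = rows_to_csv(sample, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write to disk instead of an in-memory buffer, pass a file opened with `open("players.csv", "w", newline="", encoding="utf-8")` (the filename is just an example).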