import numpy as np
import torchvision
from PIL import Image

# creating a flower dataset
f_ds = torchvision.datasets.ImageFolder(data_path)

# a transform to convert images to tensors
to_tensor = torchvision.transforms.ToTensor()

for idx, (img, label) in enumerate(f_ds):
    if idx == 2100:
        # random PIL image
        display(img)
        print(img.size, img.mode)  # W * H

        # convert the same image with to_tensor (a torchvision transform that converts images to PyTorch tensors)
        img_tensor = to_tensor(img)
        print("After converting to torch tensor: ", img_tensor.shape)
        C, H, W = img_tensor.shape

        # the same image reshaped to match matplotlib
        reshaped_img_tensor = img_tensor.reshape(H, W, C)  # i think the problem is here...
        print('After reshaping img_tensor: ', reshaped_img_tensor.shape)

        new_arr = (reshaped_img_tensor.numpy() * 255.0).astype(np.uint8)
        print("dtype:", new_arr.dtype, "min:", new_arr.min(), "max:", new_arr.max(), "shape:", new_arr.shape)
        display(Image.fromarray(new_arr, 'RGB'))
        break

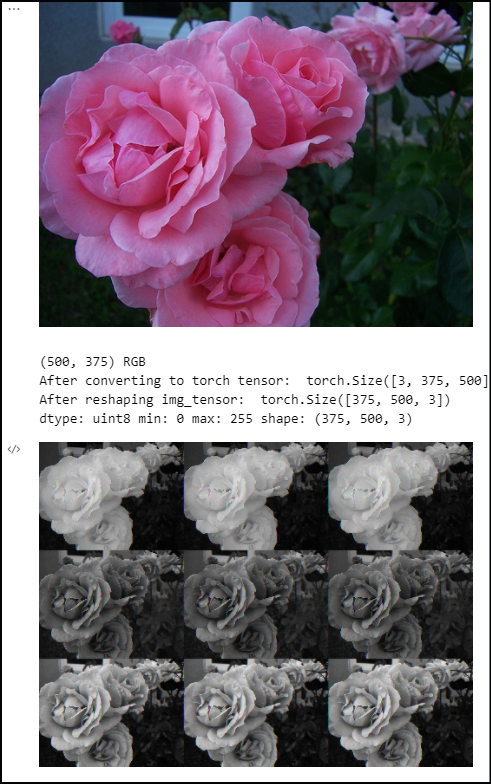

After reshaping, the same image array is scrambled. Instead of a single 3-channel image, it shows a 3*3 grid of gray (single-channel-looking) copies of the image. What am I missing here?

Please help me solve this.

Thank you :D

CodePudding user response:

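ToTensor permutes the array and returns a tensor in the shape [C, H, W]. Using reshape to swap the order of dimensions is wrong: reshape keeps the elements in the same flat order and only regroups them, so values from different channels and pixels end up interleaved in the image.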
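A small sketch of why this happens, using a made-up 2x2 image (the tensor values and variable names are illustrative, not taken from the question). Each channel is filled with its own index, so it is easy to see which channel a value came from:

```python
import torch

# Hypothetical 3-channel, 2x2 "image" in [C, H, W] layout:
# channel 0 is all 0s, channel 1 is all 1s, channel 2 is all 2s.
chw = torch.stack([torch.full((2, 2), float(c)) for c in range(3)])
C, H, W = chw.shape

# reshape only regroups the flat data, so channel values get mixed across pixels.
reshaped = chw.reshape(H, W, C)

# permute reorders the axes, so each pixel keeps one value from each channel.
permuted = chw.permute(1, 2, 0)

print(reshaped[0, 1])  # second "pixel" after reshape: values from channels 0 and 1 only
print(permuted[0, 1])  # second pixel after permute: one value per channel
```

Both results have shape [H, W, C], which is why the shape printout in the question looks correct even though the pixel data is not.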

I should have used tensor.permute to change the order of the dimensions, not reshape.
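For the display step in the question, the corrected conversion could be sketched roughly as below. The helper name chw_to_hwc_uint8 and the random stand-in image are assumptions for illustration; the rounding before the uint8 cast is also my addition, not something the original code did.

```python
import numpy as np
import torch
from PIL import Image


def chw_to_hwc_uint8(img_tensor: torch.Tensor) -> np.ndarray:
    """Hypothetical helper: float [C, H, W] tensor in [0, 1] -> uint8 [H, W, C] array."""
    hwc = img_tensor.permute(1, 2, 0)  # move channels last without scrambling pixels
    arr = (hwc.numpy() * 255.0).round().astype(np.uint8)
    return np.ascontiguousarray(arr)  # plain C-ordered buffer for PIL / matplotlib


# Stand-in for to_tensor(img): a random 3-channel image, 4 pixels high and 5 wide.
img_tensor = torch.rand(3, 4, 5)
arr = chw_to_hwc_uint8(img_tensor)
pil_img = Image.fromarray(arr)  # uint8 (H, W, 3) arrays are read as RGB

print(arr.shape, pil_img.size)  # array shape is (H, W, C); PIL size is (W, H)
```

In the original loop, this would replace the reshape line and the manual uint8 conversion, with the result passed to display.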