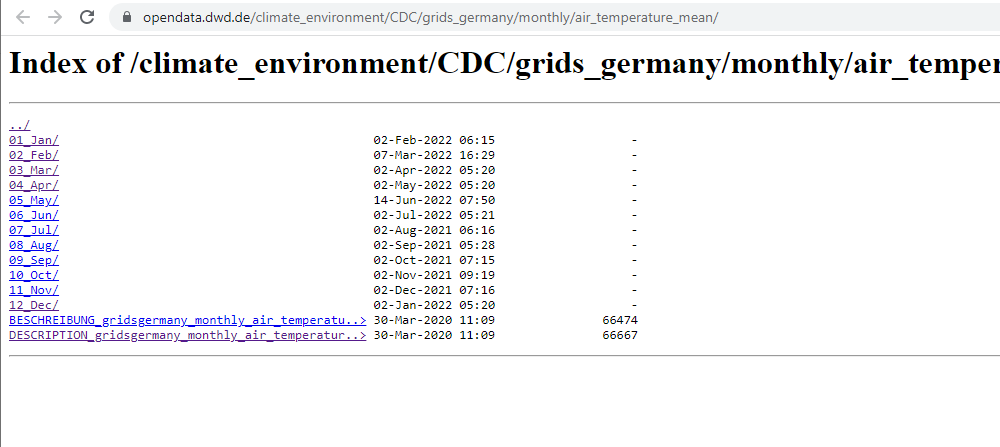

I need to download climate datasets at monthly resolution for several years. The data is available here:

I can download individual files by clicking on them and saving them. But how can I download several datasets at once (for example, filtered to specific years), or simply download all of the files within a directory? I am sure there must be an automated way, using an FTP connection or some R code (in RStudio), but I can't find any relevant suggestions. I am a Windows 10 user. Where should I start?

CodePudding user response:

You can use the rvest package to scrape the links from the directory page, then use those links to download the files for a specific month, as follows:

library(rvest)

library(stringr)

page_link <- "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/"

month_name <- "01_Jan"

# you can set month_name as "05_May" to get the data from 05_May

# getting the html page for 01_Jan folder

page <- read_html(paste0(page_link, month_name, "/"))

# getting the link text

link_text <- page %>%

html_elements("a") %>%

html_text()

# creating links

links <- paste0(page_link, month_name, "/", link_text)[-1]

# extracting the numbers for filename

filenames <- stringr::str_extract(pattern = "\\d+", string = link_text[-1])

# creating a directory

dir.create(month_name)

# raising the download timeout to at least 600 seconds

options(timeout = max(600, getOption("timeout")))

# downloading the files (mode = "wb" requests a binary transfer, which matters on Windows)

for (i in seq_along(links)) {

download.file(links[i], paste0(month_name, "/", filenames[i], ".asc.gz"), mode = "wb")

}
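
As a possible variation on the loop above, here is a minimal sketch that keeps the server's own file names (via `basename()`) and skips files that are already on disk, so an interrupted run can be resumed. The helper names `local_paths` and `download_missing` are made up for illustration and are not part of any package.

```r
# Hypothetical helper: map each link to a local path inside `dest_dir`,
# keeping the file name used on the server.
local_paths <- function(links, dest_dir) {
  file.path(dest_dir, basename(links))
}

# Hypothetical helper: download only the files that are not on disk yet.
download_missing <- function(links, dest_dir) {
  # create the target folder if needed; stay quiet if it already exists
  dir.create(dest_dir, showWarnings = FALSE, recursive = TRUE)
  dest <- local_paths(links, dest_dir)
  todo <- which(!file.exists(dest))
  for (i in todo) {
    # binary mode, so the .gz files are not corrupted on Windows
    download.file(links[i], dest[i], mode = "wb")
  }
  invisible(dest)
}
```

With the objects from the code above, the call would be `download_missing(links, month_name)`.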

CodePudding user response:

Try this:

library(rvest)

baseurl <- "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/"

res <- read_html(baseurl)

urls1 <- html_nodes(res, "a") %>%

html_attr("href") %>%

Filter(function(z) grepl("^[[:alnum:]]", z), .) %>%

paste0(baseurl, .)

This gets us the first level:

urls1

# [1] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/"

# [2] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/02_Feb/"

# [3] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/03_Mar/"

# [4] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/04_Apr/"

# [5] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/05_May/"

# [6] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/06_Jun/"

# [7] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/07_Jul/"

# [8] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/08_Aug/"

# [9] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/09_Sep/"

# [10] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/10_Oct/"

# [11] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/11_Nov/"

# [12] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/12_Dec/"

# [13] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/BESCHREIBUNG_gridsgermany_monthly_air_temperature_mean_de.pdf"

# [14] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/DESCRIPTION_gridsgermany_monthly_air_temperature_mean_en.pdf"

As you can see, some are files, some are directories. We can iterate over these URLs to do the same thing:

urls2 <- lapply(grep("/$", urls1, value = TRUE), function(url) {

res2 <- read_html(url)

html_nodes(res2, "a") %>%

html_attr("href") %>%

Filter(function(z) grepl("^[[:alnum:]]", z), .) %>%

paste0(url, .)

})

Each of those folders contains 141 or 142 files:

lengths(urls2)

# [1] 142 142 142 142 142 142 141 141 141 141 141 141

### confirm no more directories

sapply(urls2, function(z) any(grepl("/$", z)))

# [1] FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE FALSE

(It would not be difficult to turn this into a recursive search rather than a fixed two-level search.)
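
As an aside, a recursive version might look roughly like the sketch below. It assumes every href on a listing page is relative and that directories end in `/`, which holds for the listings shown above but is not guaranteed elsewhere. The names `list_hrefs` and `crawl` are hypothetical, and the `lister` argument exists only so the page-reading step can be swapped out.

```r
# Hypothetical helper: return the hrefs found on one directory-listing page.
# Assumes relative links; entries such as "../" are dropped.
list_hrefs <- function(url) {
  page <- rvest::read_html(url)
  hrefs <- rvest::html_attr(rvest::html_elements(page, "a"), "href")
  hrefs[grepl("^[[:alnum:]]", hrefs)]
}

# Recursively collect all file URLs below `url`.
crawl <- function(url, lister = list_hrefs) {
  hrefs <- lister(url)
  # paste0(url, character(0)) would return `url` itself, so stop early
  if (length(hrefs) == 0) return(character(0))
  full <- paste0(url, hrefs)
  is_dir <- grepl("/$", full)
  # files at this level first, then everything found inside subdirectories
  c(full[!is_dir], unlist(lapply(full[is_dir], crawl, lister = lister)))
}
```

Under those assumptions, `crawl(baseurl)` should give the same kind of vector as the two-level approach, with files at each level listed before the contents of its subdirectories.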

The files in urls2 can all be combined with the entries of urls1 that were files (the two .pdf files):

allurls <- c(grep("/$", urls1, value = TRUE, invert = TRUE), unlist(urls2))

length(allurls)

# [1] 1700

head(allurls)

# [1] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/BESCHREIBUNG_gridsgermany_monthly_air_temperature_mean_de.pdf"

# [2] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/DESCRIPTION_gridsgermany_monthly_air_temperature_mean_en.pdf"

# [3] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/grids_germany_monthly_air_temp_mean_188101.asc.gz"

# [4] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/grids_germany_monthly_air_temp_mean_188201.asc.gz"

# [5] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/grids_germany_monthly_air_temp_mean_188301.asc.gz"

# [6] "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/grids_germany_monthly_air_temp_mean_188401.asc.gz"

And now you can filter as desired and download those that are needed:

needthese <- allurls[c(3,5)]

ign <- mapply(download.file, needthese, basename(needthese))

# trying URL 'https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/grids_germany_monthly_air_temp_mean_188101.asc.gz'

# Content type 'application/octet-stream' length 221215 bytes (216 KB)

# downloaded 216 KB

# trying URL 'https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/01_Jan/grids_germany_monthly_air_temp_mean_188301.asc.gz'

# Content type 'application/octet-stream' length 217413 bytes (212 KB)

# downloaded 212 KB

file.info(list.files(pattern = "gz$"))

# size isdir mode mtime ctime atime exe

# grids_germany_monthly_air_temp_mean_188101.asc.gz 221215 FALSE 666 2022-07-06 09:17:21 2022-07-06 09:17:19 2022-07-06 09:17:52 no

# grids_germany_monthly_air_temp_mean_188301.asc.gz 217413 FALSE 666 2022-07-06 09:17:22 2022-07-06 09:17:21 2022-07-06 09:17:52 no
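
To filter by year instead of by position, one option is to read the year out of the file name. The sketch below assumes the file names end in `_YYYYMM.asc.gz`, as they do in the listing above. The helper name `filter_years` is made up for illustration.

```r
# Hypothetical helper: keep only URLs whose file name ends in _YYYYMM.asc.gz
# and whose YYYY part is one of `years`.
filter_years <- function(urls, years) {
  # pull out the six digits; non-matching names are returned unchanged by sub()
  yyyymm <- sub(".*_(\\d{6})\\.asc\\.gz$", "\\1", basename(urls))
  is_grid <- grepl("^\\d{6}$", yyyymm)
  # non-grid entries (e.g. the PDFs) become NA here and are dropped below
  yr <- suppressWarnings(as.integer(substr(yyyymm, 1, 4)))
  urls[is_grid & yr %in% years]
}

# Small demonstration using URLs from the listing above
base <- "https://opendata.dwd.de/climate_environment/CDC/grids_germany/monthly/air_temperature_mean/"
demo <- paste0(base, c(
  "DESCRIPTION_gridsgermany_monthly_air_temperature_mean_en.pdf",
  "01_Jan/grids_germany_monthly_air_temp_mean_188101.asc.gz",
  "01_Jan/grids_germany_monthly_air_temp_mean_188201.asc.gz",
  "01_Jan/grids_germany_monthly_air_temp_mean_188301.asc.gz"
))

basename(filter_years(demo, 1882:1883))
# [1] "grids_germany_monthly_air_temp_mean_188201.asc.gz"
# [2] "grids_germany_monthly_air_temp_mean_188301.asc.gz"
```

The result of `filter_years(allurls, 1882:1883)` can then be passed to the same `mapply(download.file, ...)` call used above.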