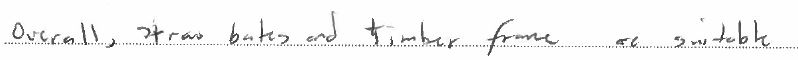

I am using the script below to try to separate handwritten text from the lines the text was written on. For now I am only trying to select the lines.

This works well when the lines are solid, but it gets tricky when the lines are a string of dots. To get around this I tried using dilate to turn the dots into solid lines, but dilate also thickens the text, which then gets picked up as horizontal lines. I can tweak the kernel for each image, but that is not a workable solution when dealing with thousands of images.

Can someone suggest how I might make this work? Is this the best approach, or is there a better way to select these lines?

import cv2

file_path = r'image.jpg'

image = cv2.imread(file_path)

# resize image if it is bigger than the screen size

print('before Dimensions : ', image.shape)

if image.shape[0] > 1200:

    image = cv2.resize(image, None, fx=0.2, fy=0.2)

print('after Dimensions : ', image.shape)

result = image.copy()

gray = cv2.cvtColor(image,cv2.COLOR_BGR2GRAY)

thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]

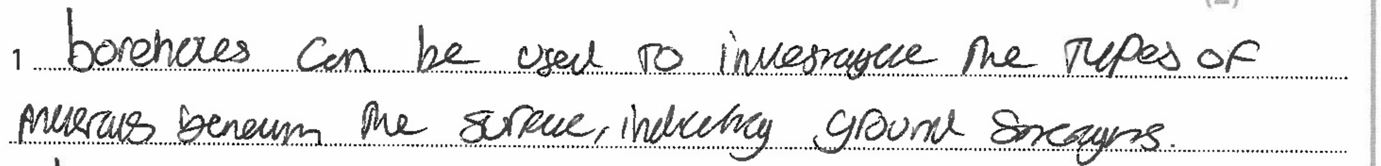

# Applying dilation to make lines solid

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (3,3))

dilation = cv2.dilate(thresh, kernel, iterations = 1)

# Detect horizontal lines

horizontal_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (40,1))

detect_horizontal = cv2.morphologyEx(dilation, cv2.MORPH_OPEN, horizontal_kernel, iterations=2)

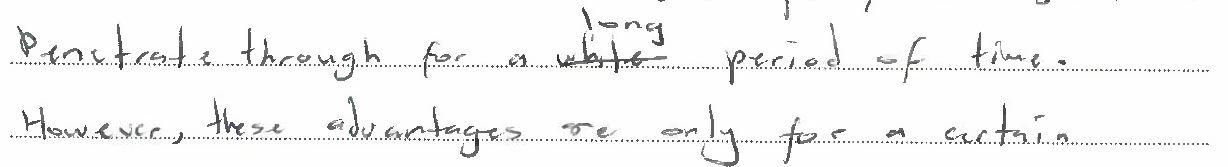

cnts = cv2.findContours(detect_horizontal, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

cnts = cnts[0] if len(cnts) == 2 else cnts[1]

for c in cnts:

    cv2.drawContours(result, [c], -1, (36,255,12), 2)

cv2.imshow('1- gray', gray)

cv2.imshow("2- thresh", thresh)

cv2.imshow("3- detect_horizontal", detect_horizontal)

cv2.imshow("4- result", result)

cv2.waitKey(0)

cv2.destroyAllWindows()

CodePudding user response:

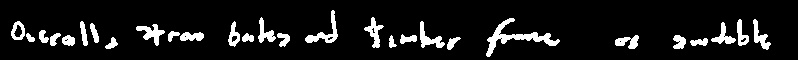

After finding contours, we can eliminate the smaller ones by their area using cv2.contourArea. This works under the assumption that the lines are dotted, so each dot forms a small contour while the handwritten strokes form larger ones.

Code:

import cv2

import numpy as np

# read image, convert to grayscale and apply Otsu threshold

img = cv2.imread('text.jpg')

gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

th = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]

# create black background of same image shape

black = np.zeros((img.shape[0], img.shape[1], 3), np.uint8)

# find contours from threshold image

# [-2] picks the contour list on both OpenCV 3.x (3 return values) and 4.x (2 return values)

contours = cv2.findContours(th, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2]

# draw contours whose area is above certain value

area_threshold = 7

for c in contours:

    area = cv2.contourArea(c)

    if area > area_threshold:

        black = cv2.drawContours(black, [c], 0, (255, 255, 255), 2)


To make this work across more images, you can derive the area threshold from statistics of the contour areas (such as the mean or median) instead of hard-coding it.
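One way to pick the cut-off from the data rather than fixing it at 7 is sketched below. It is only an illustration, not a tested recipe: the helper names `area_threshold_from_median` and `keep_large`, the `factor` of 3.0, and the sample areas are all made up, and it assumes the dots outnumber the text strokes so that the median area is roughly the size of one dot. On a page with little or no dotted ruling, that assumption fails and the median would land on text instead.

```python
import statistics


def area_threshold_from_median(areas, factor=3.0):
    """Return a cut-off area: `factor` times the median of the positive areas.

    `factor` is an arbitrary illustrative value, not a recommended setting.
    """
    positive = [a for a in areas if a > 0]
    if not positive:
        return 0.0
    return factor * statistics.median(positive)


def keep_large(contours, areas, threshold):
    """Keep the contours whose matching area is strictly above `threshold`."""
    return [c for c, a in zip(contours, areas) if a > threshold]


# Made-up areas: six dot-sized blobs and three larger text strokes.
# With OpenCV these would come from: areas = [cv2.contourArea(c) for c in contours]
areas = [2.0, 3.0, 2.5, 3.0, 2.0, 120.0, 85.5, 2.5, 240.0]
names = list("abcdefghi")  # stand-ins for the contour objects

threshold = area_threshold_from_median(areas)
text_like = keep_large(names, areas, threshold)
print(threshold, text_like)
```

In the script above, `threshold` would take the place of `area_threshold`, and the contours returned by `keep_large` would be the ones passed to cv2.drawContours. The mean could be swapped in for the median, but a few very large text contours pull the mean upward, so the median is usually the steadier choice when dots dominate.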