On Wikipedia, the construction of a Huffman tree is described as such:

The simplest construction algorithm uses a priority queue where the node with lowest probability is given highest priority:

Create a leaf node for each symbol and add it to the priority queue.

While there is more than one node in the queue:

Remove the two nodes of highest priority (lowest probability) from the queue

Create a new internal node with these two nodes as children and with probability equal to the sum of the two nodes' probabilities.

Add the new node to the queue.

The remaining node is the root node and the tree is complete.

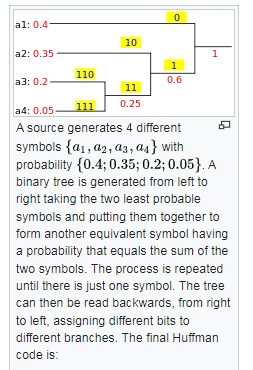

But their example for deconstruction (assigning 0 and 1) is a bit weird:

It's easy to see that except from the leaf node, the frequencies of intermediate nodes don't seem to matter that much? For instance, a3 > a4 and 0 are added to a3 while 1 are added to a4's string. But, a1 < a2 a3 a4 and the same thing is done. So, does the frequencies matter?

The same thing happens in videos from Leios Lab and Reducible (two well-known math/programming Youtubers). And also these Stack question:

Which node go in left or right on addition of weight while huffman tree creation (stating that you should be consistent when putting nodes left/right based on frequencies)

What happens if we are inconsistent while creating Huffman Tree? and Huffman Tree Coding (stating that while being inconsistent does change the code after completion, it doesn't matter, as the height stay the same)

So here is my questions:

Does it matter if children nodes are place left/right randomly?

If it matter (or doesn't matter), is there a standard? For example, lower node right, higher node left, lower gets a

1, higher get a0(or whichever combination of these four actions)? It probably doesn't matter with the efficiency if you're consistent already (as all the 3 answers above indicate) but is there a common consensus?

For example, this online generator follows the lower left, higher right, lower 0, higher 1 rule, but others can follow another rule.

P.S: Noted that the second question is two-part, as the right/left problem is related to construction, while the 0/1 relates to when you assign value. Normally you would do both in a same Huffman function anyway but I'm creating an animation on this and the order probably does matter visually.

CodePudding user response:

No. The code will still be optimal. (The accepted answer to the linked question is wrong!)

No. The standard, to the extent that there is one, is to ignore the resulting tree entirely, and use only the resulting code lengths (in bits) for each symbol, and a well-defined lexographic ordering of the symbols, to generate the actual ones and zeros of the codes. This is called a Canonical Huffman code. There are many possible assignments of ones and zeros to the symbol codes, given that the zero and the one can be arbitrarily assigned to left or right for every branch. Using a canonical procedure establishes an agreement between the encoder and decoder as to a single possible code for any given set of lengths and symbols. This not only sets a standard for the zeros and ones, but importantly, since the purpose is compression, reduces the amount of information that needs to be sent from the encoder to the decoder in order to describe the code.