I'm trying to make a simple scene with some geometry and a movable camera. So far I've been able to render basic geometry in 2D and transform that geometry using matrices. Following this I moved on to the

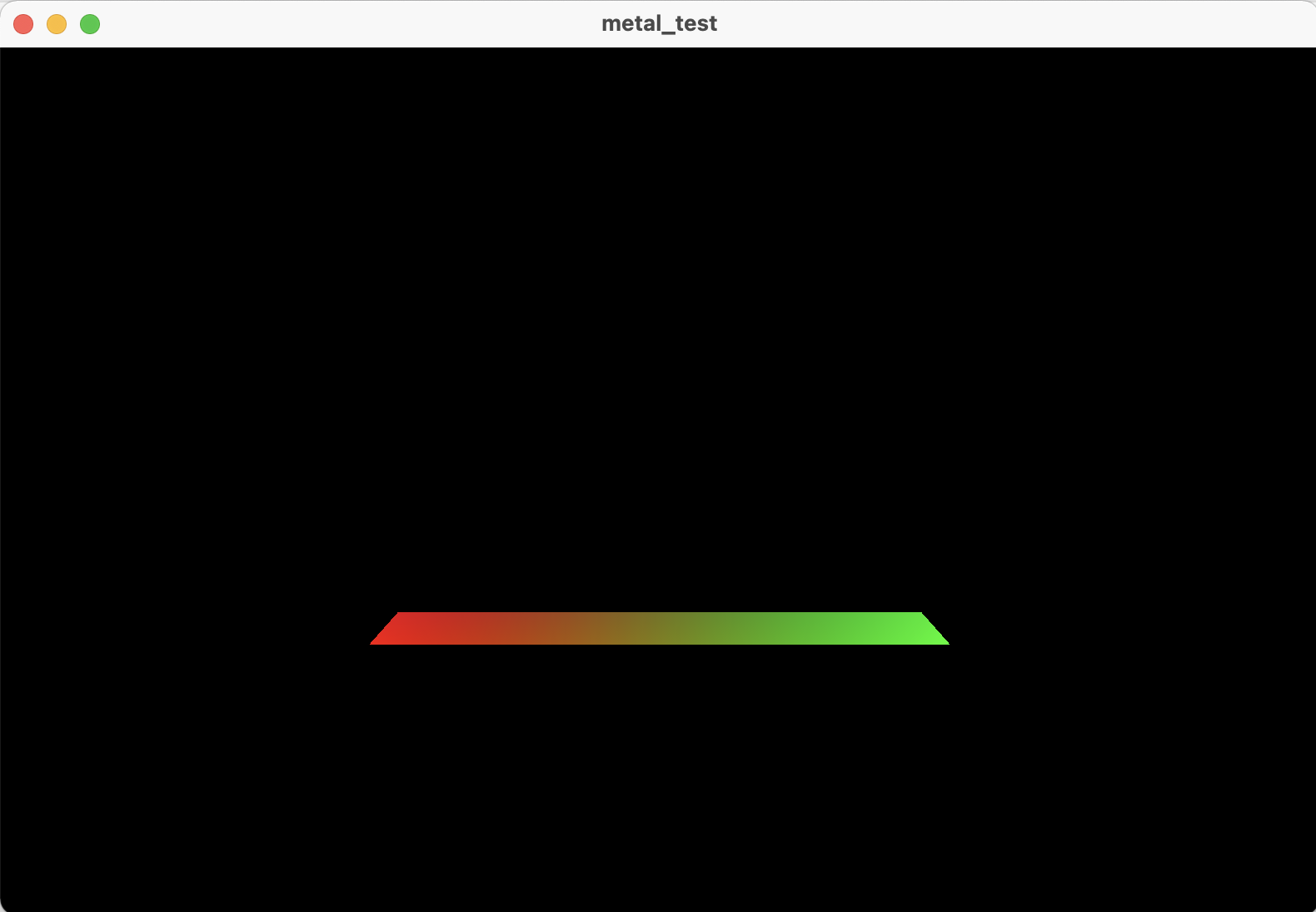

When moving further away, though, parts of the scene are wrongly culled, notably the ones farther from the camera: Camera Position: (0,0,2), Camera Direction: (0,0,-1)

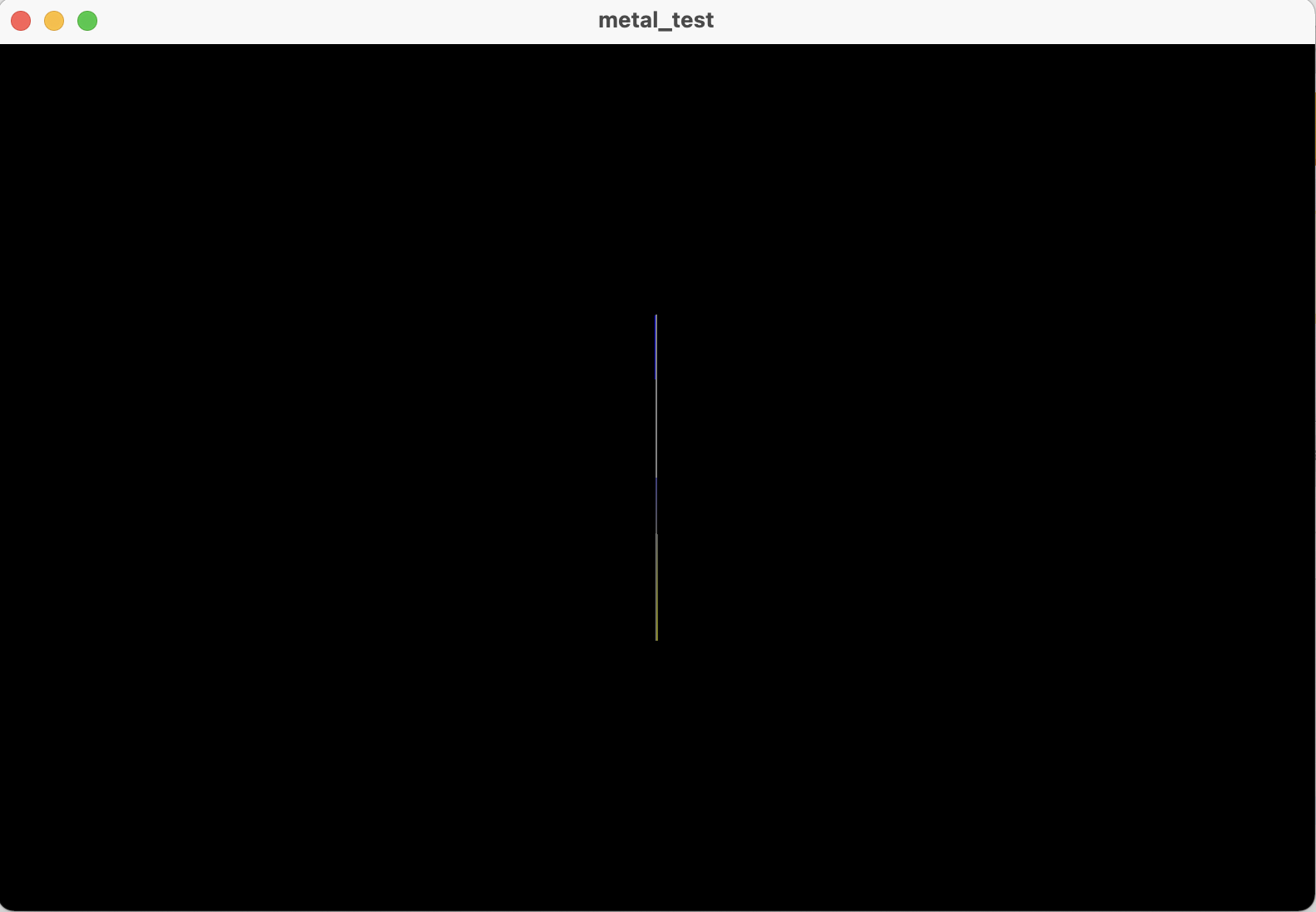

Rotating the Camera also produces confusing results: Camera Position: (0,0,1), Camera Direction: (cos(250°), 0, sin(250°)), yes I converted to radians
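For reference, the direction vector above can be reproduced on the CPU. The following is a minimal C++ sketch, not code from the project: `Vec3` and `direction_from_yaw_degrees` are hypothetical names introduced for illustration, and it assumes the angle is a yaw measured in the XZ plane.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// Hypothetical helper: unit direction in the XZ plane for a yaw angle in degrees.
// The angle is converted to radians before calling cos/sin.
Vec3 direction_from_yaw_degrees(float yaw_degrees) {
    const float pi = 3.14159265f;
    float yaw = yaw_degrees * pi / 180.0f;
    return Vec3{ std::cos(yaw), 0.0f, std::sin(yaw) };
}
```

For 250° this gives approximately (-0.34202, 0, -0.93969), which matches the hard-coded `dir` in the vertex shader.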

My Suspicions

- The projection isn't converting the vertices from view space to Normalised Device Coordinates correctly. Also, when comparing the first two images, the lower part of the triangle seems to get bigger as the camera moves away, which doesn't appear to be right either.

- The view matrix is presumably not correct either, as I'm pretty sure what's described above isn't supposed to happen.
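One way to separate these two suspicions is to check the view transform on the CPU, independent of the projection. The sketch below is a hedged C++ port of the look-at construction used in the vertex shader under Code Samples. The helper names (`Vec3`, `to_view_space`, and so on) are hypothetical and not part of the project. If the construction is right, the camera position should map to the origin and a point one unit along the view direction should map to roughly (0, 0, 1).

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return { a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
}
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(dot(v, v));
    return { v.x / len, v.y / len, v.z / len };
}

// Left-handed look-at: transforms a world-space point p into view space.
// Equivalent to multiplying by a matrix whose rows are (x, -dot(x, eye)),
// (y, -dot(y, eye)), (z, -dot(z, eye)).
Vec3 to_view_space(Vec3 eye, Vec3 target, Vec3 up, Vec3 p) {
    Vec3 z = normalize(sub(target, eye)); // forward axis
    Vec3 x = normalize(cross(up, z));     // right axis
    Vec3 y = cross(z, x);                 // true up axis
    Vec3 rel = sub(p, eye);
    return { dot(x, rel), dot(y, rel), dot(z, rel) };
}
```

If both checks pass for the camera positions and directions listed above, the view matrix is unlikely to be the source of the problem.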

Code Samples

MainShader.metal

#include <metal_stdlib>

#include <Shared/Primitives.h>

#include <Shared/MainRendererShared.h>

using namespace metal;

struct transformed_data {

float4 position [[position]];

float4 color;

};

// vertex shader

vertex transformed_data vertex_shader(uint vertex_id [[vertex_id]],

constant _vertex *vertices [[buffer(0)]],

constant _uniforms& uniforms [[buffer(1)]])

{

transformed_data output;

float3 dir = {-0.34202, 0, -0.9396926};

float3 inEye = float3{ 0, 0, 1 }; // position

float3 inTo = inEye + dir; // look-at target: position plus direction

float3 inUp = float3{ 0, 1, 0};

float3 z = normalize(inTo - inEye);

float3 x = normalize(cross(inUp, z));

float3 y = cross(z, x);

float3 t = (float3) { -dot(x, inEye), -dot(y, inEye), -dot(z, inEye) };

float4x4 viewm = float4x4(float4 { x.x, y.x, z.x, 0 },

float4 { x.y, y.y, z.y, 0 },

float4 { x.z, y.z, z.z, 0 },

float4 { t.x, t.y, t.z, 1 });

float _nearPlane = 0.1f;

float _farPlane = 100.0f;

float _aspectRatio = uniforms.viewport_size.x / uniforms.viewport_size.y;

float va_tan = 1.0f / tan(0.6f * 3.14f * 0.5f);

float ys = va_tan;

float xs = ys / _aspectRatio;

float zs = _farPlane / (_farPlane - _nearPlane);

float4x4 projectionm = float4x4((float4){ xs, 0, 0, 0},

(float4){ 0, ys, 0, 0},

(float4){ 0, 0, zs, 1},

(float4){ 0, 0, -_nearPlane * zs, 0 } );

float4 projected = (projectionm*viewm) * float4(vertices[vertex_id].position,1);

vector_float2 viewport_dim = vector_float2(uniforms.viewport_size);

output.position = vector_float4(0.0, 0.0, 0.0, 1.0);

output.position.xy = projected.xy / (viewport_dim / 2);

output.position.z = projected.z;

output.color = vertices[vertex_id].color;

return output;

}

// fragment shader

fragment float4 fragment_shader(transformed_data in [[stage_in]])

{

return in.color;

}

These are the vertex definitions.

let triangle_vertices = [_vertex(position: [ 480.0, -270.0, 1.0], color: [1.0, 0.0, 0.0, 1.0]),

_vertex(position: [-480.0, -270.0, 1.0], color: [0.0, 1.0, 0.0, 1.0]),

_vertex(position: [ 0.0, 270.0, 0.0], color: [0.0, 0.0, 1.0, 1.0])]

// TO-DO: make this use 4 vertices and 6 indices

let quad_vertices = [_vertex(position: [ 480.0, 270.0, 0.5], color: [0.5, 0.5, 0.5, 1.0]),

_vertex(position: [ 480.0, -270.0, 0.5], color: [0.5, 0.5, 0.5, 1.0]),

_vertex(position: [-480.0, -270.0, 0.5], color: [0.5, 0.5, 0.5, 1.0]),

_vertex(position: [-480.0, 270.0, 0.5], color: [0.5, 0.5, 0.5, 1.0]),

_vertex(position: [ 480.0, 270.0, 0.5], color: [0.5, 0.5, 0.5, 1.0]),

_vertex(position: [-480.0, -270.0, 0.5], color: [0.5, 0.5, 0.5, 1.0])]

This is the initialisation code of the Depth Stencil Descriptor and State.

_view.depthStencilPixelFormat = MTLPixelFormat.depth32Float

_view.clearDepth = 1.0

// other render initialisation code

let depth_stencil_descriptor = MTLDepthStencilDescriptor()

depth_stencil_descriptor.depthCompareFunction = MTLCompareFunction.lessEqual

depth_stencil_descriptor.isDepthWriteEnabled = true

depth_stencil_state = try! _view.device!.makeDepthStencilState(descriptor: depth_stencil_descriptor)!

If you have any idea why it's not working, have working code of your own, or know of any public samples containing a working first-person camera, feel free to help me out.

Thank you in advance!

(Please ignore any spelling or similar mistakes; English is not my primary language.)

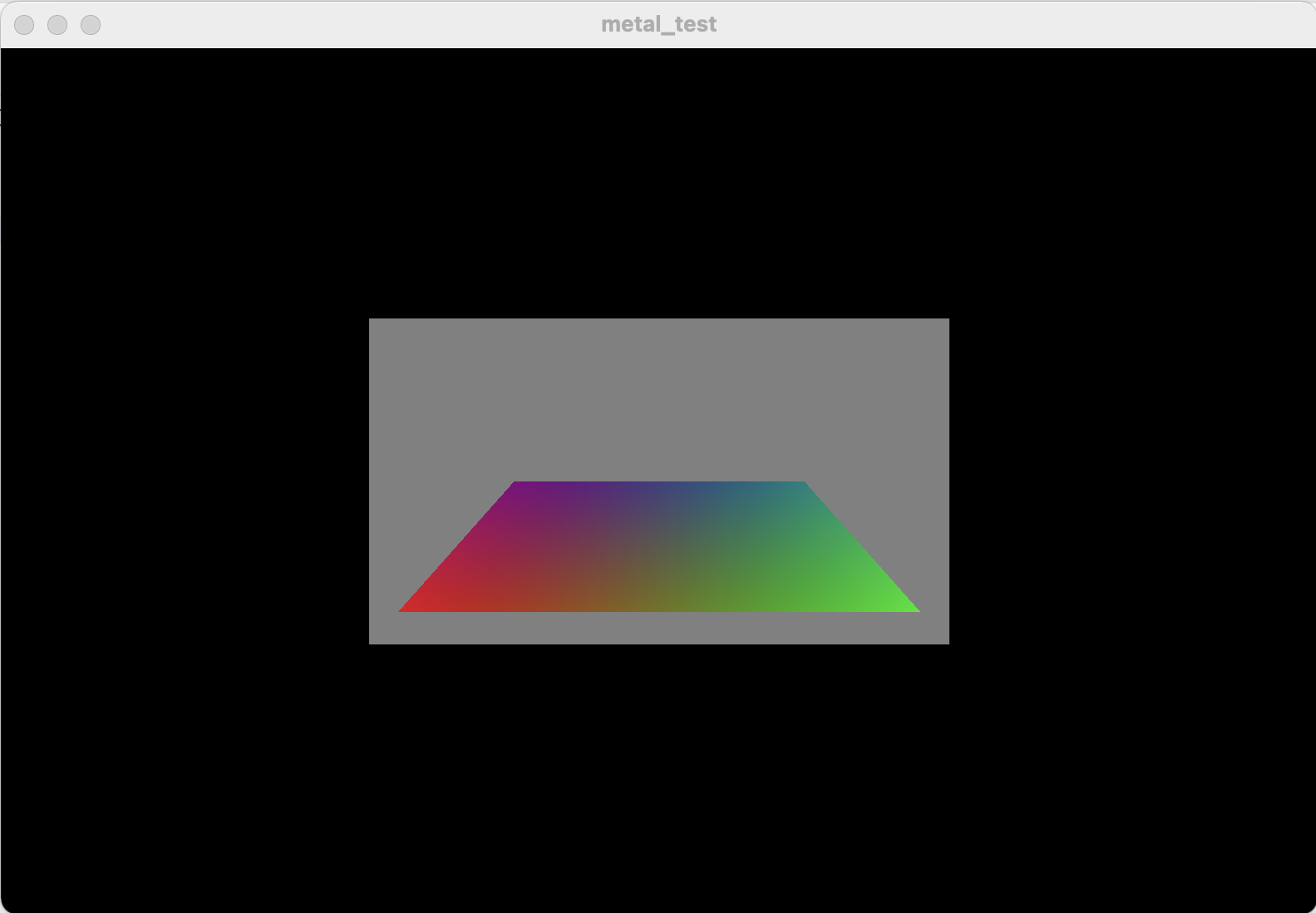

Answer:

Your view and projection matrix implementations are correct, but the following part of your vertex shader is wrong:

float4 projected = (projectionm*viewm) * float4(vertices[vertex_id].position,1);

vector_float2 viewport_dim = vector_float2(uniforms.viewport_size);

output.position = vector_float4(0.0, 0.0, 0.0, 1.0);

output.position.xy = projected.xy / (viewport_dim / 2);

output.position.z = projected.z;

output.color = vertices[vertex_id].color;

You should replace it with the following:

output.position = projectionm * viewm * float4(vertices[vertex_id].position,1);

output.color = vertices[vertex_id].color;

Also, I think your field of view is a bit too wide (0.6 × 180° = 108°), which may make the scene harder to debug. Narrowing the FOV to 60 degrees may help. Note the float literals: 60 / 180 with integer literals would evaluate to 0.

float va_tan = 1.0f / tan((60.0f / 180.0f) * 3.14f * 0.5f);
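The same conversion can be written as a small helper so that the degrees-to-radians step is explicit. This is a C++ sketch rather than Metal code, and `focal_scale_from_fov_degrees` is a hypothetical name:

```cpp
#include <cmath>

// Hypothetical helper: returns 1 / tan(fov / 2) for a vertical field of view
// given in degrees. All operands are floats, so no integer division occurs.
float focal_scale_from_fov_degrees(float fov_degrees) {
    const float pi = 3.14159265f;
    float fov_radians = fov_degrees * pi / 180.0f;
    return 1.0f / std::tan(fov_radians * 0.5f);
}
```

For 60 degrees this returns roughly 1.732, and for the original 108 degrees roughly 0.727, so the narrower FOV magnifies the scene by a bit more than a factor of two.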