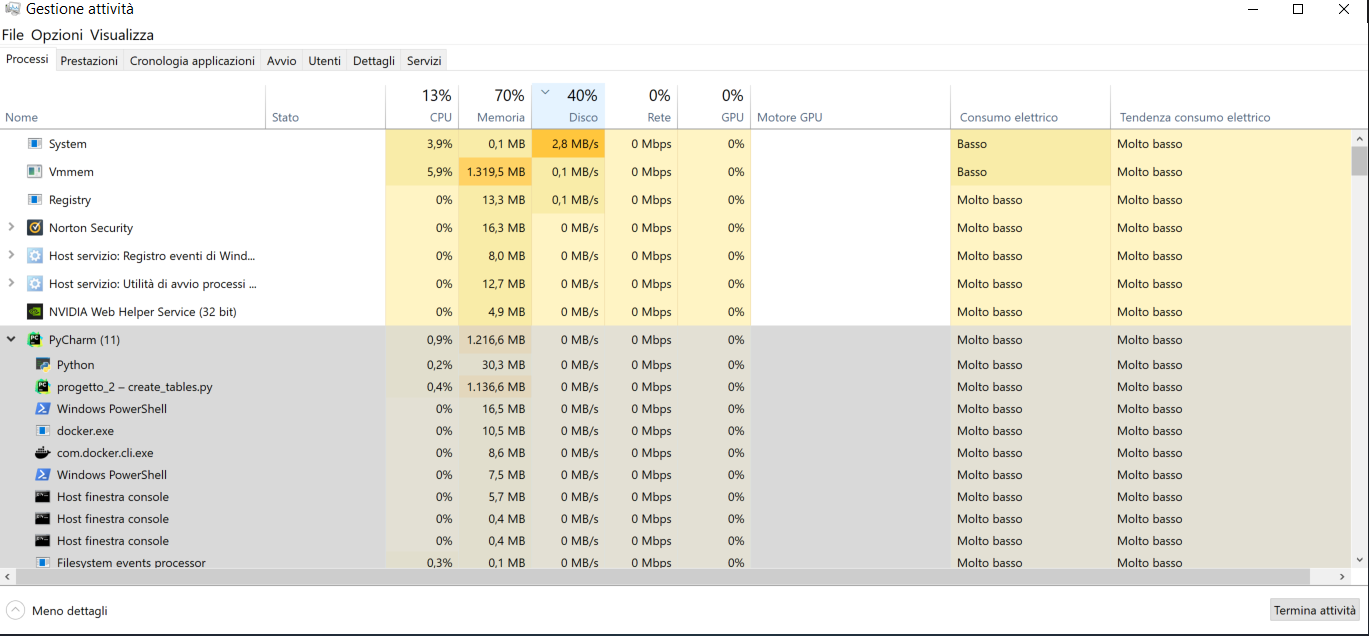

I am trying to load several GB of data, stored locally in 6 txt files, into tables in a dockerized local DynamoDB instance, using Python 3 and the boto3 library.

The problem is speed: the estimated time to load the data from a single file (~10M lines) is 19 hours. I used a profiler to find the bottleneck, and most of the time is spent in the boto3 call that stores the items in the database.

def add_batch(self, items, table):
    request = {
        table: []
    }
    if type(items) is not list:
        print(f'\nError while loading item\'s batch, expecting a <list> but got {type(items)}')
        return None
    for item in items:
        request[table].append(
            {
                'PutRequest': {
                    'Item': item
                }
            }
        )
    while request:
        response = self._client.batch_write_item(RequestItems=request)  # by far the slowest call
        if response['UnprocessedItems']:
            request = response['UnprocessedItems']
            print('unprocessed items: ', request)
        else:
            request = None
    return 0

The batch size is 25 items and the throughput for the table is set to 100 (I tried many values, with little effect).
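For reference, here is a minimal sketch of the same batching logic written as a standalone function, so it can be timed or tested without the class. It splits any iterable into chunks of 25 (the per-call limit of BatchWriteItem) and backs off between retries instead of resending unprocessed items immediately. The names `chunked` and `write_all` and the `max_retries` and `base_delay` parameters are made up for illustration. `client` is assumed to be a boto3 DynamoDB client, or anything with a compatible `batch_write_item` method.

```python
import time
from itertools import islice

MAX_BATCH = 25  # BatchWriteItem accepts at most 25 put/delete requests per call


def chunked(iterable, size=MAX_BATCH):
    """Yield successive lists of at most `size` items."""
    it = iter(iterable)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def write_all(client, table, items, max_retries=8, base_delay=0.05):
    """Write `items` to `table` in batches, retrying unprocessed items.

    `client` is assumed to expose batch_write_item like a boto3 DynamoDB
    client. Returns the number of items the service reported as written.
    """
    written = 0
    for chunk in chunked(items):
        request = {table: [{'PutRequest': {'Item': item}} for item in chunk]}
        for attempt in range(max_retries + 1):
            response = client.batch_write_item(RequestItems=request)
            unprocessed = response.get('UnprocessedItems') or {}
            pending = len(unprocessed.get(table, []))
            written += len(request[table]) - pending
            if not pending:
                break
            request = unprocessed
            time.sleep(base_delay * 2 ** attempt)  # exponential backoff
        else:
            # Every attempt left something unprocessed; give up loudly.
            raise RuntimeError(f'{pending} items still unprocessed after retries')
    return written
```

With the low-level client, each item has to be in DynamoDB's typed format, for example `{'id': {'S': 'abc'}}`. This only tidies up the retry loop. It does not change how fast a single `batch_write_item` call returns.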

Understandably, I got better results when the container ran with the InMemory option set to True. I had to change that, because otherwise the data is lost on every restart and I can't wait hours for it to reload each time.

At the moment I start the container with this simple command:

docker run -p 8000:8000 amazon/dynamodb-local -jar DynamoDBLocal.jar

I tried some parallelization, but the boto3 library doesn't seem to like it: it keeps raising exceptions.
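The exceptions are not shown here, so this is only a guess. One common cause is sharing a single boto3 session or resource object across threads; the boto3 documentation describes those as not thread-safe. Below is a minimal sketch of a thread pool in which each worker thread lazily creates its own client. `make_client`, `get_client`, `write_batch` and `parallel_load` are hypothetical names, and the endpoint, region and dummy credentials in the usage comment are assumptions about a typical DynamoDB Local setup.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

_local = threading.local()  # holds one client per worker thread


def get_client(make_client):
    """Return this thread's client, creating it on first use."""
    if not hasattr(_local, 'client'):
        _local.client = make_client()
    return _local.client


def write_batch(make_client, table, batch):
    """Send one batch (at most 25 items) and resend anything left unprocessed."""
    client = get_client(make_client)
    request = {table: [{'PutRequest': {'Item': item}} for item in batch]}
    while request:
        response = client.batch_write_item(RequestItems=request)
        request = response.get('UnprocessedItems') or None
    return len(batch)


def parallel_load(make_client, table, batches, workers=8):
    """Write an iterable of batches using a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(lambda b: write_batch(make_client, table, b), batches))


# Hypothetical usage against DynamoDB Local (values below are assumptions):
# import boto3
# make_client = lambda: boto3.session.Session().client(
#     'dynamodb', endpoint_url='http://localhost:8000', region_name='us-east-1',
#     aws_access_key_id='dummy', aws_secret_access_key='dummy')
# parallel_load(make_client, 'my_table', batches)
```

Note that `pool.map` submits every batch up front, so for a 10M-line file it is probably better to feed it bounded slices of batches rather than the whole file at once. Whether threads actually speed up writes to DynamoDB Local is not guaranteed.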

Answer:

DynamoDB Local is not designed for performance at all. It is meant only for offline functional development and testing, before you deploy to production on the actual DynamoDB service.