I'm working with a dataset that has two level grouping. Here I give an example:

set.seed(123)

example=data.frame(

id = c(rep(1,20),rep(2,20)), # grouping

Grp = rep(c(rep('A',10),rep('B',10)),2), # grouping

target = c(rep(1:10,2), rep(20:11,2)),

var1 = sample(1:100,40,replace=TRUE),

var2 =sample(1:100,40,replace=TRUE)

)

In this case, the data is grouped by id and by Grp. I want to calculate the correlation of target with var1 and var2. However, I don't know which is the most efficient way to apply this using a tidy approach and based on the groups.

I tried with dplyr approach. Like using:

example %>% group_by(id,Grp) %>%

summarise(cor(target,c(var1,var2))) # length error

Or even creating a custom function and applying it. But this last only summarise all the data without grouping:

corr_analisis_e = function(df){

return( cor(df[,'target'] , df[,c('var1','var2')]) )

}

example %>% group_by(id,Grp) %>% corr_analisis_e() # get all the data at once

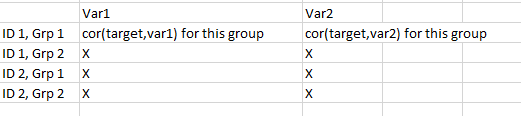

As output, I would expect to get something like a matrix or dataframe of 4 rows and 2 columns, where each row is a group (id and Grp) and columns are var1 and var2. Every value the result from the cor() method.

CodePudding user response:

example %>%

group_by(id, Grp) %>%

summarise(across(c(var1, var2), ~ cor(.x, target)))

# A tibble: 4 x 4

# Groups: id [2]

id Grp var1 var2

<dbl> <chr> <dbl> <dbl>

1 1 A -0.400 0.532

2 1 B -0.133 -0.187

3 2 A -0.655 -0.103

4 2 B -0.580 0.445

The grouping columns can then be removed with %>% ungroup %>% select(var1:var2).